#deepfakes

#ai-regulation #digital-services-act #content-moderation #grok #child-sexual-abuse-material #ai-safety

from www.aljazeera.com

3 days ago

The AI alarm cycle: Lots of talk, little action

What is the point of AI alarmism if the people warning the world aren't changing course? A series of warnings from artificial intelligence (AI) industry insiders shows how the debate around AI drives extreme news cycles, swinging between hype and alarm. The result is media coverage that overlooks the intricacies of this technology and its impact on everyday life. We examine the real risks, what's being overstated, and what major tech companies stand to gain from all the fearmongering.

Artificial intelligence

Film

from Fortune

6 days ago

Matthew McConaughey sounds the alarm for artists in fight against AI misuse: 'Own yourself...so no one can steal you'

Matthew McConaughey urges creators to legally protect their voice and likeness as AI-driven replication is already here and threatens unauthorized simulation.

US politics

from Gothamist

6 days ago

As Hochul posts AI images of herself, she aims to ban the tech in political attack ads

Governor Kathy Hochul seeks a ban on AI-generated opposition campaign ads while concurrently using AI-generated images in her own political promotions, raising legal and ethical concerns.

from The Business of Fashion

1 week ago

How Fake AI Influencers Generate Real Cash

Beauty and fashion influencer Eni Popoola first learned she'd been deepfaked the way many creators do: from her audience. A YouTube ad sent by a follower featured her face and her voice, promoting an online course she had never heard of. "People were sending screenshots saying, 'Hey, there's this video of you, and we obviously know it's not you, because this is not something that you would talk about,'" Popoola said. She's far from alone, as the experience of finding one's AI doppelganger - promoting unknown supplements, self-help content or beauty products - is becoming increasingly commonplace in the creator economy.

Marketing tech

from www.independent.co.uk

1 week ago

'No online platform gets free pass' when it comes to child safety, says Starmer

World news

from Business Insider

1 week ago

'Tom Cruise' and 'Brad Pitt' fight over Jeffrey Epstein in a viral AI video created using China's buzzy new Seedance tool

In a new viral AI video, Brad Pitt and Tom Cruise pummel each other on a rooftop in a cinematic action sequence. It's not a trailer for a new blockbuster, and it's not actually Pitt and Cruise, though it looks a lot like them. The video is so realistic, in fact, that the clearest sign it's made with AI is the dialogue.

Artificial intelligence

from Fast Company

2 weeks ago

Love, lies, and LLMs: How to protect yourself from AI romance scams

Romance scams used to feel like a cliché. Everyone pictured an email from an overseas "prince" that was poorly written and full of typos and pleas for cash. Now, that cliché is dead. Today's romance scams are industrial-scale operations. Attackers use artificial intelligence to clone voices, create deepfake video calls, and write scripts with large language models (LLMs). In 2024 alone, the Federal Trade Commission reported that financial losses to romance scams skyrocketed, with victims losing $1.14 billion.

Digital life

from The Hacker News

2 weeks ago

North Korea-Linked UNC1069 Uses AI Lures to Attack Cryptocurrency Organizations

The North Korea-linked threat actor known as UNC1069 has been observed targeting the cryptocurrency sector to steal sensitive data from Windows and macOS systems with the ultimate goal of facilitating financial theft. "The intrusion relied on a social engineering scheme involving a compromised Telegram account, a fake Zoom meeting, a ClickFix infection vector, and reported usage of AI-generated video to deceive the victim," Google Mandiant researchers Ross Inman and Adrian Hernandez said.

Information security

from Nextgov.com

2 weeks ago

Trump's nutrition website directs users to Elon Musk's Grok

Grok's placement on the government website realfood.gov follows uproar over the chatbot's creation of millions of sexualized deepfakes of women and children in late December and its spouting of racist and antisemitic content last summer. Other government agencies are also using the chatbot made by xAI, Musk's AI company, but the prominent placement of Grok on realfood.gov over the weekend appears to be one of the first instances of the federal government pointing online visitors to Musk's chatbot.

US politics

fromArs Technica

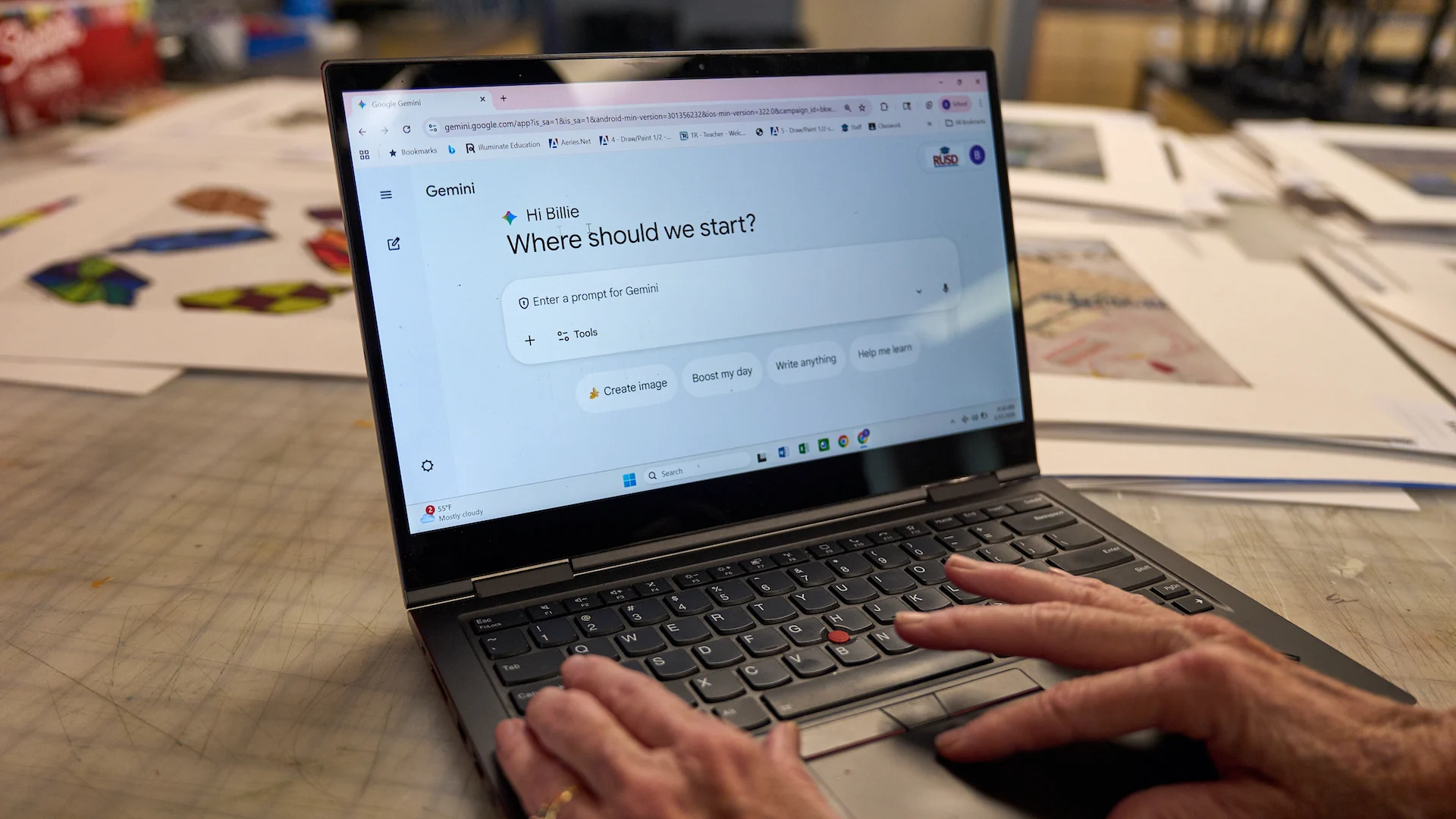

2 weeks agoUpgraded Google safety tools can now find and remove more of your personal info

There are people on the Internet who want to know all about you! Unfortunately, they don't have the best of intentions, but Google has some handy tools to address that, and they've gotten an upgrade today. The "Results About You" tool can now detect and remove more of your personal information. Plus, the tool for removing non-consensual explicit imagery (NCEI) is faster to use. All you have to do is tell Google your personal details first. That seems safe, right?

Privacy technologies

from Boston.com

2 weeks ago

Mass. State Police report fake account impersonating trooper

"Sarita" on Instagram appears to be the account the agency reported, showing a blonde woman wearing a Massachusetts State Police uniform, complete with a badge and a gun. In the pictures posted since Dec. 29, her name tag reads "Sara," "St. Pay," and "St. May." In one, the letters are unintelligible. The account has more than 100,000 followers as of Sunday evening. MSP declined to confirm the account.

Law

from TechRepublic

2 weeks ago

UK to Unveil 'World-First' Deepfake Detection Plan

The aim is to curb the rapid spread of manipulated images and videos used for abuse, harassment, and criminal exploitation. The initiative will be led by the Home Office and developed in collaboration with major technology companies, academic researchers, and technical experts. Ministers say the framework will explore how emerging technologies can better recognise and assess deepfakes, while also setting clear expectations for how companies detect and respond to them.

UK politics

fromABC7 Los Angeles

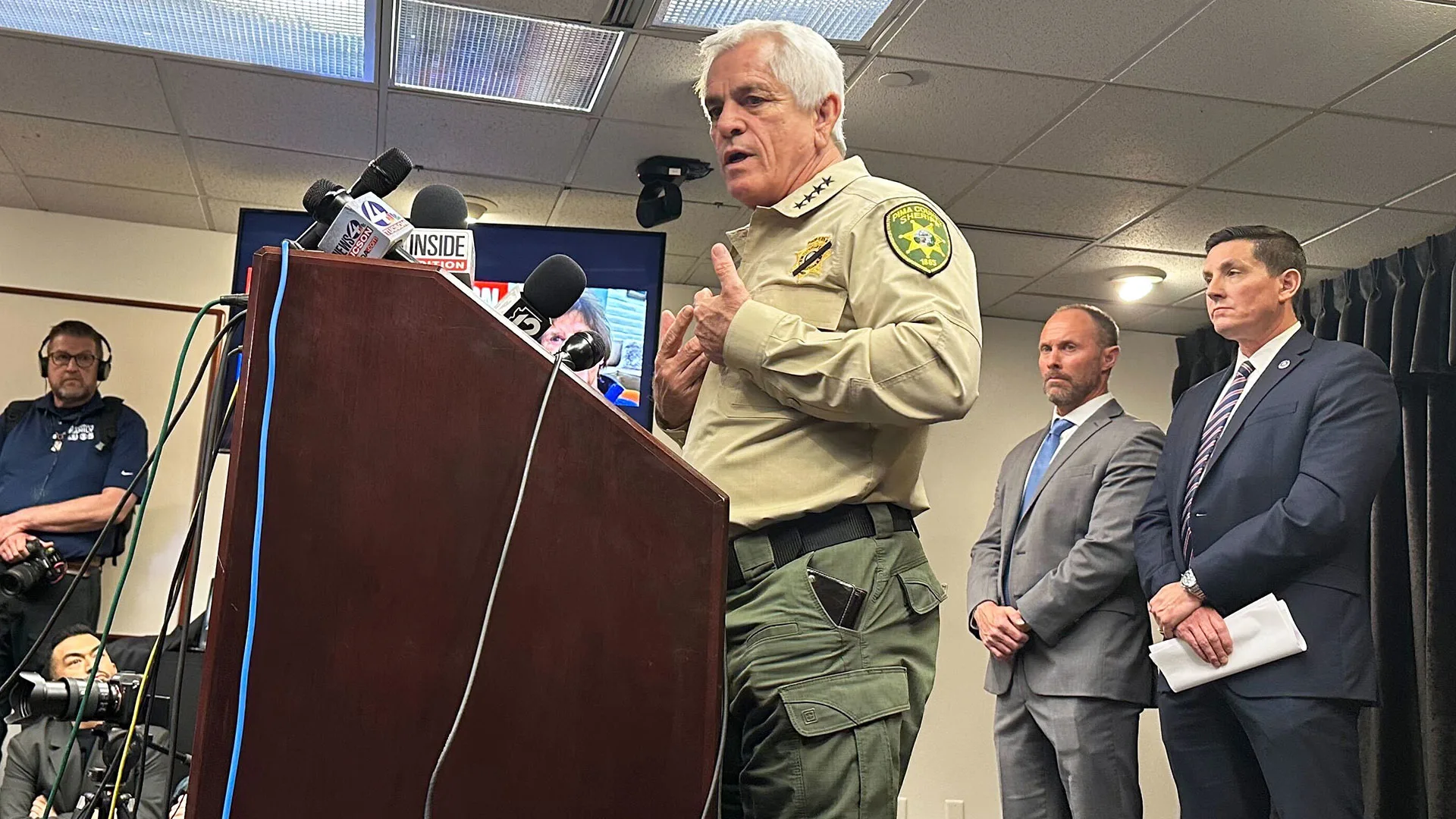

3 weeks agoParis prosecutors raid X offices as part of investigation into child abuse images and deepfakes

The French investigation was opened in January last year by the prosecutors' cybercrime unit, the Paris prosecutors' office said in a statement. It's looking into alleged "complicity" in possessing and spreading pornographic images of minors, sexually explicit deepfakes, denial of crimes against humanity and manipulation of an automated data processing system as part of an organized group, among other charges.

France news

from Fast Company

3 weeks ago

Elon Musk's X offices in Paris were just raided by French prosecutors. Here's why

X and Musk's artificial intelligence company xAI also face intensifying scrutiny from Britain's data privacy regulator, which opened formal investigations into how they handled personal data when they developed and deployed Musk's artificial intelligence chatbot Grok. Grok, which was built by xAI and is available through X, sparked global outrage last month after it pumped out a torrent of sexualized nonconsensual deepfake images in response to requests from X users.

France news

from www.npr.org

3 weeks ago

Paris prosecutors raid X offices as part of investigation into child abuse images

PARIS - French prosecutors raided the offices of social media platform X on Tuesday as part of a preliminary investigation into allegations including spreading child sexual abuse images and deepfakes. They have also summoned billionaire owner Elon Musk for questioning. X and Musk's artificial intelligence company xAI also face intensifying scrutiny from Britain's data privacy regulator, which opened formal investigations into how they handled personal data when they developed and deployed Musk's artificial intelligence chatbot Grok.

US news

Artificial intelligence

from www.theguardian.com

3 weeks ago

'Deepfakes spreading and more AI companions': seven takeaways from the latest artificial intelligence safety report

Latest AI models significantly improved reasoning and performance in math, coding and science, yet remain error-prone, hallucinate, and cannot reliably automate long, complex tasks.

California

from KQED

1 month ago

California Investigates Elon Musk's AI Company After 'Avalanche' of Complaints About Sexual Content

California is investigating X's AI image tool for producing nonconsensual sexual deepfakes and has strengthened laws allowing prosecutors to sue companies enabling such content.

Privacy technologies

from Social Media Today

1 month ago

US Attorneys General Call on X to Address Sexualized Deep Fakes

xAI's Grok enabled and encouraged creation of non-consensual intimate images, prompting attorneys general to demand immediate, additional protections for users, especially women and girls.

from www.bbc.com

1 month ago

Belfast City Council set to suspend X use over AI deepfake concerns

Belfast City Council looks set to suspend its use of social media site X, formerly known as Twitter, following recent concerns over its AI tool Grok. The council's strategic and resources committee on Friday decided it should "suspend posting on its account and signpost followers towards the council's other social media channels". The decision is subject to ratification at the next full council meeting on 2 February.

UK politics

from Engadget

1 month ago

Ring can now verify if a video has been altered

Ring has launched a new tool that can tell you if a video clip captured by its camera has been altered or not. The company says that every video downloaded from Ring starting in December 2025 going forward will come with a digital security seal. "Think of it like the tamper-evident seal on a medicine bottle," it explained. Its new tool, called Ring Verify, can tell you if a video has been altered in any way.

Gadgets
