#content-licensing

#generative-ai #ai-training-data #netflix #copyright #disney #artificial-intelligence #google #openai #reddit #amazon

World news

from TechCrunch

1 month ago · Wikimedia Foundation announces new AI partnerships with Amazon, Meta, Microsoft, Perplexity and others

Wikimedia Foundation expanded its Wikimedia Enterprise partnerships with major AI and tech companies, supplying them with fast, high-volume access to Wikimedia content while monetizing that access.

Artificial intelligence

from www.mercurynews.com

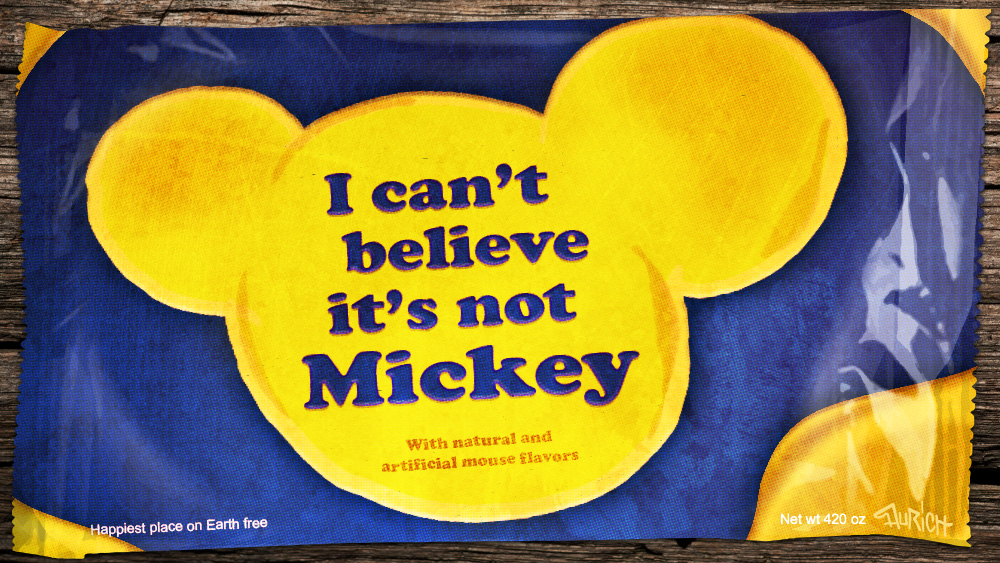

2 months ago · Disney invests $1 billion in OpenAI in deal to bring characters like Mickey Mouse to Sora AI video tool

Disney will invest $1 billion in OpenAI and license over 200 Disney, Marvel, Pixar and Star Wars characters to OpenAI's Sora AI video generator under a three-year deal.

Media industry

from www.theguardian.com

2 months ago · AI is changing the relationship between journalist and audience. There is much at stake | Margaret Simons

AI-generated search summaries reduce traffic to news websites, undermining media business models by decreasing subscribers and advertising revenue.

from The Verge

3 months ago · Netflix might make its own video podcasts

Netflix's video podcast ambitions may extend beyond its recent deal with Spotify. A new report from Bloomberg suggests Netflix is preparing to create original video podcasts exclusive to its streaming service as well. The streaming giant has reportedly contacted talent to create the new shows and plans to update the layout of its mobile app to help users find the video podcasts.

Television

from Digiday

4 months ago · Media Briefing: From standards to marketplaces: the AI 'land grab' is on

It's starting to feel like every corner of the industry wants to be the one to write the rulebook on AI and help publishers get paid for it, and the space is getting almost as dense as the buzzwords themselves. From trade groups racing to set AI standards to vendors rolling out content marketplaces, with everyone else piling in with provenance tech and licensing schemes, the space is filling up fast.

Artificial intelligence

from stupidDOPE | Est. 2008

4 months ago · The Future of Content Licensing: How RSL Bridges Publishers and AI

For decades, publishers large and small have created the news, culture, entertainment, and educational resources that shape how society consumes information. Yet in recent years, the rise of artificial intelligence has added a new twist to the ongoing struggle for sustainable publishing. AI companies are building tools capable of generating responses, summaries, and insights trained on vast amounts of web content. The problem? Many publishers see little to no compensation for their role in shaping the data that fuels these systems.

Artificial intelligence

from WIRED

5 months ago · USA Today Enters Its Gen AI Era With a Chatbot

The publishing company behind USA Today and 220 other publications is rolling out a chatbot-like tool called DeeperDive that can converse with readers, summarize insights from its journalism, and suggest new content from across its sites. "Visitors now have a trusted AI answer engine on our platform for anything they want to engage with, anything they want to ask," Mike Reed, CEO of Gannett and the USA Today Network, said at the WIRED AI Power Summit in New York, an event that brought together voices from the tech industry, politics, and the world of media. "And it is performing really great," he added.

Media industry

from TechCrunch

5 months ago · Google is a 'bad actor,' says People CEO, accusing the company of stealing content

"Google has one crawler, which means they use the same crawler for their search, where they still send us traffic, as they do for their AI products, where they steal our content."

Artificial intelligence

from ZDNET

5 months ago · Publishers are fighting back against AI with a new web protocol - is it too late?

The idea behind RSL (Really Simple Licensing) is brutally simple. Instead of the old robots.txt file, which only said "yes, you can crawl me" or "no, you can't" and which AI companies often ignore, publishers can now add something new: machine-readable licensing terms. Want attribution? You can demand it. Want payment every time an AI crawler ingests your work, or even every time it spits out an answer powered by your article?

Media industry

Television

from Variety

5 months ago · Lionsgate Hires Apple TV+'s Justin Manfredi as Head of TV Marketing

Justin Manfredi joins Lionsgate as executive vice president of worldwide television marketing, succeeding Suzy Feldman and overseeing series marketing, licensing, FAST channels, and digital distribution.
