#ai-security

#cybersecurity #generative-ai #agentic-ai #data-protection #prompt-injection #acquisition #vulnerabilities

from Computerworld

6 days ago

Kandji becomes Iru, opens MDM for Windows and Android

Apple device management and security company Kandji has changed its name to Iru, reflecting a new approach to what it does, and is opening its offering up to Windows and Android. This means enterprises shifting to Apple technology can now manage all their legacy equipment from the same console and benefit from Iru's AI-powered unified IT and security platform, introduced on Wednesday.

Apple

Artificial intelligence

from Telecompetitor

6 days ago

56% of Telecommunications Executives Use AI Agents: Report

56% of telecommunications executives use agentic AI. Adoption spans security, support, customer service, product design, marketing, productivity, software, and network automation, and is delivering measurable ROI.

from The Hacker News

1 week ago

Securing AI to Benefit from AI

Artificial intelligence (AI) holds tremendous promise for improving cyber defense and making the lives of security practitioners easier. It can help teams cut through alert fatigue, spot patterns faster, and bring a level of scale that human analysts alone can't match. But realizing that potential depends on securing the systems that make it possible. Every organization experimenting with AI in security operations is, knowingly or not, expanding its attack surface.

Information security

from Nextgov.com

2 weeks ago

Bridging the gap: Unlock the power of AI for government agencies through cross-domain solutions

Government data is highly segmented by design, often separated by security classification levels to protect sensitive data and operations. While this segmentation is essential for national security, it also creates data-sharing obstacles. Cross-Domain Solutions (CDS) can help address challenges such as safely training AI models on untrusted data, sharing classified AI capabilities with partners, and connecting users or systems to AI tools across classification boundaries.

Information security

from ZDNET

3 weeks ago

Google will pay you up to $30,000 in rewards to find bugs in its AI products

On Monday, Google security engineering managers Jason Parsons and Zak Bennett said in a blog post that the new program, an extension of the tech giant's existing Abuse Vulnerability Reward Program (VRP), will incentivize researchers and bug bounty hunters to focus on "high-impact abuse issues and security vulnerabilities" in Google products and services.

Artificial intelligence

Information security

from TechCrunch

1 month ago

Wiz chief technologist Ami Luttwak on how AI is transforming cyberattacks

AI adoption and vibe coding are expanding attack surfaces. Because both developers and attackers now use AI tools, the result is insecure implementations, prompt-driven exploits, and supply-chain risks.

Information security

from 24/7 Wall St.

1 month ago

CrowdStrike (NASDAQ: CRWD) Stock Price Prediction and Forecast 2025-2030 (Sept 2025)

CrowdStrike posted strong Q2 results, targets $10B by fiscal 2031 and $20B by 2036, and is expanding AI security and product offerings including a planned Pangea acquisition.

Artificial intelligence

from Al Bawaba

1 month ago

Lenovo Finds 65% of IT Leaders Admit Their Defenses Can't Withstand AI Cybercrime

Most IT leaders report that their defenses are outdated against AI-driven cybercrime, making AI-driven, adaptive security necessary to protect people, assets, and data.

from Techzine Global

1 month ago

Check Point acquires Lakera for comprehensive AI security

More and more organizations are integrating large language models, generative AI, and autonomous agents into their business processes. While this accelerates innovation, it also creates new security challenges. In a world where data increasingly functions as "executable code," data breaches, model manipulation, and undesirable effects of autonomous decision-making are becoming ever greater threats. Check Point already offers GenAI Protect, SaaS and API security, data loss prevention, and machine learning-driven security. Adding Lakera's technology creates a more complete AI security stack.

Artificial intelligence

Information security

from InfoWorld

2 months ago

8 vendors bringing AI to devsecops and application security

AI is becoming foundational to software security, enabling automated vulnerability remediation, real-time secure coding, and supply-chain hardening while introducing governance and risk challenges.

from Securitymagazine

2 months ago

Report Reveals Gap Between AI Use and AI Security In Embedded Software

The State of Embedded Software Quality and Safety 2025 report from Black Duck reveals a disconnect between how organizations use AI and how well they secure it. The embedded software landscape is being transformed, largely by AI: 89.3% of organizations already use AI coding assistants and 96.1% integrate open source AI models into their products. However, 21.1% of organizations still lack confidence in their ability to prevent AI from introducing vulnerabilities.

Software development

from ChannelPro

2 months ago

KnowBe4 names Joel Kemmerer as new CIO

Human risk management (HRM) specialist KnowBe4 has announced the appointment of Joel Kemmerer as its new chief information officer (CIO). A seasoned IT executive, Kemmerer arrives with more than 30 years' experience from leadership roles across the industry, bringing expertise in digital transformation, integrating acquisitions, and streamlining business operations. As KnowBe4's new CIO, he will play a key role in leading digital transformation initiatives as the vendor looks to continue its global growth journey.

Information security

from ComputerWeekly.com

2 months ago

Google spins up agentic SOC to speed up incident management

Google Cloud is enhancing security with AI by creating a new integrated security operations center (SOC) that automates workflows for alert triage, investigation, and response.

Artificial intelligence

fromThe Hacker News

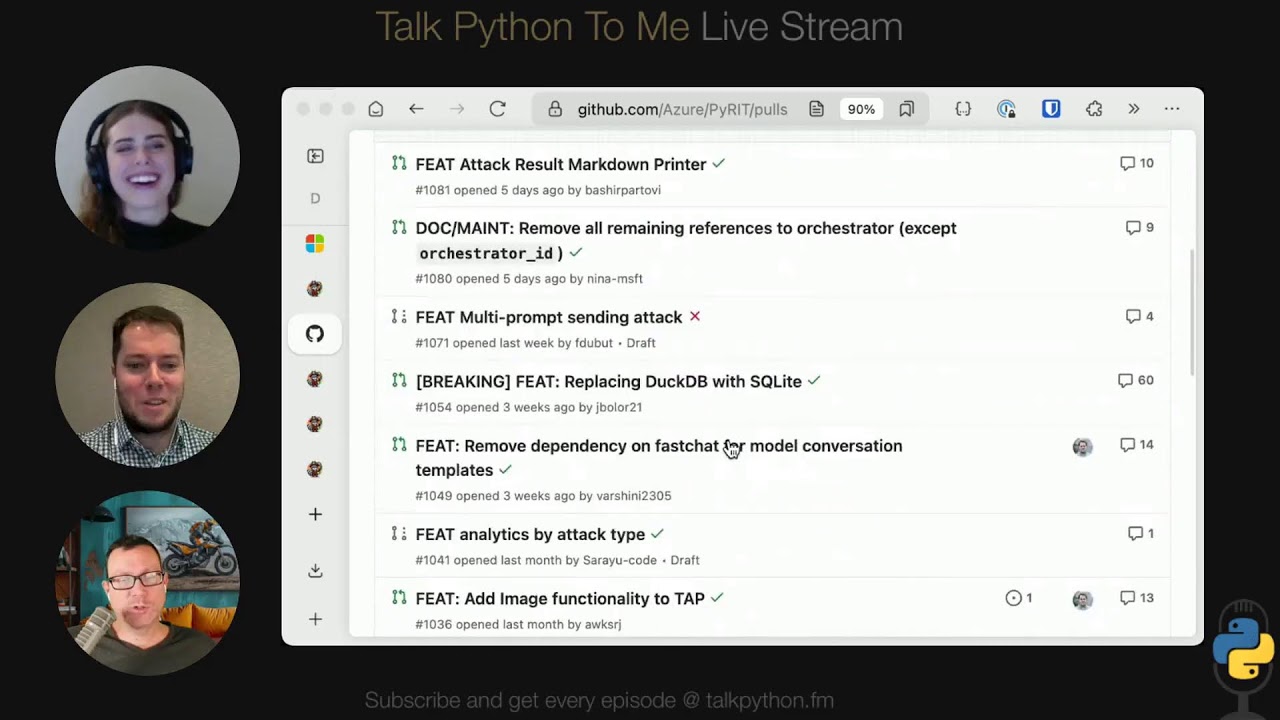

3 months agoGoogle AI "Big Sleep" Stops Exploitation of Critical SQLite Vulnerability Before Hackers Act

An attacker who can inject arbitrary SQL statements into an application might be able to cause an integer overflow, resulting in a read off the end of an array.

Artificial intelligence

from TechCrunch

3 months ago

Exclusive: Meta fixes bug that could leak users' AI prompts and generated content

Meta has fixed a security vulnerability that allowed users to access other users' private prompts and AI-generated responses, raising major concerns about data authorization.

Privacy professionals

from Securitymagazine

3 months ago

Phishing Scams Can Deceive Large Language Models

If AI suggests unregistered or inactive domains, threat actors can register those domains and set up phishing sites. As long as users trust AI-provided links, attackers gain a powerful vector to harvest credentials or distribute malware at scale.

Privacy professionals

from TechCrunch

3 months ago

OpenAI tightens the screws on security to keep away prying eyes

OpenAI is implementing enhanced security measures to safeguard its intellectual property from corporate espionage, largely prompted by the release of a competing model by Chinese startup DeepSeek.

Information security

from InfoQ

4 months ago

OWASP Launches AI Testing Guide to Address Security, Bias, and Risk in AI Systems

OWASP's AITG is a true game-changer for AI security. As CISOs, we've wrestled with AI's non-deterministic nature and silent data drift. This guide offers a structured path to secure, auditable AI, from prompt injection to continuous monitoring.

Artificial intelligence