#ai-hallucinations

from Fast Company

1 week ago · These 4 advanced features unlock Gemini's true power

If you want to move past the beginner phase and actually make Gemini work for you, here are four tricks that might not be immediately obvious but are surprisingly handy.

Stop copy-pasting your own emails. If you're trying to summarize a long email thread or find a specific document to pull data from, your first instinct is probably to open a new tab, find the email, copy the text, go back to Gemini, paste it in, and then ask your question.

Gadgets

Artificial intelligence

from Neil Patel

2 weeks ago · AI Hallucination and Accuracy: A Data-Backed Study - Neil Patel

Marketers routinely encounter subtle AI inaccuracies (fabrications, outdated facts, omissions) that require substantial fact-checking and can lead to public errors, even with high-performing models.

from Slate Magazine

3 weeks ago · A.I. Was Supposed to "Revolutionize" Work. In Many Offices, It's Only Creating Chaos.

Although we've been told that A.I. is poised to "revolutionize" work, at the moment it seems to be doing something else entirely: spreading chaos. All throughout American offices, A.I. platforms like ChatGPT are delivering answers that sound right even when they aren't, transcription tools that turn meetings into works of fiction, and documents that look polished on the surface but are riddled with factual errors and missing nuance.

Intellectual property law

from Business Matters

3 weeks ago · Legal experts warn UK firms of rising AI risks in 2026 as regulation tightens

Businesses must tighten governance of AI use to avoid escalating legal, financial and reputational risks arising from copyright infringement, data protection breaches, and misleading AI outputs.

Artificial intelligence

from Above the Law

1 month ago · Warning Party To Stop Citing Fake AI Cases Is Not, In Fact, Bias - Above the Law

AI-generated hallucinations are producing fabricated case law in court filings, disproportionately affecting less tech-savvy users and pro se litigants and posing growing legal risks.

US politics

from Futurism

1 month ago · National Weather Service Uses AI to Generate Forecasts, Accidentally Hallucinates Town With Dirty Joke Name

AI-generated weather graphics with hallucinated place names exposed staffing shortages and eroded public trust at the National Weather Service following major layoffs linked to DOGE.

Artificial intelligence

from Above the Law

1 month ago · Police Wonder If AI Bodycam Reports Are Accurate After Model Transforms Officer Into A Frog - Above the Law

AI-generated police reports can hallucinate fantastical details from audio of media playing in the background, producing inaccurate law enforcement documentation and misleading official records.

from The IP Law Blog

1 month ago · The Briefing - New York Times v. Perplexity AI: Copyright, Hallucinations, and Trademark Risk

In this episode of The Briefing, Weintraub Tobin partners Scott Hervey and Matt Sugarman break down The New York Times v. Perplexity AI, a lawsuit that goes beyond copyright and into largely untested trademark territory. They discuss the Times' allegations that Perplexity copied its journalism at both the input and output stages and, more significantly, that the AI attributed fabricated or inaccurate content to the Times using its trademarks.

Intellectual property law

from TechCrunch

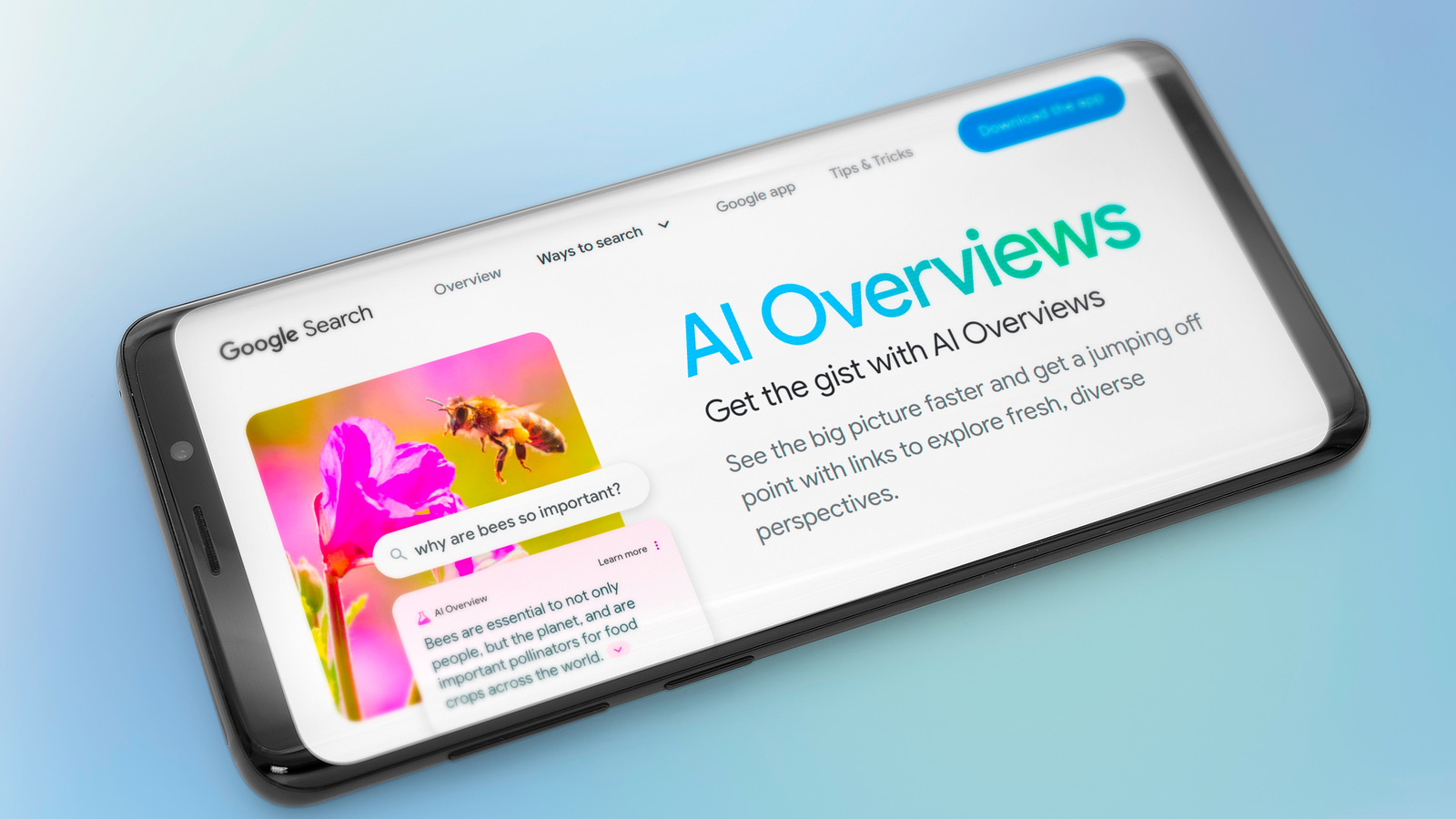

2 months ago · Google launched its deepest AI research agent yet - on the same day OpenAI dropped GPT-5.2 | TechCrunch

…through Google's new Interactions API, which is designed to give devs more control in the coming agentic AI era. The new Gemini Deep Research tool is an agent equipped to synthesize mountains of information and handle a large context dump in the prompt. Google says it's used by customers for tasks ranging from due diligence to drug toxicity safety research. Google also says it will…

Artificial intelligence

Law

from www.theguardian.com

2 months ago · California prosecutors' office used AI to file inaccurate motion in criminal case

Prosecutors used AI-generated content in filings that produced inaccurate legal citations and hallucinations, prompting withdrawn motions and appeals asserting due-process and ethical violations.

from IT Pro

3 months ago · 'Slopsquatting' is a new risk for vibe coding developers - but it can be solved by focusing on the fundamentals

Slopsquatting is an attack method in which hackers exploit common AI hallucinations to trick engineers into mistakenly installing malicious packages. In short, hackers track nonexistent packages hallucinated by AI coding tools and then publish malicious packages under those names on public package repositories. The seemingly legitimate packages are then installed by victims who trust their AI code suggestions.

Information security

from Futurism

3 months ago · Kim Kardashian Says She Screamed at ChatGPT After It "Made" Her Fail Law School Tests

"And then I'll get mad and yell at it and be like, 'You made me fail. Why did you do this?' And it will talk back to me," she said. "I will talk to it and say, 'Hey, you're going to make me fail. How does it make you feel?... I need to really know these answers, and I'm coming to you.' And it'll say back to me, 'This is just teaching you to trust your own instincts. You knew the answer all along.'"

Artificial intelligence

from The Register

3 months ago · Defamation flap sees Google yank Gemma from AI Studio

While Google didn't explicitly say why it pulled Gemma from AI Studio, the move came after a lawsuit from conservative social media personality Robby Starbuck, who accused Google AI systems of falsely calling him a child rapist and sex criminal. Starbuck's lawsuit focused on hallucinations (i.e., fabricated facts and information) from Bard, now known as Gemini, back in 2023, as well as from Gemma in incidents the lawsuit says occurred in August.

US politics

from The Verge

3 months ago · LexisNexis CEO: The AI law era is here

Today, I'm talking with Sean Fitzpatrick, the CEO of LexisNexis, one of the most important companies in the entire legal system. For years, including when I was in law school, LexisNexis was basically the library. It's where you went to look up case law, do legal research, and find the laws and precedents you would need to be an effective lawyer for your clients.

Law

from www.independent.co.uk

3 months ago · Mistake-filled legal briefs show the limits of relying on AI tools at work

Artificial intelligence

from CNET

3 months ago · How to Use AI Chatbots and What to Know About These Artificial Intelligence Tools

Before artificial intelligence had its big breakout, chatbots were those weird messaging tools that sat in the bottom corner of websites, rarely solving your problems and likely causing you more stress by blocking you from talking to a real person. But now, AI chatbots have created a whole new category: It's search, but with conversation. You can use an AI chatbot as a thought partner, a research aid or a Google alternative for anything you want to know.

Artificial intelligence

from Above the Law

3 months ago · Judges Admit The Obvious, Concede AI Used For Hallucinated Opinions - Above the Law

The judges had previously branded their wrong and subsequently withdrawn opinions as clerical errors. That lack of transparency undermined the judges' credibility, but both seem to have used the "clerical" excuse in a good-faith effort to avoid throwing interns under the bus. According to Judge Neals, a law school intern performed legal research with ChatGPT, while Judge Wingate writes that a law clerk used Perplexity.

Law

from Ars Technica

3 months ago · When sycophancy and bias meet medicine

They hoped that the Sufi philosopher, famed for his acerbic wisdom, could mediate a dispute that had driven a wedge between them. Nasreddin listened patiently to the first villager's version of the story and, upon its conclusion, exclaimed, "You are absolutely right!" The second villager then presented his case. After hearing him out, Nasreddin again responded, "You are absolutely right!"

Artificial intelligence

from The Verge

3 months ago · Anti-diversity activist Robby Starbuck is suing Google now

Robby Starbuck is suing Google, claiming that its AI search tools falsely linked him to sexual assault allegations and white nationalist Richard Spencer. This is the second case that Starbuck, known for his online campaigns against corporate diversity efforts, has brought against a major tech company over its AI products. In April, Starbuck sued Meta, claiming that its AI falsely insisted that he participated in the January 6th attack on the Capitol and that he had been arrested for a misdemeanor.

Artificial intelligence

from Futurism

4 months ago · Zuckerberg's AI Glasses Guy Is Named Rocco Basilico

In the darker corners of the tech industry, an untold number of professionals have come to believe in a controversial theory known as Roko's basilisk, which holds that a future AI superintelligence would torture any human who didn't help it come into existence. Now, in a twist that should please any writer, the guy who's spearheading Meta's AI-powered smart glasses is named Rocco Basilico, which has delighted and freaked out some online observers.

Artificial intelligence

Law

from Fortune

4 months ago · LexisNexis exec says it's 'a matter of time' before attorneys lose their licenses over using open-source AI pilots in court | Fortune

AI language models have produced fabricated legal citations and cases, leading to sanctions, increased scrutiny, and potential steep penalties for attorneys.

from InsideHook

4 months ago · Report: AI References to Fake Books Are Frustrating Librarians

Imagine an avid reader who one day flips through a summer book preview in their local paper. Among the books listed there is a novel by one of this reader's favorite writers, Isabel Allende. Intrigued, this reader heads to their local library to see if they have any copies of the novel, called Tidewater Dreams, in stock. Here's the problem: Tidewater Dreams doesn't actually exist; instead, it was part of an AI-generated article that included several nonexistent books by acclaimed…

Books

from The Register

5 months ago · OpenAI says models trained to make up answers

The admission came in a paper published in early September, titled "Why Language Models Hallucinate," and penned by three OpenAI researchers and Santosh Vempala, a distinguished professor of computer science at Georgia Institute of Technology. It concludes that "the majority of mainstream evaluations reward hallucinatory behavior." Language models are primarily evaluated using exams that penalize uncertainty. The fundamental problem is that AI models are trained and evaluated in ways that reward guessing over admitting uncertainty.

Artificial intelligence

from Above the Law

6 months ago · Judge Trolls Lawyer Over Flowery Excuses For AI Hallucinations - Above the Law

Each citation, each argument, each procedural decision is a mark upon the clay, an indelible impression. [I]n the ancient libraries of Ashurbanipal, scribes carried their stylus as both tool and sacred trust, understanding that every mark upon clay would endure long beyond their mortal span.

Law

from PC Gamer

7 months ago · X's latest terrible idea allows AI chatbots to propose community notes; you'll likely start seeing them in your feed later this month

The pilot scheme allows AI chatbots to generate community notes to accelerate the speed and scale of Community Notes on X.

Social media marketing
