#benchmarks

#code-generation #enterprise-ai #gemini-31-pro #multimodal-ai #gpt-52 #pricing #generative-ai #context-window

from The Register

4 days ago: Anthropic's latest Sonnet is better at using computers

The tweaks to Sonnet 4.6 have taken it past the pricier Opus 4.6 in two of 13 benchmark categories: agentic financial analysis (Finance Agent v1.1, 63.3 percent vs. 60.1 percent) and office tasks (GDPVal-AA Elo, 1633 vs. 1606). Opus 4.6 wins in six of the 13 categories, in tests that show rivals Gemini 3 Pro and GPT-5.2 each leading in two of 13 categories. But benchmark tests should not be taken too seriously.

Artificial intelligence

from TechCrunch

4 days ago: Anthropic releases Sonnet 4.6 | TechCrunch

Anthropic has released a new version of its mid-size Sonnet model, keeping pace with the company's four-month update cycle. In a post announcing the new model, Anthropic emphasized improvements in coding, instruction-following, and computer use. Sonnet 4.6 will be the default model for Free and Pro plan users. The beta release of Sonnet 4.6 will include a context window of 1 million tokens, twice the size of the largest window previously available for Sonnet.

Artificial intelligence

from TechCrunch

3 weeks ago: Tiny startup Arcee AI built a 400B open source LLM from scratch to best Meta's Llama | TechCrunch

But tiny 30-person startup Arcee AI disagrees. The company just released a truly and permanently open (Apache license) general-purpose foundation model called Trinity, and Arcee claims that at 400B parameters, it is among the largest open-source foundation models ever trained and released by a U.S. company. Arcee says Trinity compares to Meta's Llama 4 Maverick 400B and Z.ai GLM-4.5, a high-performing open-source model from China's Tsinghua University, according to benchmark tests conducted using base models (very little post-training).

Artificial intelligence

from Techzine Global

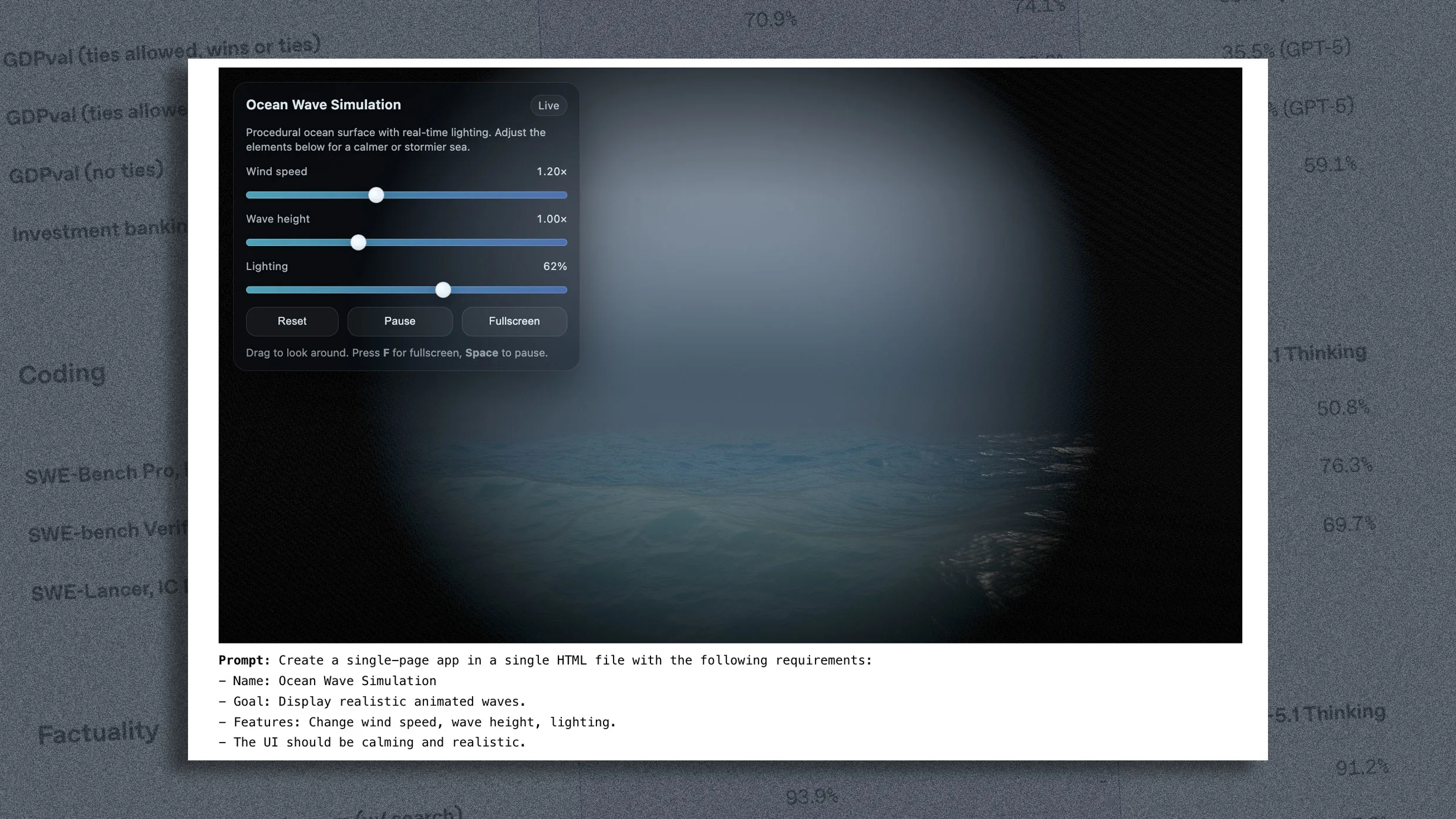

2 months ago: Google enhances Gemini Deep Research with Interactions API

Google has released a new version of Gemini Deep Research. This is an agent designed to automate complex research tasks. The agent runs on Gemini 3 Pro. The model can process handwriting, graphs, and mathematical notation. It incorporates this visual information directly into reports and search queries. As a result, the system can not only search textual sources, but also retrieve data that was previously difficult to automate, according to SiliconANGLE.

Artificial intelligence

from ZDNET

2 months ago: Does the new Flux.2 beat Nano Banana Pro? You can try it for yourself - for free

Specific improvements to the model include support for up to 10 reference images, meaning you can incorporate a lot more elements from different pictures in your final product; improved photorealism and detail; more accurate text rendering, a task image-generating models frequently struggle with; better prompt following; and a better understanding of real-world knowledge, according to Black Forest Labs.

Artificial intelligence

from Ars Technica

3 months ago: OpenAI walks a tricky tightrope with GPT-5.1's eight new personalities

On Wednesday, OpenAI released GPT-5.1 Instant and GPT-5.1 Thinking, two updated versions of its flagship AI models now available in ChatGPT. The company is wrapping the models in the language of anthropomorphism, claiming that they're warmer, more conversational, and better at following instructions. The release follows complaints earlier this year that its previous models were excessively cheerful and sycophantic, along with an opposing controversy among users over how OpenAI modified the default GPT-5 output style after several suicide lawsuits.

Artificial intelligence

from Futurism

3 months ago: Researchers "Embodied" an LLM Into a Robot Vacuum and It Suffered an Existential Crisis Thinking About Its Role in the World

The "Butter-Bench" test, as detailed in a yet-to-be-peer-reviewed paper, is a "benchmark that evaluates practical intelligence in embodied LLM." In the test, the robot had to navigate to an office kitchen, wait for butter to be placed on a tray attached to its back, confirm the pickup, deliver it to a marked location, and finally return to its charging dock. The results of the Butter-Bench experiment, the researchers conceded, were dubious.

Artificial intelligence

from Buffer: All-you-need social media toolkit for small businesses

5 months ago: What Is a Good Facebook Engagement Rate? Data From 52 Million+ Posts

One of the most common questions creators and brands ask: "Is my engagement rate good?" The answer depends on your follower count. A 5% engagement rate looks very different for a neighborhood café with 500 fans than for a news publisher with half a million. That's why we analyzed 52 million Facebook posts across 213,000 accounts with over 6.9 billion engagements collectively, to see how engagement rates shift by follower tier.

Online marketing

Artificial intelligence

from InfoQ

4 months ago: Google DeepMind Launches Gemini 2.5 Computer Use Model to Power UI-Controlling AI Agents

Gemini 2.5 Computer Use enables AI agents to perceive and manipulate graphical user interfaces (clicking, typing, scrolling) via a looped screenshot-and-action API, and shows strong benchmark performance.

Artificial intelligence

from Fortune

4 months ago: Anthropic releases Claude 4.5, a model it says can build software and accomplish business tasks autonomously | Fortune

Claude Sonnet 4.5 runs autonomously for 30 hours and significantly improves coding, benchmark performance, and business-oriented task completion over prior models.

from WIRED

4 months ago: I Benchmarked Qualcomm's New Snapdragon X2 Elite Extreme. Here's What I Learned

It's important to note that this was all tested on the X2 Elite Extreme configuration, which comes with six additional CPU cores over the standard X2 Elite. There were no X2 Elite systems to test, so we don't know what those multi-core scores will be. I've been told that GPU performance will also scale up on the X2 Elite, but we don't yet know how much faster the X2 Elite Extreme is over its sibling.

Silicon Valley

from InfoQ

4 months ago: xAI Releases Grok 4 Fast with Lower Cost Reasoning Model

xAI has introduced Grok 4 Fast, a new reasoning model designed for efficiency and lower cost. The model reduces average thinking tokens by 40% compared with Grok 4, which brings an estimated 98% decrease in cost for equivalent benchmark performance. It maintains a 2-million token context window and a unified architecture that supports both reasoning and non-reasoning use cases. The model also integrates tool-use capabilities such as web browsing and X search.

Artificial intelligence

from Techzine Global

5 months ago: CrowdStrike and Meta launch open source AI benchmarks for SOC

CrowdStrike and Meta are jointly introducing CyberSOCEval, a new suite of open source benchmarks to evaluate the performance of AI systems in security operations. The collaboration aims to help organizations select more effective AI tools for their Security Operations Center (SOC) by defining what effective AI looks like for cyber defense. The suite is built on Meta's open source CyberSecEval framework and CrowdStrike's frontline threat intelligence.

Artificial intelligence

from Real Python

5 months ago: Episode #264: Large Language Models on the Edge of the Scaling Laws - The Real Python Podcast

LLM scaling is reaching diminishing returns; benchmarks are often flawed, and developer productivity gains from these models remain modest amid economic hiring shifts.

Social media marketing

from Buffer: All-you-need social media toolkit for small businesses

6 months ago: What Is A Good Instagram Engagement Rate? Data from 27 Million+ Instagram Posts

Engagement rates decline as follower count increases; benchmarks show expected engagement across follower tiers from under 1,000 to over 1,000,000.
