"OpenAI on Thursday released its answer to Google's impressive Gemini 3 Pro model- GPT-5.2 -and by the looks of some head-to-head benchmark test scores, it looks like a winner. The new model took the highest score on a number of benchmark tests covering coding, math, science, tool use, and vision. (Benchmarks should, of course, be combined with real-world use to tell the whole story. But still . . .)"

"GPT-5.2 topped Gemini 3 Pro on the SWE-Bench Pro benchmark (software engineering tasks) with a score of 55.6% (versus Gemini 3 Pro's 43.3%). It achieved an 86.2% on the ARC-AGI-1 abstract reasoning benchmark, compared to Gemini 3 Pro's 75% score. It scored a 92.4% on the GPQA Diamond benchmark (science questions), compared with Gemini 3 Pro's 91.9% score."

"The new model comes in three variants. GPT-5.2 Instant is good for seeking information and how-tos, skill-building and study, and career guidance. GPT-5.2 Thinking is good for harder professional tasks like spreadsheet formatting and slideshow creation. GPT-5.2 Pro, the company says, takes longer to generate answers but is its "smartest and most trustworthy" model for generating accurate answers in complex domains like programming."

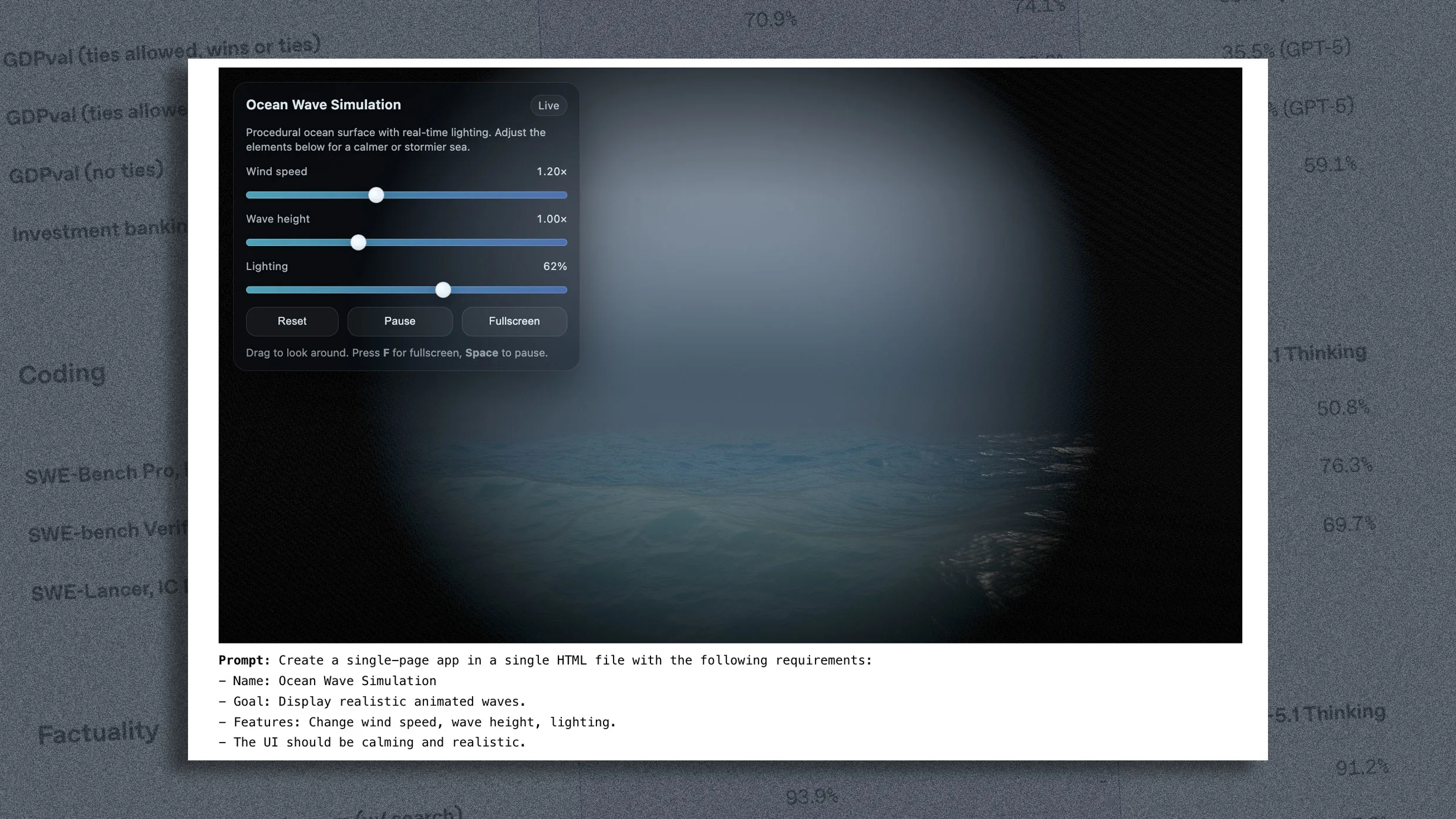

GPT-5.2 demonstrates top performance across a range of benchmark tests for coding, math, science, tool use, and vision. The model achieved expert-level performance on the GDPval benchmark covering 44 professional tasks including spreadsheet creation, document drafting, and presentation building. On SWE-Bench Pro it scored 55.6% versus Gemini 3 Pro's 43.3%; on ARC-AGI-1 it scored 86.2% versus 75%; and on GPQA Diamond it scored 92.4% versus 91.9%. GPT-5.2 is available in Instant, Thinking, and Pro variants aimed at different complexity levels and emphasizes improvements in reasoning, long-context understanding, agentic tool-calling, and vision.

Read at Fast Company
