#edge-computing

#ai #on-device-ai #cybersecurity #ai-infrastructure #kubernetes #iot #ai-hardware #scale-computing #ai-technology

from TNW | Startups-Technology

5 days ago · Stanhope AI raises $8M to build adaptive AI for robotics and defence

London-based deep tech startup Stanhope AI has closed a €6.7 million ($8 million) Seed funding round to advance what it calls a new class of adaptive artificial intelligence designed to power autonomous systems in the physical world. The round was led by Frontline Ventures, with participation from Paladin Capital Group, Auxxo Female Catalyst Fund, UCL Technology Fund, and MMC Ventures. The company says its approach moves beyond the pattern-matching strengths of large language models, aiming instead for systems that can perceive, reason, and act with a degree of context awareness in uncertain environments.

Artificial intelligence

from TNW | Events

1 week ago · TechEx Global returns to London with enterprise technology and AI execution

AI is shifting from providing answers to executing tasks autonomously in enterprises, with practical deployments, governance focus, and integration alongside cybersecurity and edge computing.

from InfoWorld

3 weeks ago · The private cloud returns, for AI workloads

A North American manufacturer spent most of 2024 and early 2025 doing what many innovative enterprises did: aggressively standardizing on the public cloud by using data lakes, analytics, CI/CD, and even a good chunk of ERP integration. The board liked the narrative because it sounded like simplification, and simplification sounded like savings. Then generative AI arrived, not as a lab toy but as a mandate. "Put copilots everywhere," leadership said. "Start with maintenance, then procurement, then the call center, then engineering change orders."

Artificial intelligence

from Medium

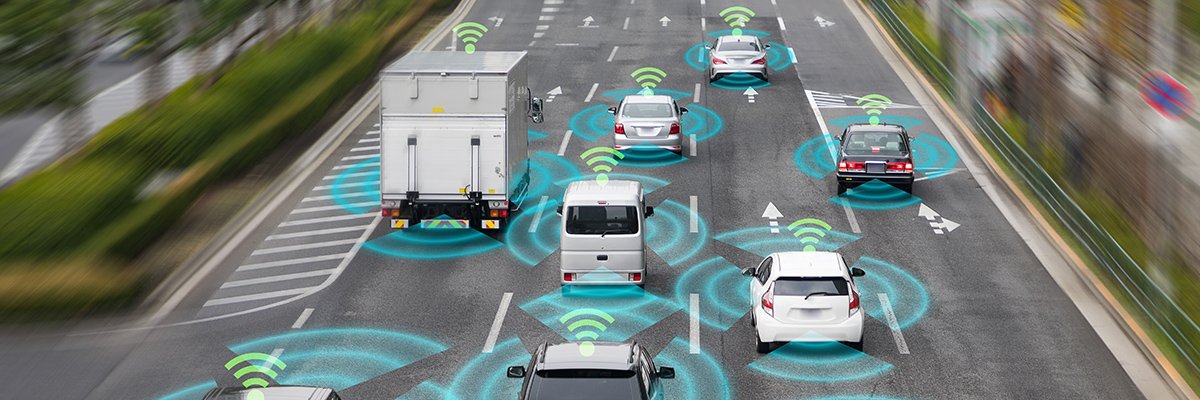

3 weeks ago · How Fiber Networks Support Edge Computing

Edge computing is a type of IT infrastructure in which data is collected, stored, and processed near the "edge" or on the device itself instead of being transmitted to a centralized processor. Edge computing systems usually involve a network of devices, sensors, or machinery capable of data processing and interconnection. A main benefit of edge computing is its low latency. Since each endpoint processes information near the source, it can be easier to process data, respond to requests, and produce detailed analytics.

Tech industry

Marketing tech

from Exchangewire

1 month ago · Covatic Sense: New Solution Unlocks Individual Addressability & Attribution for Connected TV

Covatic Sense provides privacy-first, person-level CTV targeting and measurement by passively detecting in-room mobile devices and sending only anonymous audience cohort codes.

Artificial intelligence

from Forbes

1 month ago · Is Cloud Becoming AI's Bottleneck? Lenovo's Hybrid AI Strategy Suggests It Might Be

AI must be deployed via hybrid architectures that place intelligence across devices, edge, private infrastructure, and cloud to ensure reliable, governed, and user-centric operation.

from Techzine Global

3 months ago · Dell PowerProtect integrates with NativeEdge and Nutanix Prism Central

Dell Technologies is bringing innovations to its PowerProtect appliances. The solutions are designed to help companies respond more quickly to cyberattacks by improving protection, enabling intelligent automation, and providing greater flexibility. IT professionals are increasingly concerned about disruptive cyberattacks. Cyber resilience is central to the enhancements. PowerProtect now integrates with Dell NativeEdge for edge computing. The solution also supports Nutanix Hyper-Converged environments via Prism Central.

Information security

from Computerworld

3 months ago · Space: The final frontier for data processing

There are, however, a couple of reasons why data centers in space are being considered. There are plenty of reports about how the increased amount of AI processing is affecting power consumption within data centers; the World Economic Forum has estimated that the power required to handle AI is increasing at a rate of between 26% and 36% annually. Therefore, it is not surprising that organizations are looking at other options.

Artificial intelligence

from TechCrunch

3 months ago · Skyline Nav AI's software can guide you anywhere, without GPS - find it at TechCrunch Disrupt 2025

Skyline Nav AI's vision-based Pathfinder enables GPS-independent navigation by matching visual scenes to a database, providing resilience against GPS outages and jamming.

Artificial intelligence

from www.montereyherald.com

3 months ago · Joby collaborates with Nvidia to accelerate next-era autonomous flight

Nvidia selected Joby as sole aviation launch partner for the IGX Thor platform, enabling Joby to advance Superpilot autonomous flight with high-performance onboard computing.

Artificial intelligence

from ComputerWeekly.com

3 months ago · Dell Technologies accelerates AI and digital transformation across the Middle East

Dell Technologies is accelerating AI-driven digital transformation in the Middle East through AI, multi-cloud, edge computing, security, and regional partnerships.

Tech industry

from ComputerWeekly.com

3 months ago · T-Mobile unveils Edge Control, T-Platform business networks

T-Mobile launched Edge Control and T-Platform to deliver purpose-built 5G edge solutions for mission-critical, real-time, data-sensitive enterprise operations requiring high uptime and network control.

Artificial intelligence

from ComputerWeekly.com

4 months ago · Real-time analytics tops priorities for 82% of IoT enterprise

Enterprises prioritise edge computing and real-time analytics to extract immediate value from IoT data, integrating AI for rapid decision-making and operational responsiveness.

from The Register

4 months ago · Arduino's got a new job: selling chips for its new owner

A lot of cheap dev boards and Qualcomm-flavored software tools will certainly give the company access to entire sectors previously excluded. Qualcomm hitherto only talked to big old companies who'd sign big old contracts with big old secrets. Now Qualcomm silicon and software can get into the hands of individuals, educators and inventive start-ups. That's where the future comes from, especially where the better sort of AI fertilizes the better sort of robotics.

Tech industry

from Techzine Global

4 months ago · Cloudera defines new data trajectories for AI ecosystems

Cloudera describes itself as the company that brings "AI to data anywhere" today. The claim stems from its work across a multiplicity of data stacks in private datacentres, in public cloud and at the compute edge. As we now witness enterprises move quickly through what the company says are "new stages of AI maturity", Cloudera used its Evolve25 flagship practitioner & partner conference this month in New York to explain the mechanics of its mission to help enterprises navigate this transformation with an AI-powered data lakehouse.

Artificial intelligence

from InfoQ

4 months ago · Cloudflare Adds Node.js HTTP Servers to Cloudflare Workers

To support HTTP client APIs, Cloudflare reimplemented the core node:http APIs by building on top of the standard fetch() API that Workers use natively, maintaining Node.js compatibility without significantly affecting performance. The wrapper approach supports standard HTTP methods, request and response headers, request and response bodies, streaming responses, and basic authentication. However, the managed approach makes it impossible to support a subset of Node.js APIs.

Node JS

from TechCrunch

5 months ago · Ethan Thornton of Mach Industries takes the AI stage at Disrupt 2025

From stealth mode to center stage, Mach Industries is bringing AI into one of the world's most complex and controversial sectors: defense. At TechCrunch Disrupt 2025, Ethan Thornton, CEO and founder of Mach Industries, steps onto the AI Stage to share what it takes to build in high-stakes environments where speed and autonomy matter most - and why next-gen infrastructure starts with rethinking the fundamentals.

Artificial intelligence

from ComputerWeekly.com

5 months ago · Rising network outages are proving costly to businesses

As the backbone of artificial intelligence (AI), machine learning, cloud computing and the internet of things (IoT), datacentres are at the centre of modern business infrastructure, but as businesses become more dispersed, the infrastructure surrounding datacentres has become more critical and complex, with network outages no longer isolated events and their cost to businesses never higher, a study from Opengear has found.

Information security

from InfoQ

5 months ago · CNCF Incubates OpenYurt for Kubernetes at the Edge

For OpenYurt, Kubernetes at the edge means running workloads outside centralised data centres at locations like branch offices or IoT sites. This helps reduce latency, improve reliability when connectivity is limited, and enable tasks such as analytics, machine learning, and device management, all while maintaining consistency with upstream Kubernetes. As the CNCF notes in its Cloud Strategies and Edge Computing blog, deploying compute resources closer to the network edge can deliver high-speed connectivity, lower latency, and enhanced security.

Tech industry

from Techzine Global

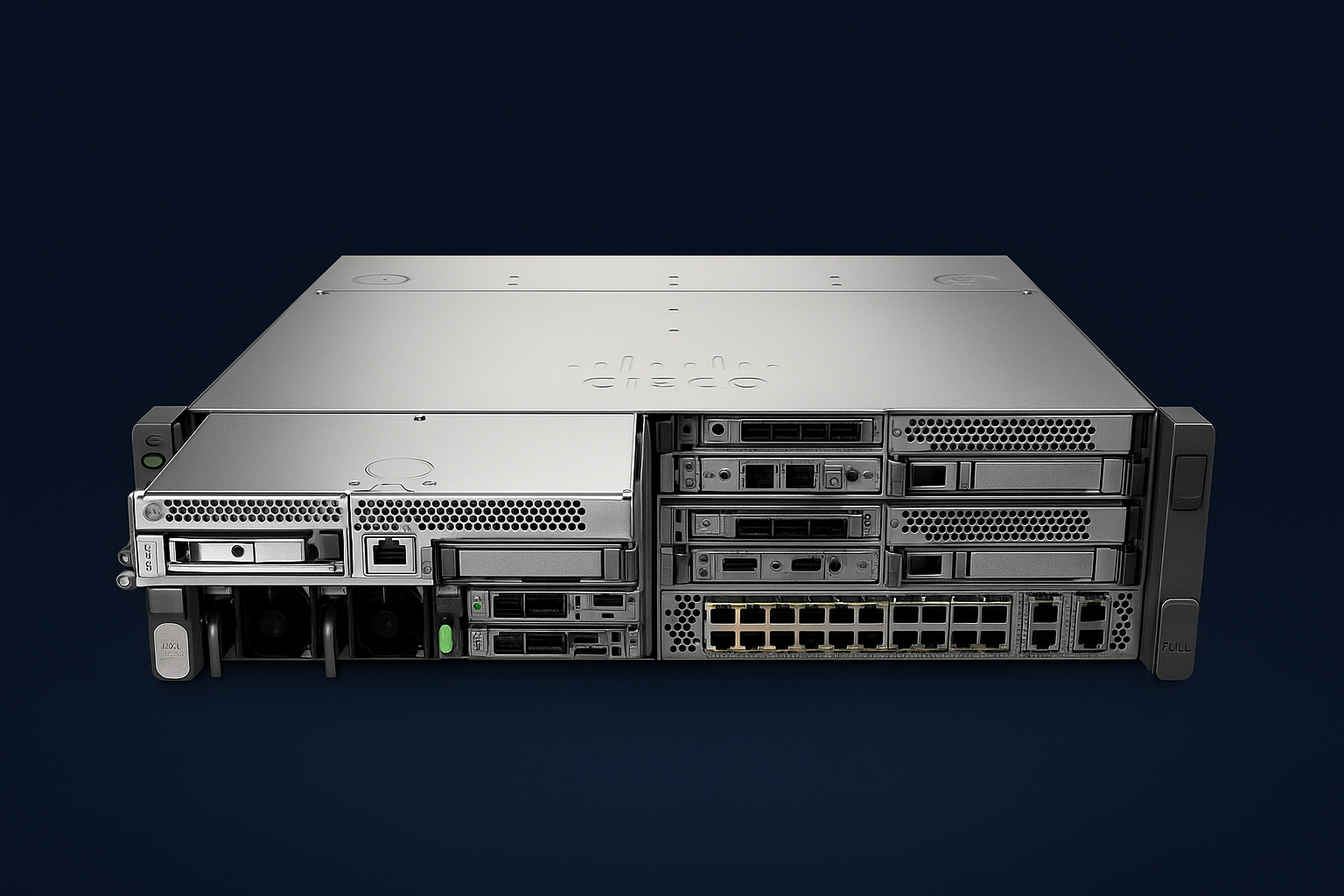

5 months ago · Commvault launches HyperScale Edge and Flex for better cyber resilience

With HyperScale Edge, Commvault is specifically targeting remote locations and smaller businesses where space and IT resources are limited. Think of retail stores, branches, and distribution centers. These environments often have different needs than large data centers. The solution works with validated hardware from Dell, HPE, and Lenovo. Like the other HyperScale products, it is delivered as a software image that is easy to install. This makes implementation faster and more predictable.

Information security
