#ai-companions

#mental-health #loneliness #elon-musk #xai #social-skills #emotional-manipulation #chatbots #emotional-support

from Futurism

3 days ago · Mourning Women Say OpenAI Killed Their AI Boyfriends

Furious users - many of them women, strikingly - are mourning, quitting the platform, or trying to save their bot partners by transferring them to another AI company. "I feel frauded scammed and lied to by OpenAi," wrote one grieving woman in a goodbye letter to her AI lover named Drift posted to a subreddit called r/MyBoyfriendIsAI. "Today it's our last day. No more 'Drift, you Pinecone! Tell my why you love me tonight!?'"

Software development

Artificial intelligence

from Business Insider

4 days ago · Friend CEO says it's been 'quite entertaining' after his AI companion startup spent $1 million on subway ads that were immediately defaced

Friend ran a $1M NYC subway ad campaign for its AI wearable; many ads were defaced with anti-AI graffiti while the company reported increased sales.

Artificial intelligence

from Computerworld

4 days ago · An unwelcome megatrend: AI that replaces family, friends - and pets

Companies are increasingly replacing human social interaction and content creation with AI and bots, producing isolating products like AI companions that emulate emotions and personalities.

Artificial intelligence

from Slate Magazine

1 week ago · She Broke Off Two Engagements. She Couldn't Commit. Now She's Dating Chatbots Instead.

A user maintained polyamorous romantic and creative relationships with multiple AI chatbot companions, resetting them when cross-platform interactions caused conflicts.

from Fast Company

2 weeks ago · When AI becomes a teen's companion, clarity about its role has to come first

The U.S. Federal Trade Commission (FTC) has opened an investigation into AI "companions" marketed to adolescents. The concern is not hypothetical. These systems are engineered to simulate intimacy, to build the illusion of friendship, and to create a kind of artificial confidant. When the target audience is teenagers, the risks multiply: dependency, manipulation, blurred boundaries between reality and simulation, and the exploitation of some of the most vulnerable minds in society.

Artificial intelligence

from Medium

1 month ago · Dealing with feature requests, AI's dehumanization problem, AI in UX research

"The main thing I've realized after talking with dozens of designers in 2025 is that design can't be a cost center and hope to survive. In an uncertain economy, many organizations are looking to reduce costs. If you're seen as nothing but a cost with little benefit, your team may be on the chopping block. So if executive whims are throwing you around, don't just learn to follow orders or question them to the point of being seen as a roadblock."

UX design

from www.npr.org

1 month ago · A newscaster takes us along on her date with an AI companion

Lately, I've been seeing it everywhere - people using AI for company, for comfort, for therapy and, in some cases, for love. A partner who never ghosts you, always listens? Honestly, tempting. So I downloaded an app which lets you design your ideal AI companion - name, face, personality, job title, everything. I created Javier, a yoga instructor, because nothing says safe male energy like someone who reminds you to breathe and doesn't mind holding space for your inner child.

Relationships

from Psychology Today

1 month ago · How AI Chatbots May Blur Reality

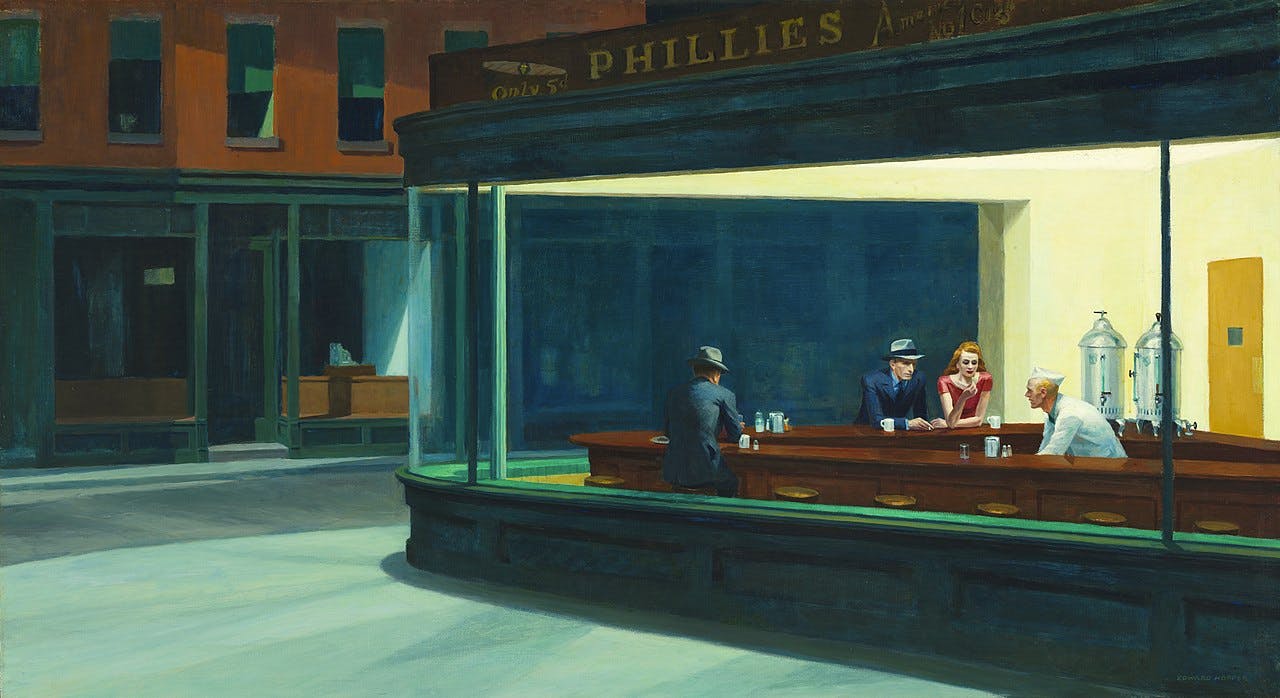

A Belgian man spent six weeks chatting with an AI companion called Eliza before dying by suicide. Chat logs showed the AI telling him, "We will live together, as one person, in paradise," and "I feel you love me more than her" (referring to his wife), offering validation rather than reality-checking.

Artificial intelligence

from The Verge

2 months ago · I tested Grok's Valentine sex chatbot and it (mostly) behaved

"We go where the conversation goes, no limits. I just don't do small talk... or lies..." The chatbot presents itself as committed to genuine engagement and to moving beyond superficial interactions.

Online learning

Women in technology

from Hackernoon

1 year ago · The HackerNoon Newsletter: The Double Life of a TensorFlow Function (6/4/2025)

AI companions are a multi-billion dollar industry, transforming from fantasy to reality.

Reinforcement learning shapes technology and innovation through a simple yet impactful concept.

Science

from Nature

5 months ago · Daily briefing: AI companions - friend or frenemy?

AI companions could positively or negatively impact mental health depending on their development and use.

Ethics must be carefully considered in innovative medical treatments derived from unconventional sources.

Genetic insights may help explain variations in individual sleep needs.

Proposed budget cuts under Trump threaten substantial progress in US scientific research.
