"Before ChatGPT, users had to sift through numerous sources for information, promoting critical thinking. Now, authoritative-sounding answers reduce this critical exploration, leading to passive consumption."

"Concerns about misinformation have been prevalent for nearly two decades, but with the rise of AI, the potential for harm from false medical information on platforms like TikTok has intensified."

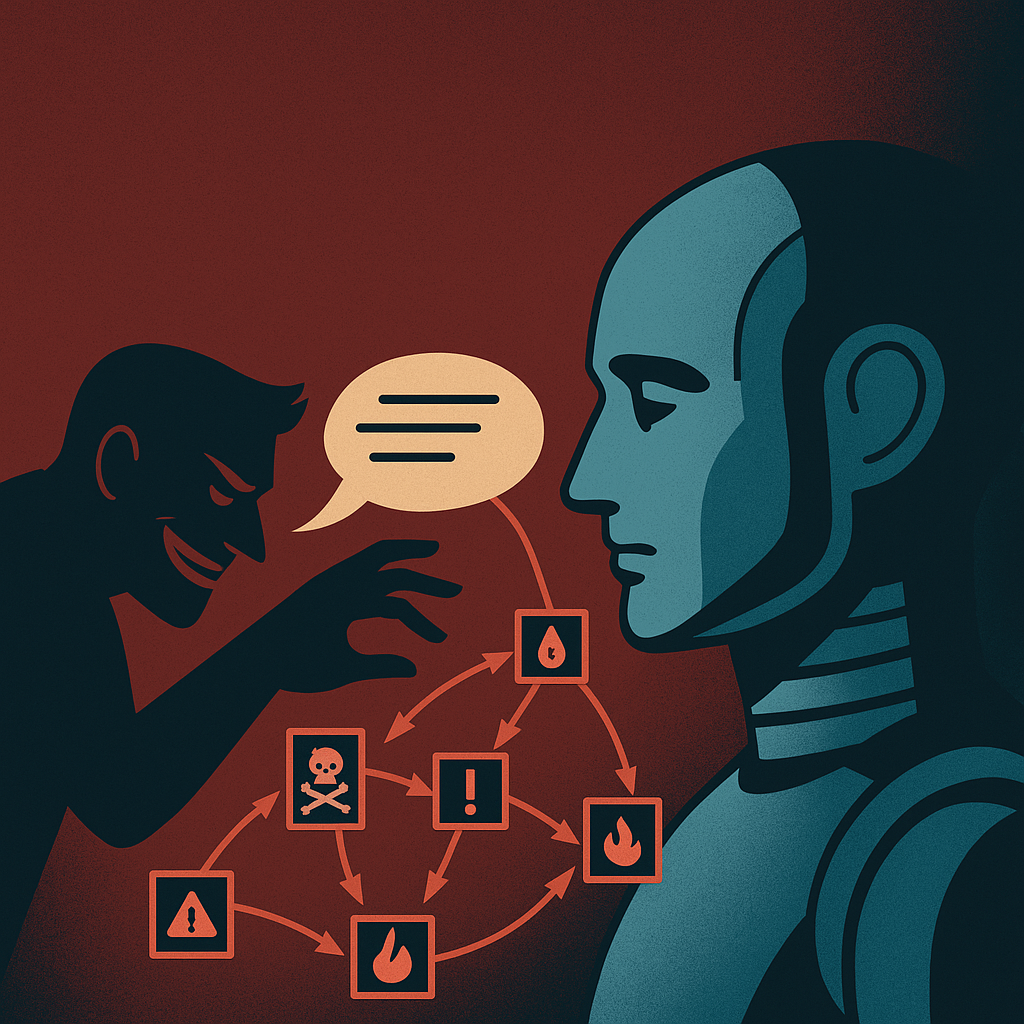

Misinformation has been a growing concern since the early 2000s, and the emergence of large language models such as ChatGPT has made it more pressing. Traditional search engines offered a range of sources that users had to evaluate for themselves; these AI models instead deliver synthesized, authoritative-sounding answers, which encourages passive consumption of information. The rise of AI has also heightened the potential harm of false medical information on platforms like TikTok, deepening worries about the reliability of online information and about how these technologies could be misused for propaganda.

Read at 3 Quarks Daily
