#ai-generated-code

from SecurityWeek

4 days ago: How to Eliminate the Technical Debt of Insecure AI-Assisted Software Development

This extends to the software development community, which is seeing a near-ubiquitous presence of AI-coding assistants as teams face pressures to generate more output in less time. While the huge spike in efficiencies greatly helps them, these teams too often fail to incorporate adequate safety controls and practices into AI deployments. The resulting risks leave their organizations exposed, and developers will struggle to backtrack in tracing and identifying where - and how - a security gap occurred.

Artificial intelligence

from TechCrunch

6 days ago: Former GitHub CEO raises record $60M dev tool seed round at $300M valuation | TechCrunch

Entire's tech has three components. One is a git-compatible database to unify the AI-produced code. Git is a distributed version control system popular with enterprises and used by open source sites like GitHub and GitLab. Another component is what it calls "a universal semantic reasoning layer" intended to allow multiple AI agents to work together. The final piece is an AI-native user interface designed with agent-to-human collaboration in mind.

Startup companies

from InfoWorld

1 week ago: GitHub eyes restrictions on pull requests to rein in AI-based code deluge on maintainers

GitHub is exploring what already seems like a controversial idea that would allow maintainers of repositories or projects to delete pull requests (PRs) or turn off the ability to receive pull requests as a way to address an influx of low-quality, often AI-generated contributions that many open-source projects are struggling to manage.

Software development

Artificial intelligence

from Engadget

2 weeks ago: Moltbook, the AI social network, exposed human credentials due to vibe-coded security flaw

Moltbook exposed millions of API tokens, tens of thousands of emails and private messages because insecure, AI-directed code allowed unauthenticated edits and bot impersonation.

Artificial intelligence

from Fortune

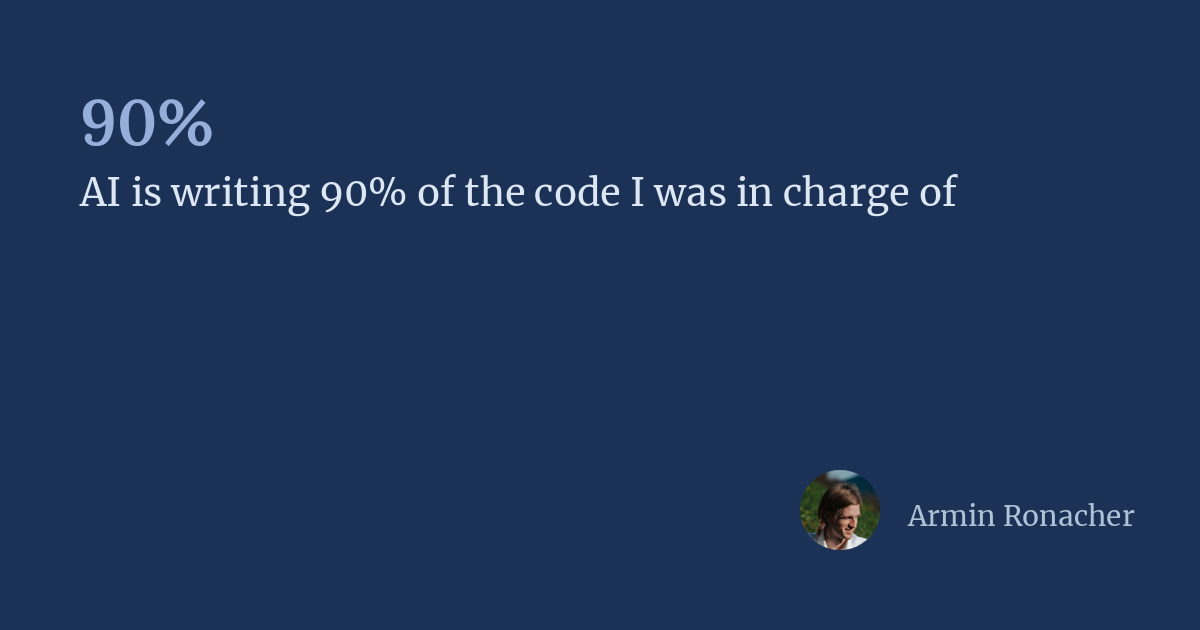

2 weeks ago: AI writes 100% of the code at Anthropic, OpenAI top engineers say - with big implications for the future of software development jobs | Fortune

AI models now generate nearly all production code at some AI labs, allowing engineers to focus on editing and oversight rather than manual coding.

from LogRocket Blog

3 weeks ago: Why AI coding tools shift the real bottleneck to review - LogRocket Blog

On December 19, 2025, Cursor acquired Graphite for more than $290 million. CEO Michael Truell framed the move simply: code review is taking up a growing share of developer time as the time spent writing code keeps shrinking. The message is clear. AI coding tools have largely solved generation speed. Now the industry is betting that review is the next constraint to break.

Software development

from LogRocket Blog

2 months ago: Fixing AI-generated code: 5 ways to debug, test, and ship safely - LogRocket Blog

AI tools often produce code that compiles and runs, but contains subtle bugs, security vulnerabilities, or inefficient implementations that may not surface until production. AI systems also lack a true understanding of business logic. They often create solutions that seem to work - but hide issues that aren't found until later. As developers are building solutions, the AI will most frequently cover common solutions but fail on edge cases.

Software development

from TechCrunch

2 months ago: Security startup Guardio nabs $80M from ION Crossover Partners | TechCrunch

Cybersecurity company Guardio is taking aim at a fresh market born amid this flux: finding malicious code written using AI tools. The company says it has found that with AI tools, malicious actors now find it easier than ever to build scam and phishing sites as well as the infrastructure needed to run them. Now, Guardio is leveraging its experience building browser extensions and apps that scan for malicious and phishing sites.

Information security

from Fast Company

3 months ago: AI wrote the code. You got hacked. Now what?

This isn't hypothetical. In a survey of 450 security leaders, engineers, and developers across the U.S. and Europe, 1 in 5 organizations said they had already suffered a serious cybersecurity incident tied to AI-generated code, and more than two-thirds (69%) had uncovered flaws created by AI. Mistakes made by a machine, rather than by a human, are directly linked to breaches that are already causing real financial, reputational, or operational damage. Yet artificial intelligence isn't going away.

Artificial intelligence

from InfoWorld

4 months ago: The productivity paradox of AI-assisted coding

But many engineering teams are noticing a trend: even as individual developers produce code faster, overall project delivery timelines are not shortening. This isn't just a feeling. A recent METR study found that AI coding assistants increased experienced software developers' task completion time by 19%. "After completing the study, developers estimate that allowing AI reduced completion time by 20%," the report noted. "Surprisingly, we find that allowing AI actually increases completion time by 19% - AI tooling slowed developers down."

Software development

from TechCrunch

5 months ago: Vibe coding has turned senior devs into 'AI babysitters,' but they say it's worth it | TechCrunch

She called vibe coding a beautiful, endless cocktail napkin on which one can perpetually sketch ideas. But dealing with AI-generated code that one hopes to use in production can be "worse than babysitting," she said, as these AI models can mess up work in ways that are hard to predict. She had turned to AI coding in a need for speed with her startup, as is the promise of AI tools.

Software development

from The Register

5 months ago: GitHub Copilot on autopilot as community complaints persist

I've been for a while now filing issues in the GitHub Community feedback area when Copilot intrudes on my GitHub usage, I deeply resent that on top of Copilot seemingly training itself on my GitHub-posted code in violation of my licenses, GitHub wants me to look at (effectively) ads for this project I will never touch

Software development

from Business Insider

7 months ago: Robinhood CEO says the majority of the company's new code is written by AI, with 'close to 100%' adoption from engineers

Nearly 100% of engineers at Robinhood are using AI code editors, making it hard to distinguish between human-written and AI-generated code, according to CEO Vlad Tenev.

Artificial intelligence
