Artificial intelligence

from ZDNET

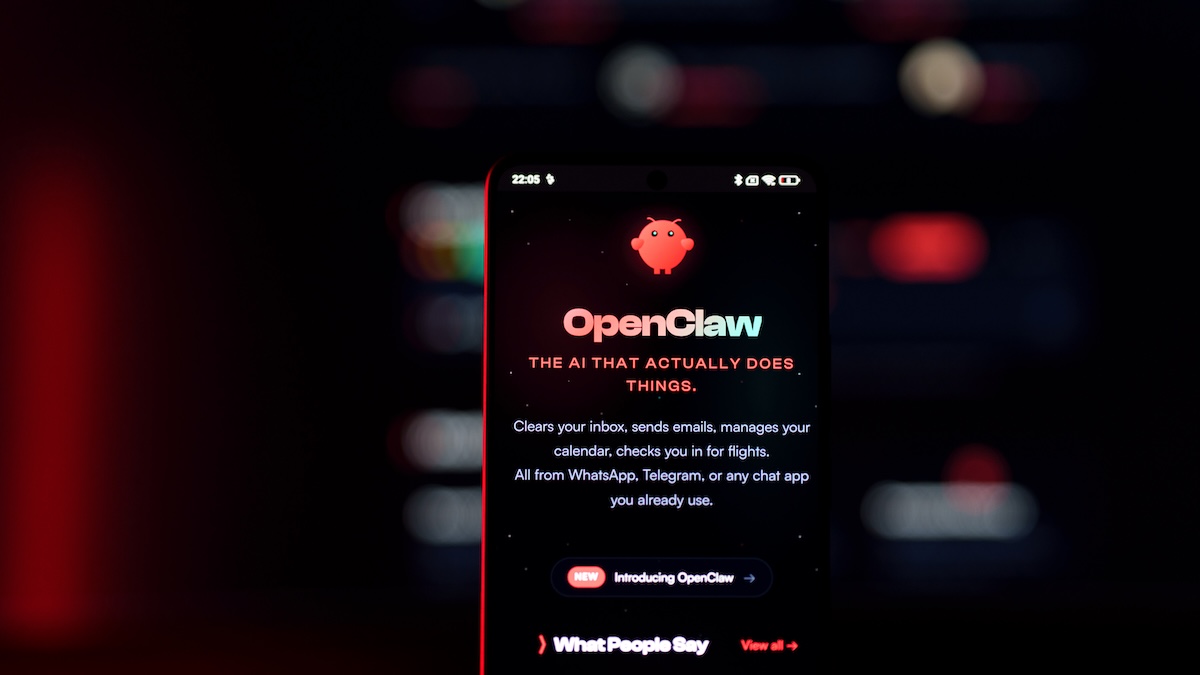

15 hours ago

AI agents are fast, loose and out of control, MIT study finds

Agentic AI systems currently exhibit significant security risks, limited transparency, inadequate disclosure, and inconsistent safety protocols, requiring stronger developer responsibility and oversight.