"We were also asked to provide additional information about birth date, weight and height, which was optional but recommended (we skipped this). After the questionnaire, we were presented with an advertising network's lengthy consent form similar to Maya's, for which we manually deselected our consent to the listed data-sharing activities and vendors for the purposes of personalised advertising and analytics."

"Using the Intelligent Assistant feature required us to create an account. After creating an account via email, we could see in the web traffic that when we launched the app, our email and password were requested by the API to authorise and identify our log-in."

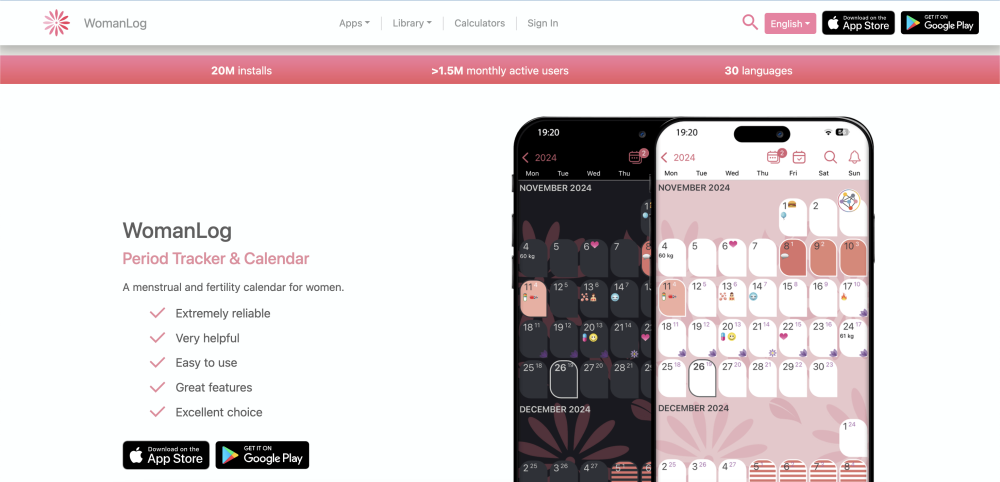

"In response to our findings, WomanLog stated that all communication between the app and their servers is HTTPS encrypted. Next, we tested the Intelligent Assistant feature, which is a paid-for period prediction and chatbot service powered by OpenAI."

The article examines the data-sharing practices of the WomanLog app, focusing on its consent flow and account log-in. During onboarding, the app asked for optional but recommended details such as birth date, weight and height, then presented an advertising network's lengthy consent form, in which the testers manually deselected consent to data sharing for personalised advertising and analytics. Using the Intelligent Assistant, a paid-for period prediction and chatbot service powered by OpenAI, required creating an account, and the testers saw in the web traffic that the email and password were requested by the API at launch to authorise the log-in. WomanLog responded that all communication between the app and its servers is HTTPS encrypted.

Read at privacyinternational.org

