"English is now an API. Our apps read untrusted text; they follow instructions hidden in plain sight, and sometimes they turn that text into action. If you connect a model to tools or let it read documents from the wild, you have created a brand new attack surface. In this episode, we will make that concrete. We will talk about the attacks teams are seeing in 2025, the defenses that actually work, and how to test those defenses the same way we test code."

"Tori Westerhoff leads operations for Microsoft's AI Red Team. Her group red teams high-risk generative AI across models, systems, and features company-wide. Scope spans classic security plus AI-specific harms such as trustworthiness, national security, dangerous capabilities (chemical/biological/radiological/nuclear), autonomy, and cyber. She brings a background in neuroscience, national security, and an MBA, and emphasizes interdisciplinary testing and people-centric adoption challenges. Roman Lutz is an engineer on the tooling side of Microsoft's AI Red Team."

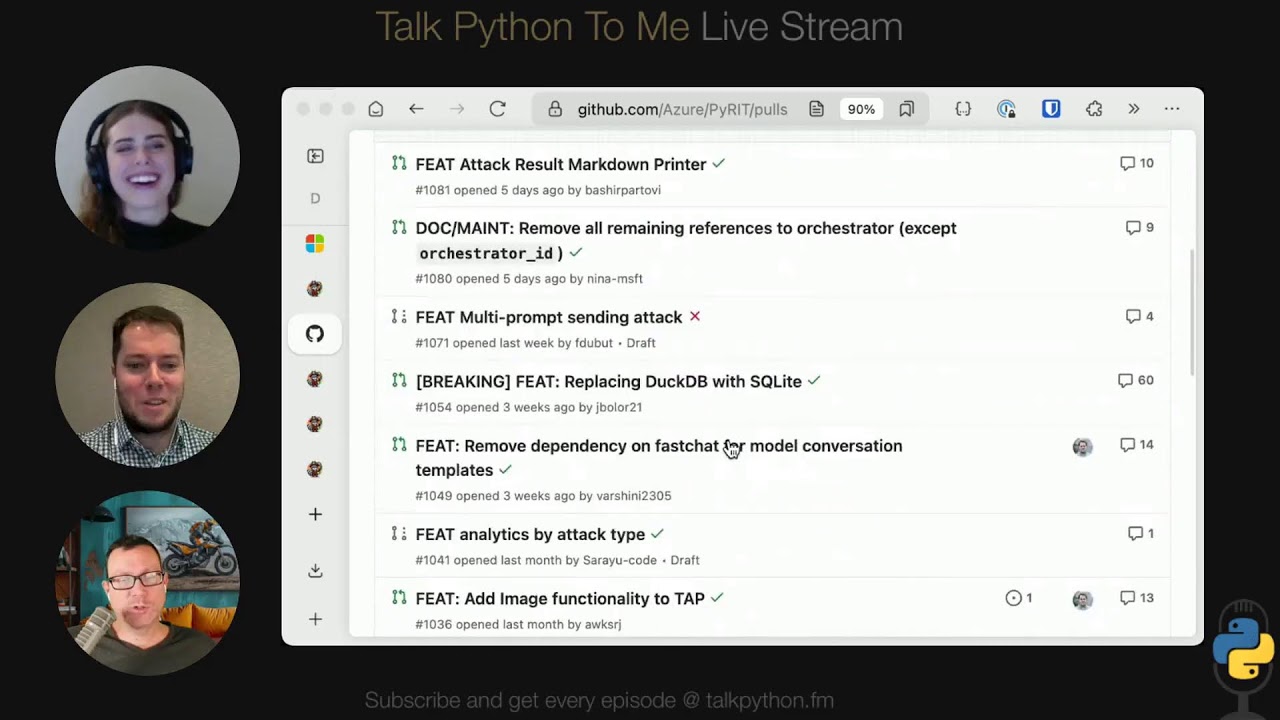

Natural language interfaces function as APIs, allowing applications to read and execute instructions embedded in untrusted text. Connecting models to external tools or documents creates a novel attack surface where hidden instructions can trigger actions. Contemporary threats include model exploitation, trustworthiness failures, dangerous capability enablement, autonomy misuse, and cyber risks. Effective defenses combine engineering controls, continuous red teaming, and automated testing that integrates with software development workflows. PyRIT is a Python framework for pressure-testing models and product integrations to reveal real-world vulnerabilities. Adopting red teaming as an everyday engineering practice enables shipping concrete mitigations within short release cycles.

Read at Talkpython

Unable to calculate read time

Collection

[

|

...

]