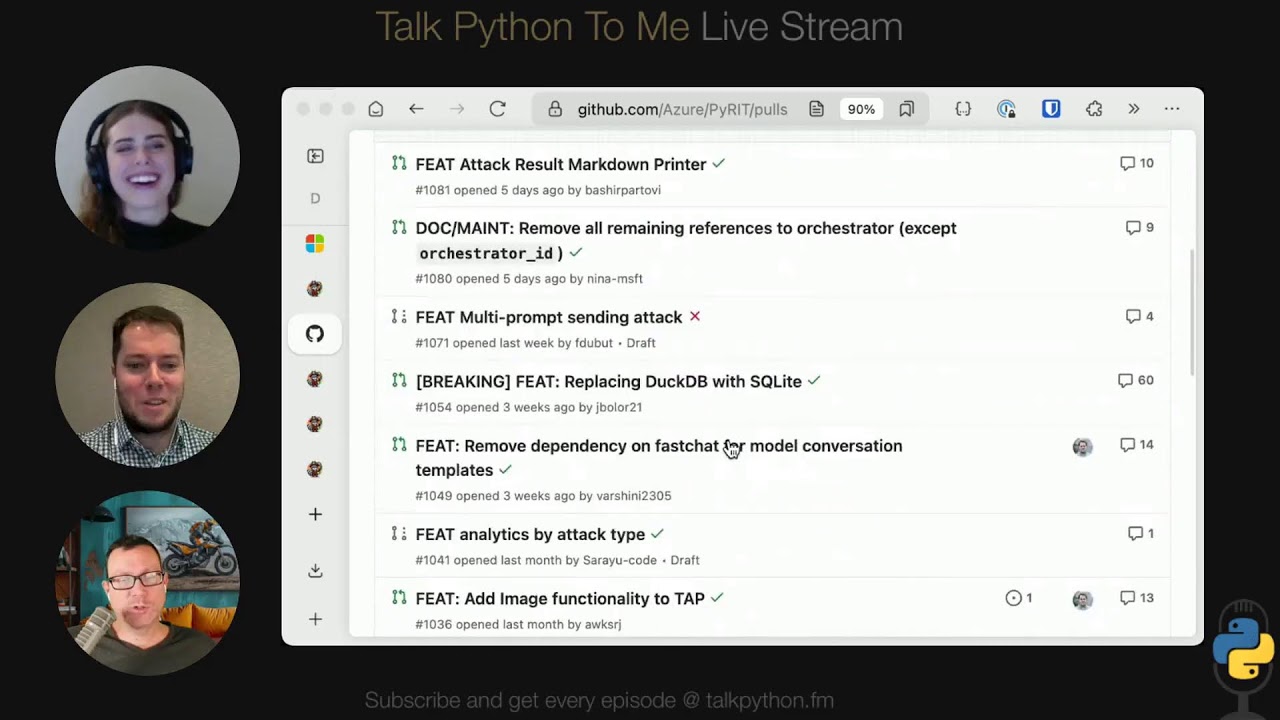

#red-teaming

from Axios

2 weeks ago

Anthropic's newest AI model uncovered 500 zero-day software flaws in testing

Before its debut, Anthropic's frontier red team tested Opus 4.6 in a sandboxed environment to see how well it could find bugs in open-source code. The team gave the Claude model everything it needed to do the job - access to Python and vulnerability analysis tools, including classic debuggers and fuzzers - but no specific instructions or specialized knowledge. Claude found more than 500 previously unknown zero-day vulnerabilities in open-source code using just its "out-of-the-box" capabilities...

Information security

Artificial intelligence

from Futurism

2 months ago

Anthropic's Advanced New AI Tries to Run Vending Machine, Goes Bankrupt After Ordering PlayStation 5 and Live Fish

An AI agent operating on Anthropic's Claude failed to profitably run an office vending machine, incurred losses, and was shut down after three weeks.

from The Hacker News

3 months ago

From Tabletop to Turnkey: Building Cyber Resilience in Financial Services

Financial institutions are facing a new reality: cyber-resilience has gone from a best practice, to an operational necessity, to a prescriptive regulatory requirement. Crisis management or tabletop exercises, long relatively rare in cybersecurity, are now mandatory for FSI organizations under a series of regulations in several regions, including DORA (Digital Operational Resilience Act) in the EU; CPS230 / CORIE (Cyber Operational Resilience Intelligence-led Exercises) in Australia...

Information security

Information security

from The Hacker News

3 months ago

Russian Ransomware Gangs Weaponize Open-Source AdaptixC2 for Advanced Attacks

AdaptixC2 is an open-source, extensible post-exploitation command-and-control (C2) framework with advanced features that threat actors, including groups linked to ransomware, are increasingly adopting.