""We started to think about comparing these models in terms of environmental resources, water, energy, and carbon footprint," says Abdeltawab Hendawi, assistant professor at the University of Rhode Island."

"The findings are stark. OpenAI's o3 model and DeepSeek's main reasoning model use more than 33 watt-hours (Wh) for a long answer, which is more than 70 times the energy required by OpenAI's smaller GPT-4.1 nano."

"Claude-3.7 Sonnet, developed by Anthropic, is the most eco-efficient, the researchers claim, noting that hardware plays a major role in the environmental impact of AI models."

"Even short queries consume a noticeable amount of energy. A single brief GPT-4o prompt uses about 0.43 Wh. At OpenAI's estimated 700 million GPT-4o calls per day..."

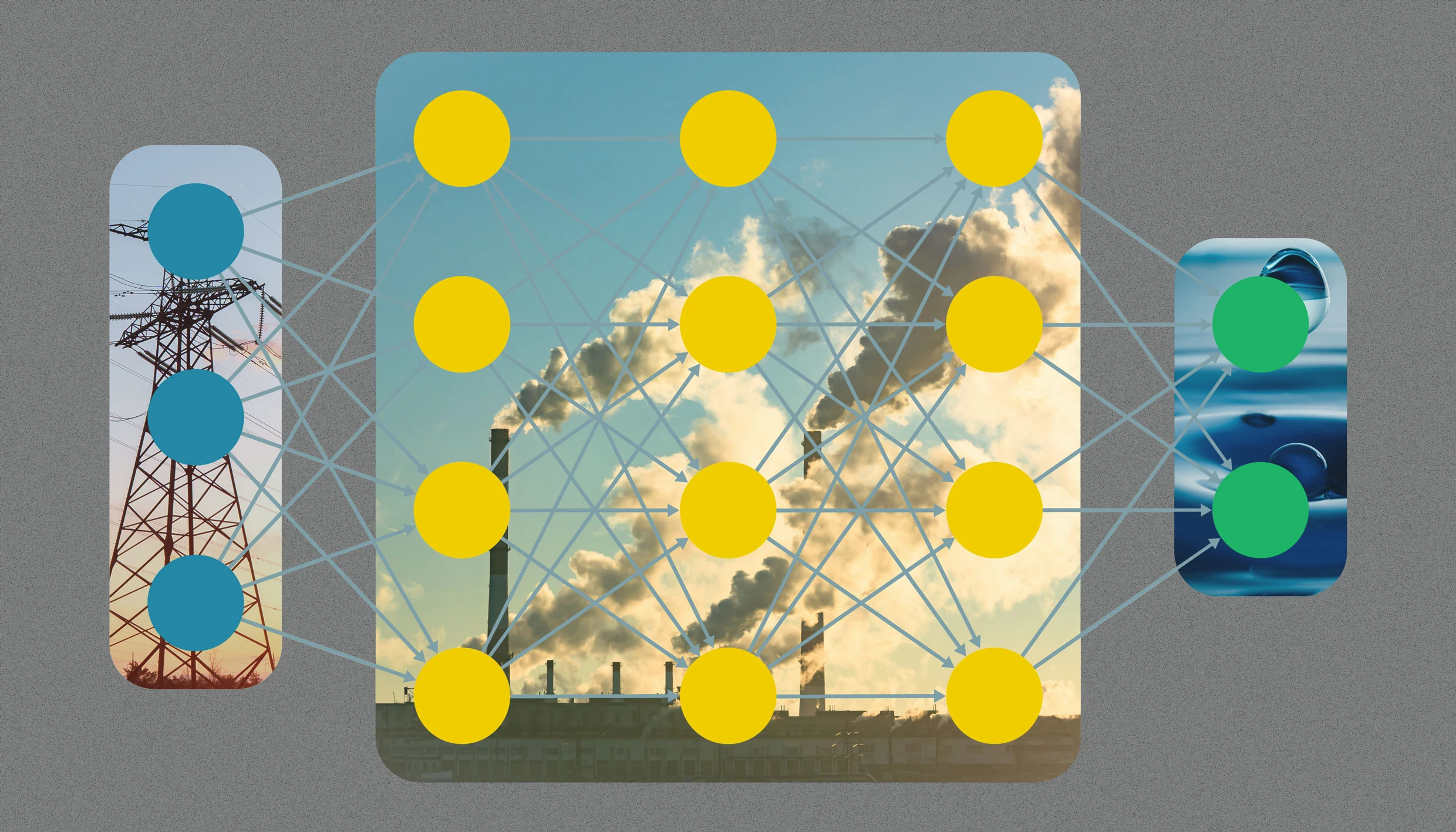

AI companies are reluctant to disclose their environmental impact, but a recent study involving University of Rhode Island researchers provides a clearer picture. The researchers developed an infrastructure-aware benchmark that estimates the environmental footprint of 30 mainstream AI models from latency data, GPU operations, and regional power grids. The results show wide variation in eco-efficiency: some models use more than 33 watt-hours (Wh) for a long answer, more than 70 times the energy required by OpenAI's smaller GPT-4.1 nano, which adds to growing concern about the environmental toll of AI systems.
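
To make the idea of an infrastructure-aware estimate concrete, here is a simplified sketch of how latency, hardware power draw, and a regional grid's carbon intensity could be combined into a per-query footprint. It is not the study's actual method: the formula (power times time, scaled by facility overhead) is a common back-of-the-envelope approach, and every name and number below is a hypothetical placeholder rather than a value from the research.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical deployment parameters; none of these come from the study."""
    hardware_power_watts: float   # assumed average draw of the serving hardware
    pue: float                    # assumed data-center power usage effectiveness
    grid_g_co2_per_kwh: float     # assumed carbon intensity of the regional grid


def energy_wh(latency_seconds: float, d: Deployment) -> float:
    """Energy per query in Wh: power x time, scaled by facility overhead (PUE)."""
    return d.hardware_power_watts * (latency_seconds / 3600) * d.pue


def carbon_g(latency_seconds: float, d: Deployment) -> float:
    """Carbon per query in grams of CO2: energy in kWh x grid intensity."""
    return energy_wh(latency_seconds, d) / 1000 * d.grid_g_co2_per_kwh


# Placeholder inputs chosen only to exercise the functions.
example = Deployment(hardware_power_watts=5000.0, pue=1.2, grid_g_co2_per_kwh=400.0)
print(f"{energy_wh(10.0, example):.2f} Wh per query")
print(f"{carbon_g(10.0, example):.2f} g CO2 per query")
```

The point of the sketch is that the same model can have a different footprint depending on where and on what hardware it runs, since the grid intensity and overhead terms vary by deployment.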

Read at Fast Company
