#gpus

#nvidia #ai-infrastructure #data-centers #ai-chips #artificial-intelligence #ai #openai #amd #ai-hardware

Artificial intelligence

from 24/7 Wall St.

1 month ago: 2026's Biggest AI Trends: Self-Driving Cars | TSLA, GOOGL, UBER, NVDA Stock

Vision-only autonomous driving, enabled by AI and GPUs, reduces cost and complexity. That enables large-scale deployment and drives massive demand, which benefits platforms like NVIDIA.

from The Motley Fool

1 month ago: Nvidia vs. Alphabet: Which Is the Better AI Growth Stock for 2026? | The Motley Fool

There are numerous ways to bet on AI (artificial intelligence). But two paths are particularly intriguing: the AI technology suppliers and the beneficiaries of AI at scale. In other words, you can buy the company selling the "picks and shovels," or the chips and systems powering AI. Or, alternatively, you can invest in a company that integrates AI into existing products, services, and infrastructure used by billions of people.

Artificial intelligence

from 24/7 Wall St.

2 months ago: Lukewarm AI Plays Can Catch Up in 2026

Undoubtedly, there are still a lot of nerves out there over the latest wave of volatility, which may very well be the start of a painful, drawn-out move lower. Whether we're in an AI bubble, though, remains a mystery. It'll probably be the big question going into the new year. With a recent wave of relief powering hard-hit AI stocks higher in the last few sessions, it seems like AI fears might be in an even bigger bubble than the AI stocks themselves.

Artificial intelligence

from 24/7 Wall St.

2 months ago: This Chinese GPU IPO Just Soared 425%. Has NVIDIA Met its Match?

For now, it's tough for U.S. investors to get a piece of Moore Threads, a firm that many may never have heard of prior to its multi-bagger IPO session last week. The company, which, like Nvidia, makes GPUs (Graphics Processing Units) to power the AI boom, might be worth keeping tabs on, especially if you have a lot of skin in the AI race with the U.S. AI innovators and hyperscalers.

Artificial intelligence

Artificial intelligence

from Techzine Global

2 months ago: Nebius expands AI through billion-dollar deals with Microsoft and Meta

Nebius secured multibillion-dollar GPU and data-center deals with Microsoft and Meta, rapidly emerging as Europe’s largest neocloud provider and expanding GPU-based AI services.

from 24/7 Wall St.

3 months ago: Is Nvidia or Broadcom the Better Pick for a $75,000 Retirement Investment?

Artificial intelligence has powered much of the stock market's growth since late 2022. When ChatGPT launched that November, it proved how useful generative AI could be, and investor excitement exploded. Since then, the six largest tech companies have collectively gained more than $8 trillion in market value. Two of the biggest beneficiaries have been Nvidia (NASDAQ: NVDA) and Broadcom (NASDAQ: AVGO), whose market caps have soared 681% and 378% over that period.

Artificial intelligence

from Business Insider

3 months ago: This big AI bubble argument is wrong

The big worry centers on GPUs, the chips needed to train and run AI models. As new GPUs come out, older ones get less valuable, through obsolescence and wear and tear. Cloud companies must use depreciation to reduce the value of these assets over a period that reflects reality. The faster the depreciation, the bigger the hit to earnings. Investors have begun to worry that GPUs only have useful lives of one or two years,

Artificial intelligence

from Business Insider

3 months ago: Mark Zuckerberg says researchers at his philanthropy don't want more lab space or head count: 'They just want GPUs'

"We are not expanding a lot of square footage, per se, but we're expanding our compute," Chan said on an episode of "The a16z Podcast" that aired November 6, when talking about their investment in Biohub, a collection of biology labs the philanthropy has backed since 2016. "The researchers, they don't want employees working for them, they don't want space, they just want GPUs," Zuckerberg added. "In a sense, that's new lab space. It's much more expensive than wet lab space," said Chan, who is a pediatrician by training.

Artificial intelligence

from The Register

3 months ago: Microsoft, Alphabet throw more cash on AI bonfire

Microsoft declared on Monday that it will spend more than $7.9 billion on infrastructure for its AI strategy in the UAE from the start of 2026 to the end of 2029. The Redmond firm says that this will comprise more than $5.5 billion in capital expenses for expansion of its AI and cloud infrastructure, including new steps it plans to disclose in the UAE capital Abu Dhabi later this week, plus $2.4 billion in planned local operating expenses.

Tech industry

from 24/7 Wall St.

3 months ago: This Is Why AI Is Not a Bubble and Nvidia will Reach $10 Trillion

Valuations further differentiate the periods: dotcom tech stocks often traded at 150 to 180 times trailing earnings, while current AI frontrunners average around 40 times. Some AI hardware purchasers have reported improved return on invested capital (ROIC), but results vary across the industry. This solid groundwork distinguishes AI from historical bubbles and primes leading companies like Nvidia for substantial expansion.

Tech industry

from InfoQ

4 months ago: IBM Cloud Code Engine Serverless Fleets with GPUs for High-Performance AI and Parallel Computing

IBM Cloud Code Engine, the company's fully managed, strategic serverless platform, has introduced Serverless Fleets with integrated GPU support. With this new capability, the company directly addresses the challenge of running large-scale, compute-intensive workloads such as enterprise AI, generative AI, machine learning, and complex simulations on a simplified, pay-as-you-go serverless model. Historically, as noted in academic papers, including a recent Cornell University paper, serverless technology struggled to efficiently support these demanding, parallel workloads,

Artificial intelligence

from 24/7 Wall St.

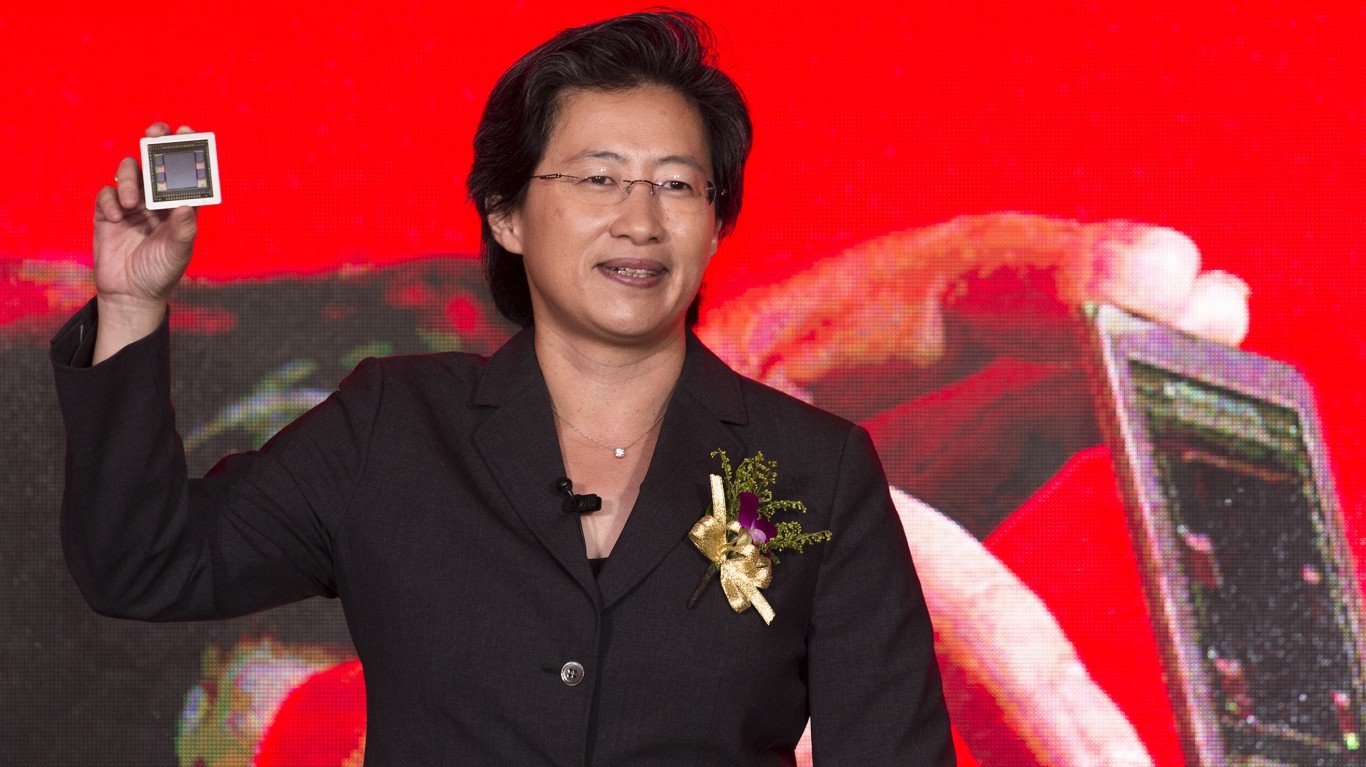

4 months ago: After Massive OpenAI Deal, Is AMD Stock Still a Buy?

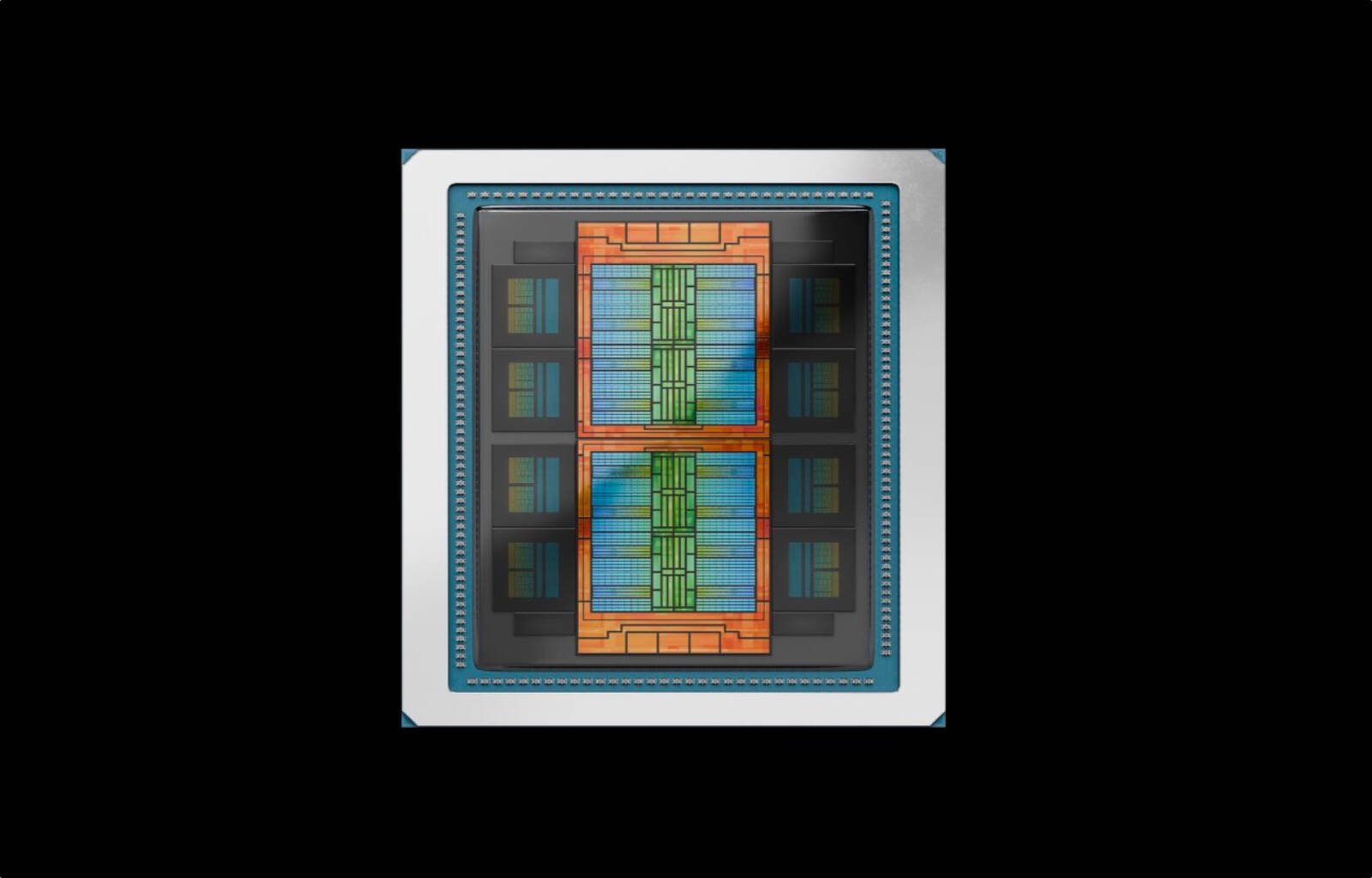

Advanced Micro Devices (NASDAQ: AMD) shocked investors yesterday with a landmark multi-year agreement to supply OpenAI with 6 gigawatts of its Instinct graphics processing units (GPUs), starting with 1 gigawatt in the second half of 2026. The deal, which could generate tens of billions in annual revenue for AMD, includes a warrant allowing OpenAI to acquire up to 160 million shares - roughly 10% of the company - for a nominal fee, tied to deployment milestones and stock price targets up to $600 per share.

Tech industry

from TechCrunch

5 months ago: Cohere hits $7B valuation a month after its last raise, partners with AMD | TechCrunch

On Wednesday, enterprise AI model-maker Cohere said it raised an additional $100 million - bumping its valuation to $7 billion - in an extension to a round announced in August. The August round was an oversubscribed $500 million raise at a $6.8 billion valuation, the company said at the time.

Venture

from ComputerWeekly.com

5 months ago: Agentic AI: Storage and 'the biggest tech refresh in IT history' | Computer Weekly

It's a very broad question. But, to start, I think it's important to point out that this is in some respects an entirely new form of business logic and a new form of computing. And so, the first question becomes, if agentic systems are reasoning models coupled with agents that perform tasks by leveraging reasoning models, as well as different tools that have been allocated to them to help them accomplish their tasks ... these models need to run on very high-performance machinery.

Artificial intelligence
