#agi

#openai #artificial-intelligence #ai-safety #microsoft #large-language-models #xai #gpt-5 #chatgpt #investment

Artificial intelligence

from Business Insider

1 week ago: OpenClaw creator makes a case for 'specialized intelligence' over superintelligence

Specialized, task-focused AI produces practical advances and aligns with human societal specialization, offering more value than pursuing hypothetical AGI or superintelligence.

from www.theguardian.com

3 weeks ago: China lags behind US at AI frontier but could quickly catch up, say experts

"The world today is witnessing the dawn of an AI-driven intelligent revolution," Eddie Wu told a developer conference in September. "Artificial general intelligence (AGI) will not only amplify human intelligence but also unlock human potential, paving the way for the arrival of artificial superintelligence (ASI)." ASI, Wu said, could produce a generation of 'super scientists' and 'full-stack super engineers', who would tackle unsolved scientific and engineering problems at unimaginable speeds.

Tech industry

from www.theguardian.com

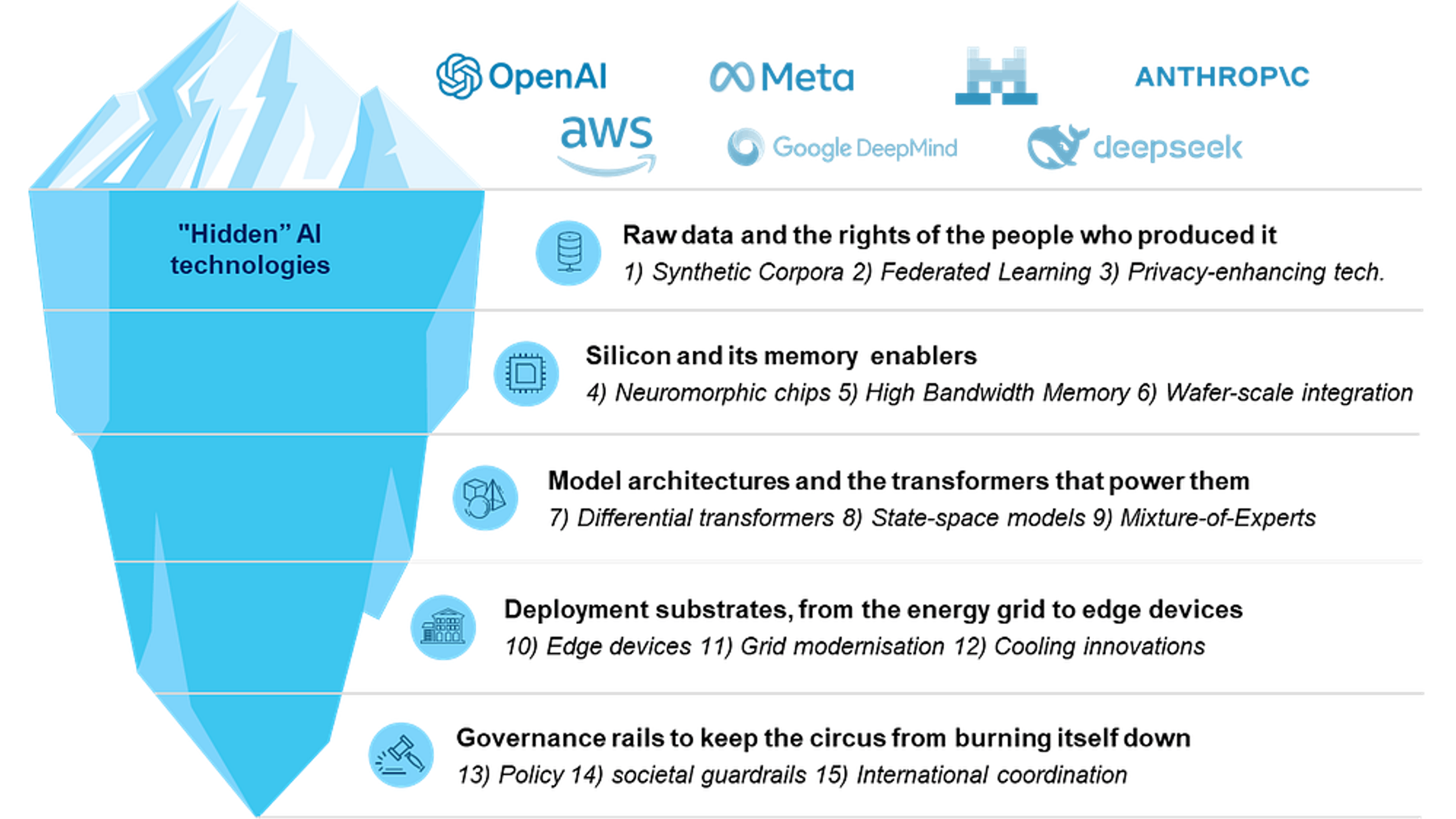

1 month ago: 'We could hit a wall': why trillions of dollars of risk is no guarantee of AI reward

Trillions of dollars rest on the answer. The figures are staggering: an estimated $2.9tn (£2.2tn) being spent on datacentres, the central nervous systems of AI tools; the more than $4tn stock market capitalisation of Nvidia, the company that makes the chips powering cutting-edge AI systems; and the $100m signing-on bonuses offered by Mark Zuckerberg's Meta to top engineers at OpenAI, the company behind ChatGPT.

Artificial intelligence

from The Verge

2 months ago: AI companies are sick of their favorite buzzword - so they're inventing new ones

Major AI companies are abandoning the term 'AGI' and rebranding essentially equivalent concepts with proprietary labels while publicly downplaying AGI as a meaningful milestone.

Artificial intelligence

from Fortune

2 months ago: Amazon CEO Andy Jassy announces departure of AI exec Rohit Prasad in leadership shakeup

Rohit Prasad will depart Amazon at year-end; Peter DeSantis will lead AI models, custom chips, and quantum computing; Pieter Abbeel will head frontier model research.

Artificial intelligence

from Business Insider

2 months ago: OpenAI's head of Codex says the bottleneck to AGI is humanity's inability to type fast enough

Human typing and prompt-writing speed bottleneck AGI progress; enabling AI agents to validate and autonomously review work will unlock rapid productivity growth.

from Business Insider

2 months ago: Google DeepMind CEO Demis Hassabis says AI scaling 'must be pushed to the maximum'

Google DeepMind CEO Demis Hassabis, whose company just released Gemini 3 to widespread acclaim, has made it clear where he stands on the issue. "The scaling of the current systems, we must push that to the maximum, because at the minimum, it will be a key component of the final AGI system," he said at Axios' AI+ Summit in San Francisco last week. "It could be the entirety of the AGI system."

Artificial intelligence

from Fast Company

3 months ago: AI CEOs are promising all-powerful superintelligence. Government insiders have thoughts

AI promises breakthroughs in medicine, science, and human-level intelligence while governments lag in adoption and critics warn of unreliability and threats to democratic values.

Artificial intelligence

from Business Insider

3 months ago: Sam Altman shares his 'best, accidental' career advice - and why it's the best time to get into computer science

Focus on AI now; hang around the smartest people, work on interesting problems, and improve quickly—computer science is high-leverage with AI reshaping society.

from The Register

3 months ago: Intel CTO and AI boss quits to join OpenAI

Katti replied with a post in which he declared himself "Excited for the opportunity to work with" Brockman, OpenAI CEO Sam Altman, and others at the company "on building out the compute infrastructure for AGI!" He also said he's very grateful for the tremendous opportunity and experience at Intel over the last 4 years leading networking, edge computing and AI,

Artificial intelligence

from Futurism

3 months ago: Sam Altman Says That in a Few Years, a Whole Company Could Be Run by AI, Including the CEO

OpenAI CEO Sam Altman says that an era when entire companies are run by AI models is nearly upon us. And if he has it his way, it'll be OpenAI leading the charge, even if it means losing his job. "Shame on me if OpenAI isn't the first big company run by an AI CEO," Altman said on an episode of the "Conversations with Tyler" podcast recorded last month

Artificial intelligence

from Business Insider

3 months ago: Google is hiring an economist to understand how advanced AI could affect our wallets

You will lead a new area of research, exploring post-AGI economics, the future of scarcity, and the distribution of power and resources in a world fundamentally reshaped by advanced AI,

Artificial intelligence

from Business Insider

4 months ago: Meta CMO says there are 3 things that will determine whether AI causes companies to grow or shrink head count

AI will both eliminate and create jobs through efficiency gains and new work, and the net workforce impact for companies remains uncertain.

Venture

from Fortune

5 months ago: Why IVP's Somesh Dash believes that Silicon Valley can look beyond artificial general intelligence for spirituality

Tech's push toward AGI and machine-to-machine systems risks eroding human connection, making empathy, community, and service indispensable and irreplaceable strengths.

from Futurism

5 months ago: Anti-AI Activist on Day Three of Hunger Strike Outside Anthropic's Headquarters

AI fever might have an iron grip on Fortune 500 CEOs, Wall Street traders, and government officials, but there are still some out there immune to the tech industry's charms. For evidence, look no further than activist and organizer Guido Reichstadter, who's currently running on day three of a hunger strike on the front steps of the headquarters of the AI giant Anthropic.

Artificial intelligence

from Computerworld

5 months ago: AGI explained: Artificial intelligence with humanlike cognition

As artificial intelligence, particularly generative AI, gains traction in the business world after years of promise, a new generation of AI is starting to emerge - at least in the hype cycle. It's not agentic AI, it's not robotic AI, or physical AI. It is artificial general intelligence, or AGI. Two years ago, fear of AGI run amok prompted 1,000 tech leaders and AI researchers to sign an open letter calling for a pause on new AI model rollouts.

Artificial intelligence

from www.scientificamerican.com

6 months ago: Can Writing Math Proofs Teach AI to Reason Like Humans?

A few months before the 2025 International Mathematical Olympiad (IMO) in July, a three-person team at OpenAI made a long bet that they could use the competition's brutally tough problems to train an artificial intelligence model to think on its own for hours so that it was capable of writing math proofs. Their goal wasn't simply to create an AI that could do complex math but one that could evaluate ambiguity and nuance, skills AIs will need if they are to someday take on many challenging real-world tasks.

Artificial intelligence

from www.theguardian.com

6 months ago: 'It's missing something': AGI, superintelligence and a race for the future

OpenAI's new GPT-5 model represents a significant step toward artificial general intelligence, yet it lacks crucial elements like autonomous continuous learning, limiting its full potential.

Artificial intelligence

from Fortune

6 months ago: AI is gutting workforces - but an ex-Google exec says CEOs are too busy 'celebrating' their efficiency gains to see they're next

My belief is it is 100% crap. The best at any job will remain. The best software developer, the one that really knows architecture, knows technology, and so on will stay—for a while.

Artificial intelligence