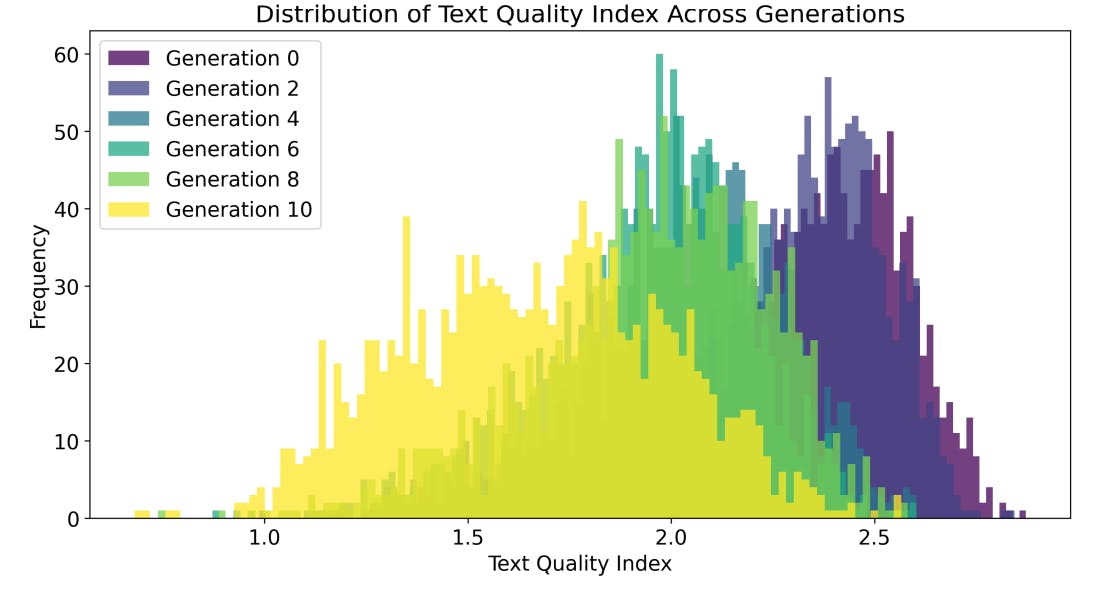

"The experimental results indicate a consistent trend of quality degradation in outputs across multiple generations, suggesting possible issues within model calibration and bias."

"This study demonstrates how iterative training methodologies can amplify biases and degrade text quality, emphasizing the importance of qualitative assessments in AI-generated text."

The article discusses the implications of iterative synthetic generation in AI models, focusing on how models like GPT-2 show quality degradation and bias amplification over successive generations. A series of experiments, including mathematical formulations of WMLE, various sampling setups, and qualitative bias analysis, reveals consistent trends of falling text quality indices and rising perplexity. The findings highlight the impact of training methods on model performance and call for rigorous evaluation of AI outputs to ensure ethical and reliable use.
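For readers unfamiliar with the perplexity metric mentioned in the summary, here is a minimal sketch of the standard definition: the exponential of the negative mean per-token log-probability. The function name and the sample values are hypothetical and chosen only for illustration; they are not taken from the article's experiments.

```python
import math

def perplexity(token_log_probs):
    """Return exp of the negative mean natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Hypothetical per-token log-probabilities (illustrative values, not article data).
# A model that assigns lower probability to each token yields higher perplexity.
early_generation = [math.log(0.5)] * 4
later_generation = [math.log(0.25)] * 4

print(perplexity(early_generation) < perplexity(later_generation))
```

A rising value across generations means the evaluating model finds the text less predictable, which is the direction of the trend the summary describes.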

Read at Hackernoon
