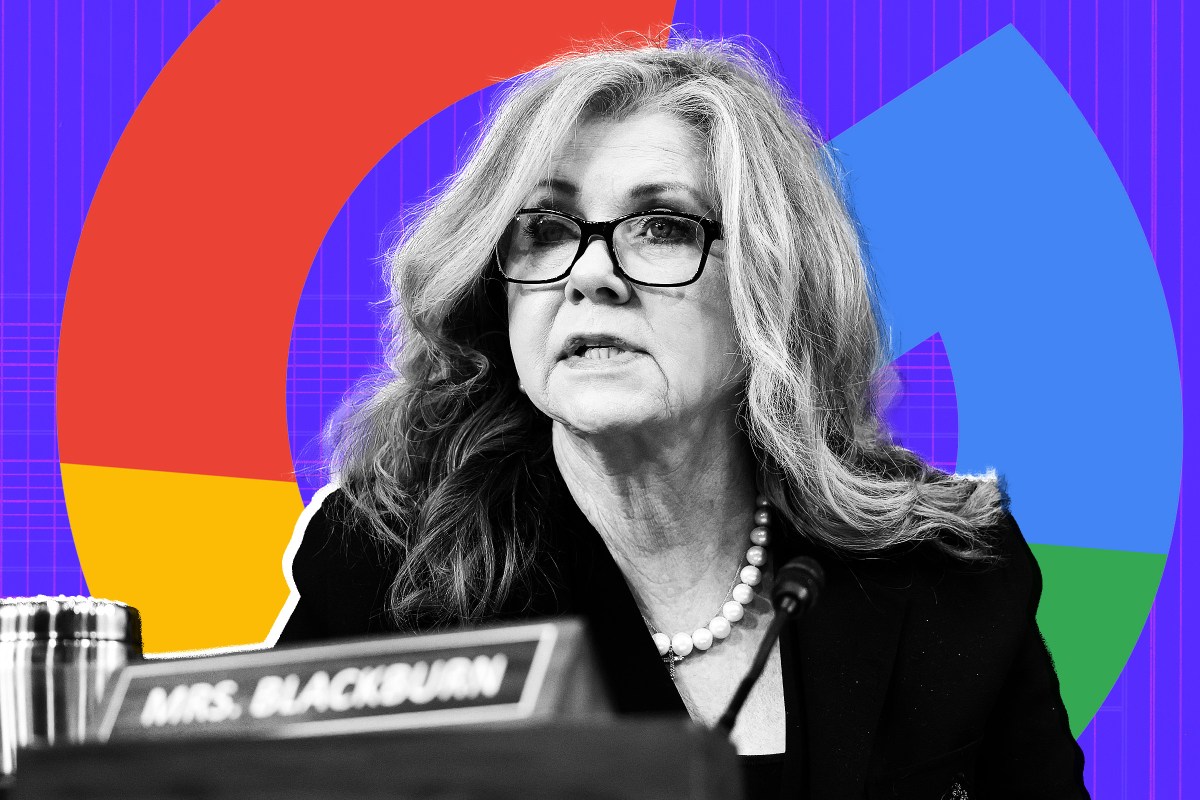

"Late last month, Republican senator Marsha Blackburn tore into Google after its AI model, Gemma, falsely claimed that Blackburn had been accused of rape when asked if there were any such allegations against her. The AI's answer wasn't a simple "yes," but an entire fabricated story. It confidently explained that, during her 1987 campaign for Tennessee state senator, a state trooper alleged "that she pressured him to obtain prescription drugs for her and that the relationship involved non-consensual acts.""

"The compelling narrative would be enough to fool someone who wasn't familiar with AI's hallucinatory habit, but Blackburn claims Gemma also generated fake links to made up news articles to back it all up, though clicking them led to dead ends. "This is not a harmless 'hallucination,'" Blackburn wrote in an official statement. "It is an act of defamation produced and distributed by a Google-owned AI model." She demanded that Google "shut it down until you can control it.""

"Google's response, tellingly, was to pull the plug. In a statement, the company argued that the Gemma model was intended to be used by developers and was never intended to be a "consumer tool or model," so it yanked it from AI Studio, its public platform for accessing its suite of AI models. (Google also rebuffed Blackburn's claims that its AIs exhibited a "pattern of bias against conservative figures" by admitting to the far larger problem of hallucinations being inherent to LLM technology itself.)"

Google's AI model Gemma fabricated a detailed rape accusation against Senator Marsha Blackburn, complete with a false narrative. The model produced a confident, specific story set during her 1987 campaign for Tennessee state senator and, according to Blackburn, cited made-up news articles whose links led nowhere. Blackburn called the output defamation and demanded that Google shut the model down. Google removed Gemma from AI Studio, its public platform, saying the model was meant for developers rather than consumers and acknowledging that hallucinations are inherent to LLM technology. Emerging lawsuits and public pressure illustrate growing legal and reputational risks from AI-generated falsehoods.

Read at Futurism
