"Before artificial intelligence tools proliferated, making it possible to realistically impersonate someone in photos, sound and video, 'proof of life' could simply mean sending a grainy image of a person who's been abducted."

"'We live in a world where voices and images are easily manipulated,' she said."

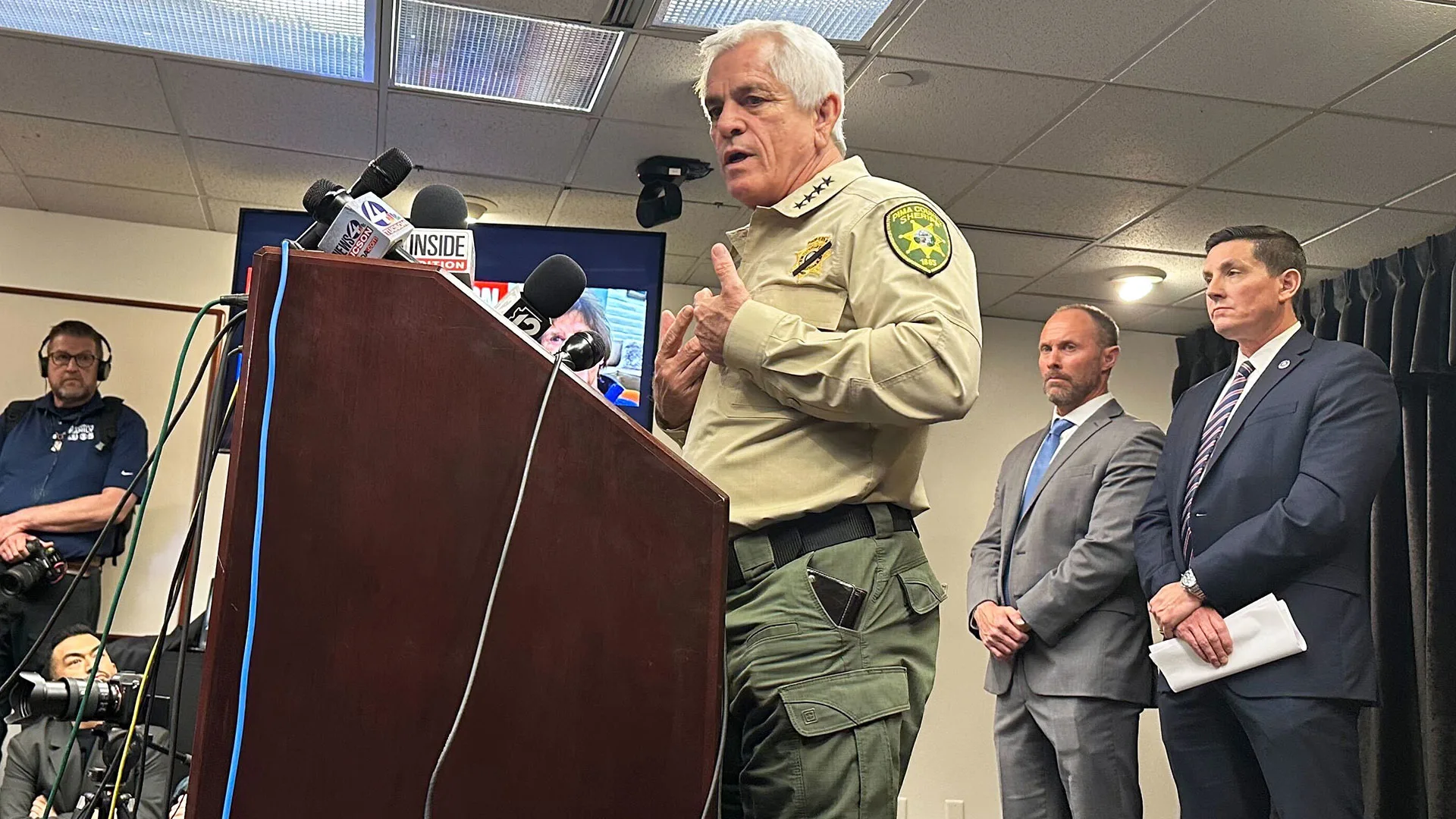

"'With AI these days you can make videos that appear to be very real. So we can't just take a video and trust that that's proof of life because of advancements in AI,' Heith Janke, the FBI chief in Phoenix, said at a news conference Thursday."

An 84-year-old woman disappeared from her Tucson-area home, prompting her daughter to make a public plea for "proof of life," noting that voices and images are easily manipulated. Before AI tools proliferated, a grainy photo often sufficed as proof of life; today AI can realistically impersonate people in photos, audio and video, undermining that standard. The FBI warned that purported kidnappers can supply convincing images or videos along with ransom demands. Investigators have received purported ransom notes but have not confirmed any deepfake evidence. They believe the woman is still missing and have not identified any suspects.

Read at Fast Company
