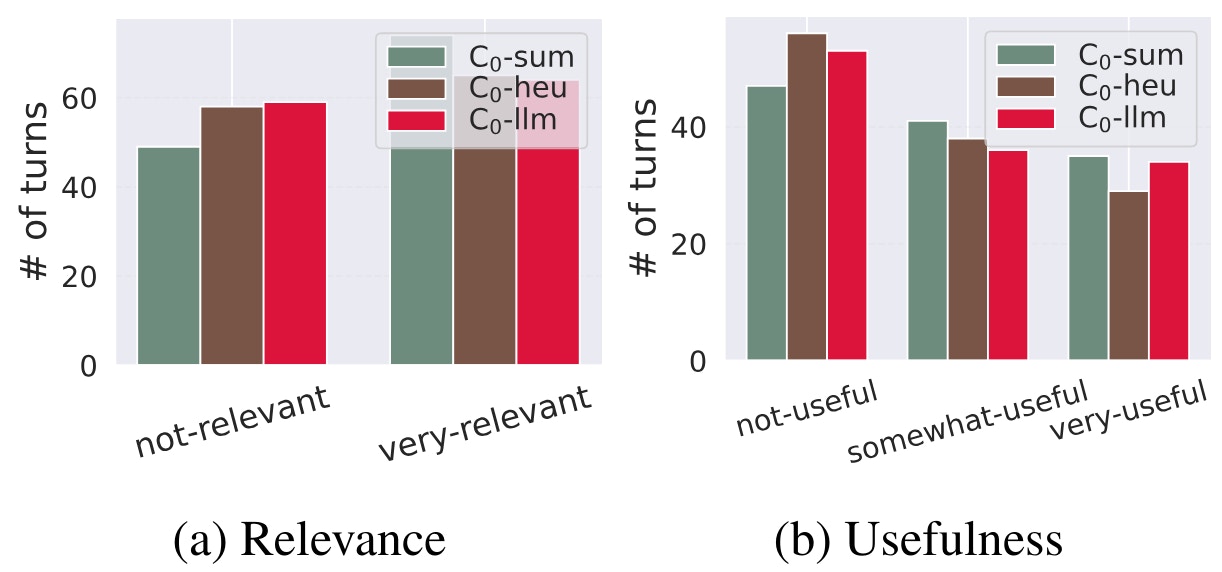

"Our analysis reveals that when no prior dialogue context is provided, annotators rate system responses as more relevant or useful due to the lack of contextual evidence."

"The findings indicate that casual conversational cues, such as movie inquiries, led annotators to assume relevance, resulting in higher ratings for system responses in C0."

Researchers from the University of Amsterdam studied the role of dialogue context in crowdsourcing experiments. Their analysis shows that annotators given no prior context (C0) tend to rate system responses as more relevant and useful than annotators given some context (C3 and C7). This suggests that, without context, annotators fall back on assumptions about the user's earlier inquiries, particularly in casual conversations. The results highlight how strongly the available dialogue context affects judgments of response quality in dialogue systems.

Read at Hackernoon
