"Sampling is essential in machine learning: well-chosen training and validation samples help guard against overfitting and improve predictions on unseen data."

"Scala Spark and PySpark make random sampling scalable, yet they face challenges, including memory errors that arise when a large dataset is pulled onto a single machine."

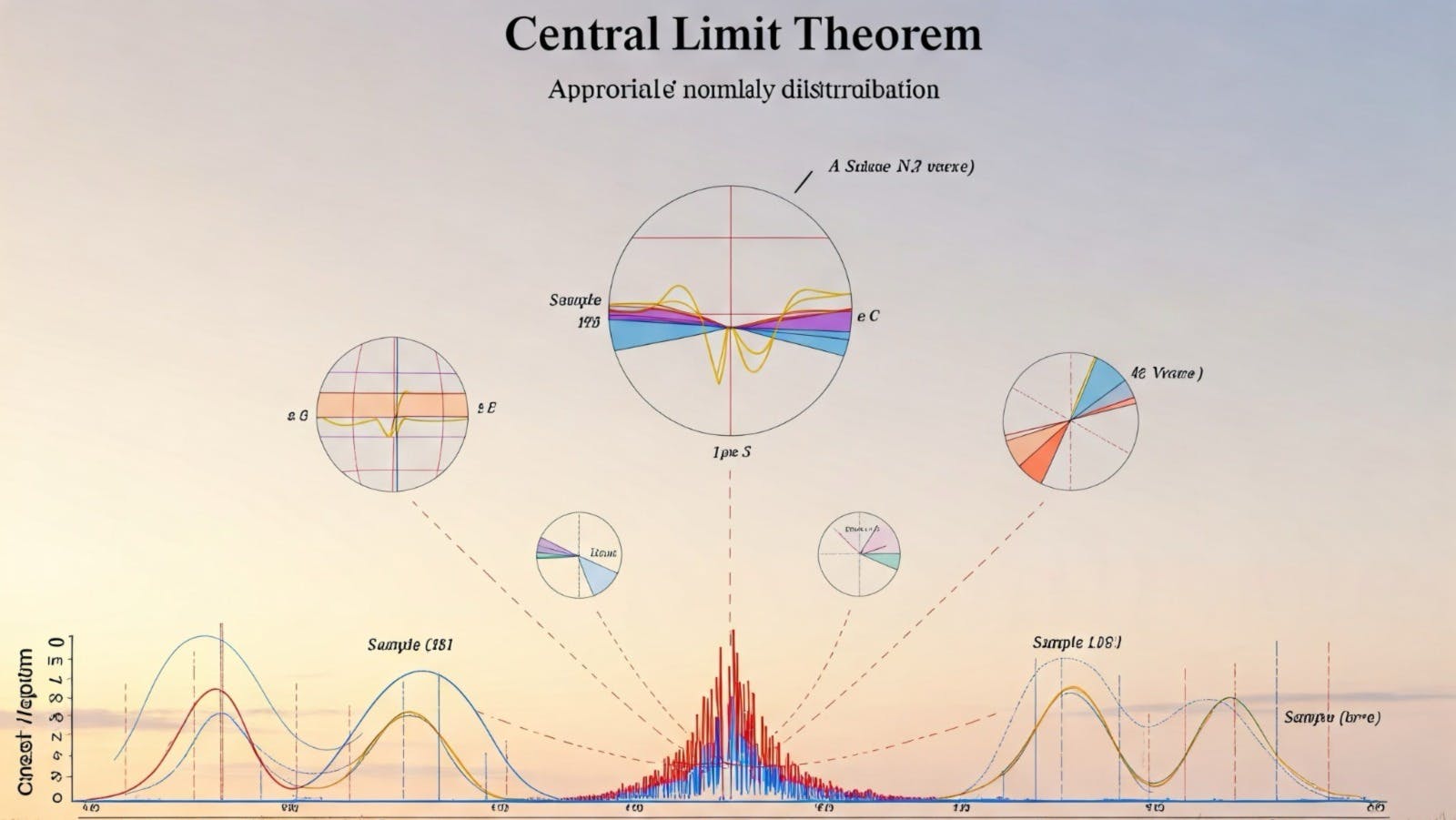

Sampling is a critical technique in machine learning that involves selecting random subsets of records from large datasets. Well-drawn samples help reduce overfitting and let models generalize to new data. Effective sampling methods are crucial for building robust ML models, especially with large-scale data, which is commonly processed with Spark through Scala or PySpark. However, memory errors can arise when data is pulled to a single machine, such as the Spark driver. This article discusses how to achieve effective random sampling using Scala Spark and explores how the central limit theorem applies in this context.
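To make the two ideas concrete, here is a minimal, library-free Scala sketch. It is not the article's code and does not use Spark. It assumes the data is split into hypothetical lazily generated partitions and keeps each element independently with probability `fraction` (Bernoulli sampling, roughly what Spark's `sample` does when `withReplacement` is false). It then uses the central limit theorem to attach an approximate 95% confidence interval to the sample mean. The names `SamplingSketch`, `bernoulliSample`, `partition`, and `run` are illustrative only.

```scala
import scala.util.Random

// A library-free sketch (not Spark code): each hypothetical "partition" is
// sampled on its own, so the full dataset never has to sit in one collection.
object SamplingSketch {

  // Bernoulli sampling: keep each element independently with probability
  // `fraction`. A fixed seed makes the sample reproducible.
  def bernoulliSample(partition: Iterator[Double], fraction: Double, seed: Long): Iterator[Double] = {
    require(fraction >= 0.0 && fraction <= 1.0, "fraction must be in [0, 1]")
    val rng = new Random(seed)
    partition.filter(_ => rng.nextDouble() < fraction)
  }

  // Sample mean and its standard error s / sqrt(n), using the unbiased (n - 1) variance.
  def meanAndStdErr(xs: Seq[Double]): (Double, Double) = {
    val n = xs.size
    require(n >= 2, "need at least two observations")
    val mean = xs.sum / n
    val variance = xs.map(x => (x - mean) * (x - mean)).sum / (n - 1)
    (mean, math.sqrt(variance / n))
  }

  // Hypothetical data source: partition p yields 125,000 uniform values in [0, 1),
  // generated lazily so that no partition is materialized in full.
  def partition(p: Int): Iterator[Double] = {
    val r = new Random(1000L + p)
    Iterator.fill(125000)(r.nextDouble())
  }

  // Sample about 1% of 8 partitions, each with its own seed; returns (n, mean, stdErr).
  def run(): (Int, Double, Double) = {
    val sample = (0 until 8).flatMap(p => bernoulliSample(partition(p), 0.01, 42L + p).toVector)
    val (mean, se) = meanAndStdErr(sample)
    (sample.size, mean, se)
  }

  def main(args: Array[String]): Unit = {
    val (n, mean, se) = run()
    // CLT: the sample mean is approximately normal, so mean +/- 1.96 * se is an
    // approximate 95% confidence interval for the population mean (0.5 here).
    println(f"n=$n mean=$mean%.4f 95%% CI=[${mean - 1.96 * se}%.4f, ${mean + 1.96 * se}%.4f]")
  }
}
```

On a real cluster, the sampling step would instead be something like `df.sample(false, 0.01, 42L)` on a DataFrame, which runs on the executors. Only the small sample, or aggregates computed from it, would then reach the driver, which avoids calling `collect()` on the full dataset.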

Read at Hackernoon
