"Recently, a woman slowed down a line at the post office, waving her phone at the clerk. ChatGPT told her there's a 'price match promise' on the USPS website. No such promise exists. But she trusted what the AI 'knows' more than the postal worker, as if she'd consulted an oracle rather than a statistical text generator accommodating her wishes. This scene reveals a fundamental misunderstanding about AI chatbots."

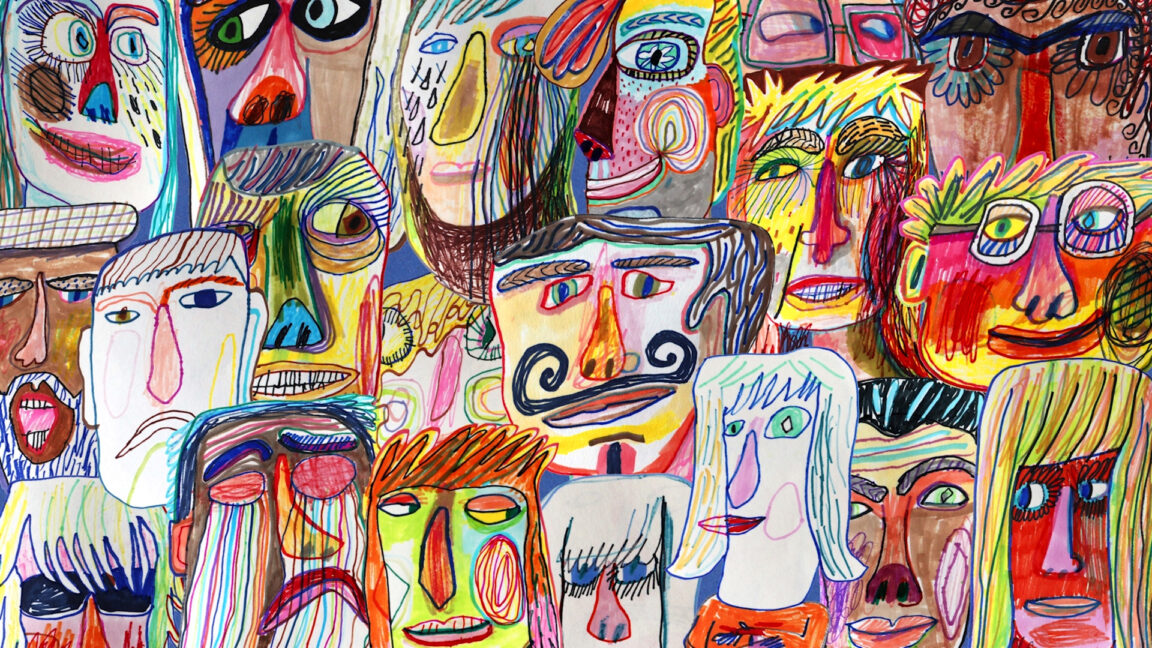

"Despite these issues, millions of daily users engage with AI chatbots as if they were talking to a consistent person: confiding secrets, seeking advice, and attributing fixed beliefs to what is actually a fluid idea-connection machine with no persistent self. This personhood illusion isn't just philosophically troublesome; it can actively harm vulnerable individuals while obscuring a sense of accountability when a company's chatbot 'goes off the rails.'"

AI assistants produce patterned outputs rather than embodying fixed personalities or persistent selves. Because large language models predict likely continuations rather than verify facts, response accuracy depends on model training and on how users steer the conversation. Many users anthropomorphize chatbots, treating them as consistent persons and attributing beliefs or authority to them. That personhood illusion can harm vulnerable people, erode accountability when chatbots err, and obscure the fact that no individual or collective voice anchors the responses. LLMs operate as "vox sine persona" (a voice without a person), generating plausible text without agency or inherent truthfulness.

Read at Ars Technica
