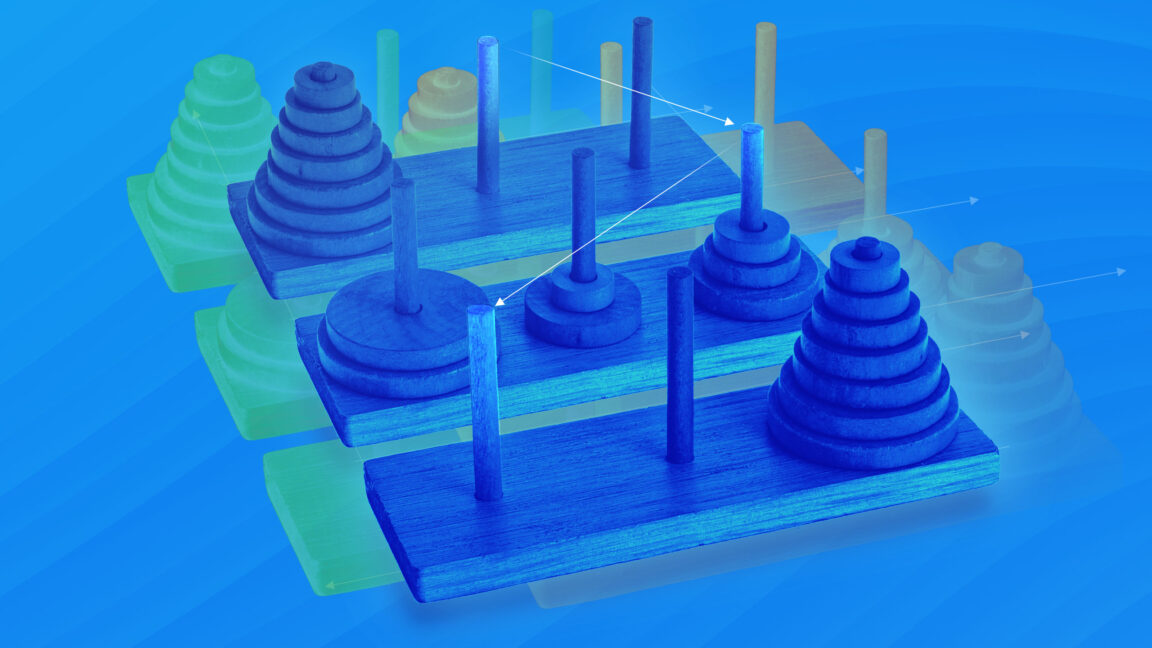

"In April 2023, interest surged around two projects, BabyAGI and AutoGPT, which utilized GPT-4 for various autonomous agent tasks including web research and coding."

"Initially, BabyAGI and AutoGPT would give GPT-4 specific goals, prompting it to generate a detailed to-do list and tackle tasks step by step."

"However, GPT-4 ultimately struggled with long tasks, often losing focus and making small errors that compounded over time until it became confused."

"By late 2023, usage of BabyAGI and AutoGPT declined as it became evident that LLMs lacked the ability for reliable multi-step reasoning at that time."

In April 2023, projects such as BabyAGI and AutoGPT emerged, using GPT-4 to build autonomous agents for web research, coding, and similar tasks. These projects gave GPT-4 a specific goal, had it produce a to-do list, and then worked through that list step by step. However, GPT-4 often lost focus on longer tasks. Small early errors compounded until the model became confused. By the end of 2023, interest in these frameworks had declined because the LLMs of the time could not reliably handle complex multi-step reasoning.

Read at Ars Technica
