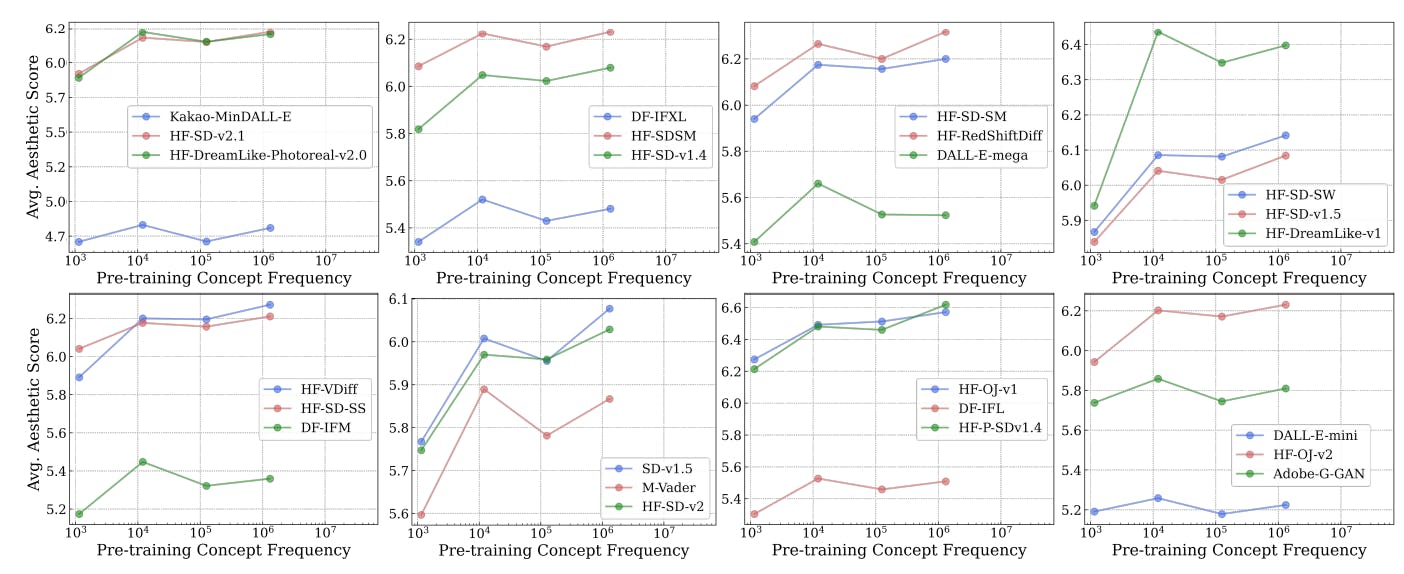

"The study identifies a strong relationship between how often a concept appears in pretraining data and zero-shot performance on that concept: higher pretraining frequency goes with better task outcomes across a range of metrics."

"Experimental results indicate that pretraining data in which a concept appears more frequently improves zero-shot performance on that concept, confirming a consistent scaling trend from concept frequency to performance."

Higher pretraining concept frequencies correlate with improved zero-shot performance in multimodal models across retrieval, classification, and generation tasks. Two classes of models are analyzed, Image-Text models and Text-to-Image models, each evaluated on diverse datasets. The experimental results show that concept frequency is an important factor in downstream performance. Understanding concept frequencies can therefore offer insight into model capabilities and help guide pretraining strategies for multimodal applications.
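The frequency-performance relationship described above can be checked, in spirit, by counting how often each concept appears in pretraining captions and regressing per-concept zero-shot scores on the log of that count. The sketch below is a minimal illustration under stated assumptions, not the study's pipeline: `concept_frequencies` and `fit_log_linear` are hypothetical helpers, concept matching is naive single-word token matching, the log-scale fit and the +1 smoothing are our own choices, and the numbers in the usage block are made up for demonstration.

```python
import math
import re
from collections import Counter


def concept_frequencies(captions, concepts):
    """Count how many captions mention each single-word concept.

    Hypothetical, naive matcher: lowercases each caption and checks for the
    concept as a whole alphabetic token. Real pipelines would need
    lemmatization, synonyms, and multi-word concepts.
    """
    counts = Counter()
    for caption in captions:
        tokens = set(re.findall(r"[a-z]+", caption.lower()))
        for concept in concepts:
            if concept.lower() in tokens:
                counts[concept] += 1
    # Report every requested concept, including ones never seen (count 0).
    return {concept: counts[concept] for concept in concepts}


def fit_log_linear(frequencies, scores):
    """Least-squares fit of score = slope * log10(freq + 1) + intercept.

    Returns (slope, intercept, pearson_r). The +1 is an assumed smoothing
    term so that concepts absent from pretraining (freq == 0) stay defined.
    """
    if len(frequencies) != len(scores) or len(frequencies) < 2:
        raise ValueError("need at least two (frequency, score) pairs")
    xs = [math.log10(f + 1) for f in frequencies]
    ys = list(scores)
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var_x = sum((x - x_mean) ** 2 for x in xs)
    var_y = sum((y - y_mean) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("frequencies and scores must both vary")
    slope = cov / var_x
    intercept = y_mean - slope * x_mean
    pearson_r = cov / math.sqrt(var_x * var_y)
    return slope, intercept, pearson_r


if __name__ == "__main__":
    # Toy captions standing in for a pretraining corpus (made up).
    captions = ["A dog on the grass.", "A dog and a cat", "a cat sleeping", "dog running"]
    print(concept_frequencies(captions, ["dog", "cat", "zebra"]))
    # Made-up frequencies and per-concept zero-shot accuracies.
    slope, intercept, r = fit_log_linear([9, 99, 999], [0.2, 0.4, 0.6])
    print(f"slope={slope:.3f} intercept={intercept:.3f} r={r:.3f}")
```

On the toy captions this prints `{'dog': 3, 'cat': 2, 'zebra': 0}`. Fitting against the logarithm of frequency rather than raw counts reflects the intuition that each further gain in accuracy may require many more examples; a positive slope with a high `r` on real data would be the kind of evidence the summary refers to.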

Read at Hackernoon
