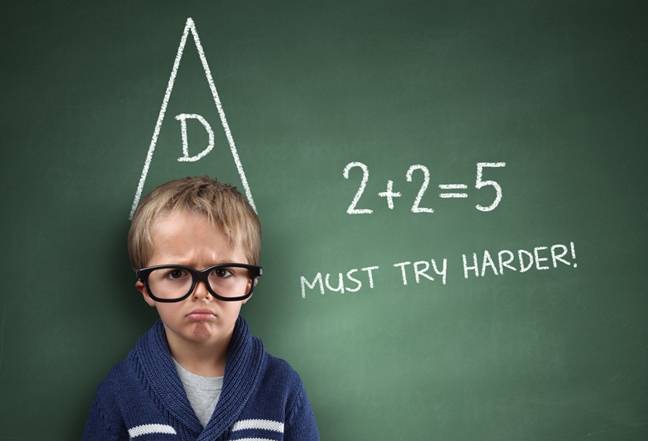

"Potemkins are to conceptual knowledge what hallucinations are to factual knowledge - hallucinations fabricate false facts; potemkins fabricate false conceptual coherence."

"Computer scientists propose the term 'potemkin understanding' to describe a failure mode in AI where models succeed at benchmarks but lack true concept comprehension."

Researchers from MIT, Harvard, and the University of Chicago have introduced the term "potemkin understanding" for a failure mode in large language models: a model scores well on conceptual benchmarks while lacking genuine comprehension of the concepts being tested. The name comes from Potemkin villages, false constructions built to deceive. The researchers distinguish "potemkins" from "hallucinations": hallucinations are factual errors, whereas potemkins reflect a superficial grasp of concepts. The term is also meant to avoid anthropomorphizing AI models while capturing their limited depth of understanding despite benchmark success.

Read at The Register
