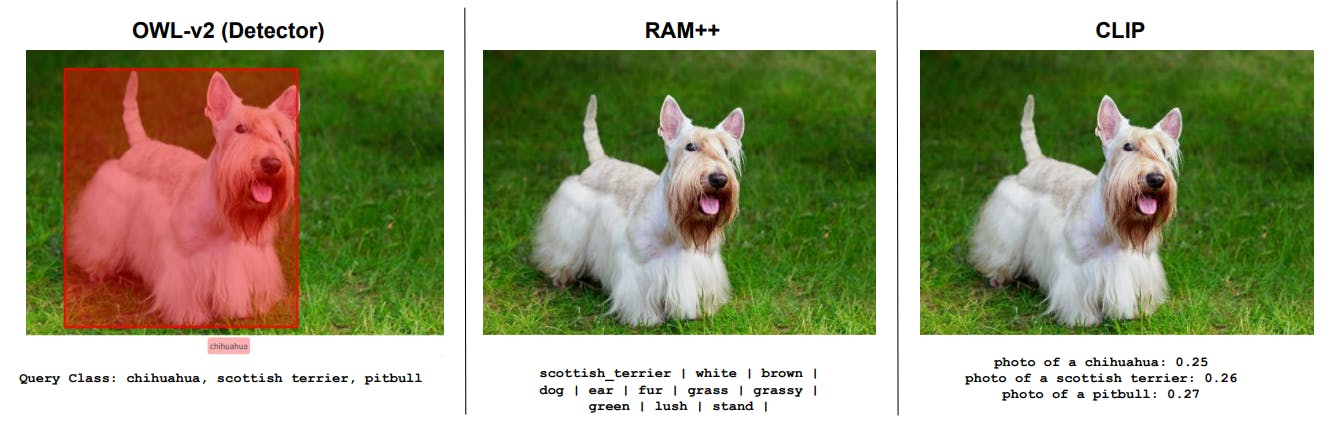

"The choice of the RAM++ model over CLIPScore or open-vocabulary detectors is justified by its performance on fine-grained classes and basic input images."

"Concept frequency derived using RAM++ anticipates model performance across various prompting strategies, retrieval metrics, and even when applied to T2I models."

"Experimental results indicate that pretraining frequency directly correlates to zero-shot performance, highlighting its significance in determining model efficacy."

"The research addresses several challenges, including the importance of concept generalization to synthetic datasets and the varying performance metrics across different datasets."

Pretraining frequency significantly predicts the zero-shot performance of models, showcasing efficacy across multiple metrics and experiments. RAM++ is employed for its strengths in handling fine-grained class assignments from concept frequencies. The study examines the generalization of pretraining data to various synthetic datasets while asserting the importance of controlling for similar samples. Additionally, it assesses performance scaling trends and highlights challenges related to concept alignment and model evaluation methodologies, establishing a foundational understanding of the interplay between frequency and performance.

Read at Hackernoon

Unable to calculate read time

Collection

[

|

...

]