From TechCrunch

10 hours ago | Artificial intelligence

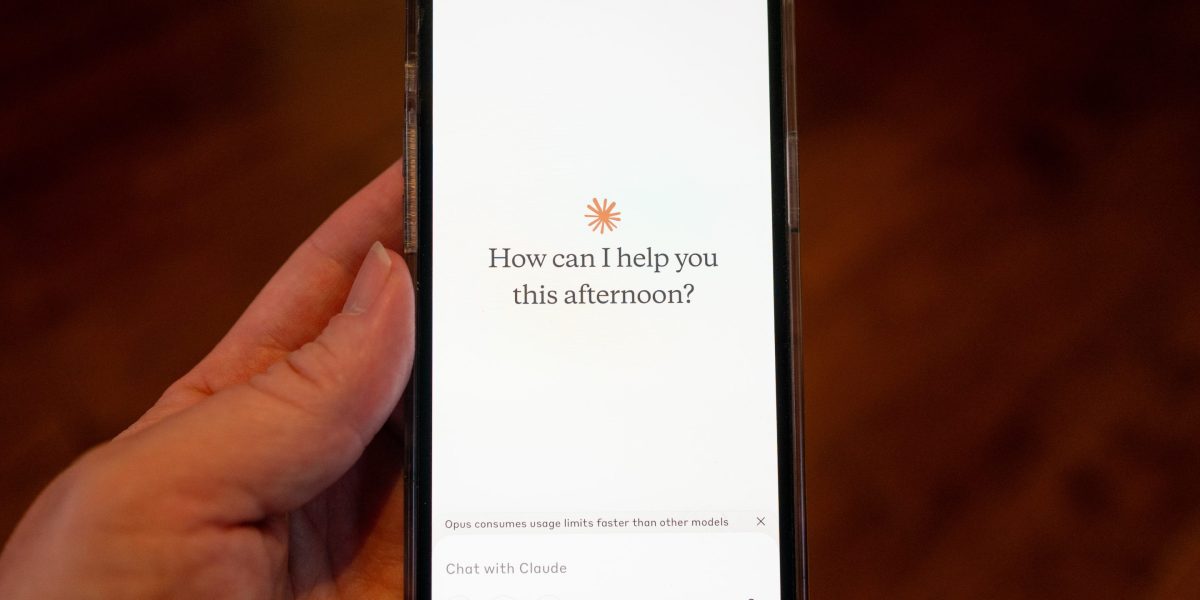

Anthropic revises Claude's 'Constitution,' and hints at chatbot consciousness

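Anthropic has updated Claude's Constitution, the set of natural-language principles at the core of its Constitutional AI training approach. The revision refines those principles to add nuance on ethics and user safety, and it reinforces self-supervision, in which the model is guided by written natural-language instructions rather than relying solely on human feedback.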