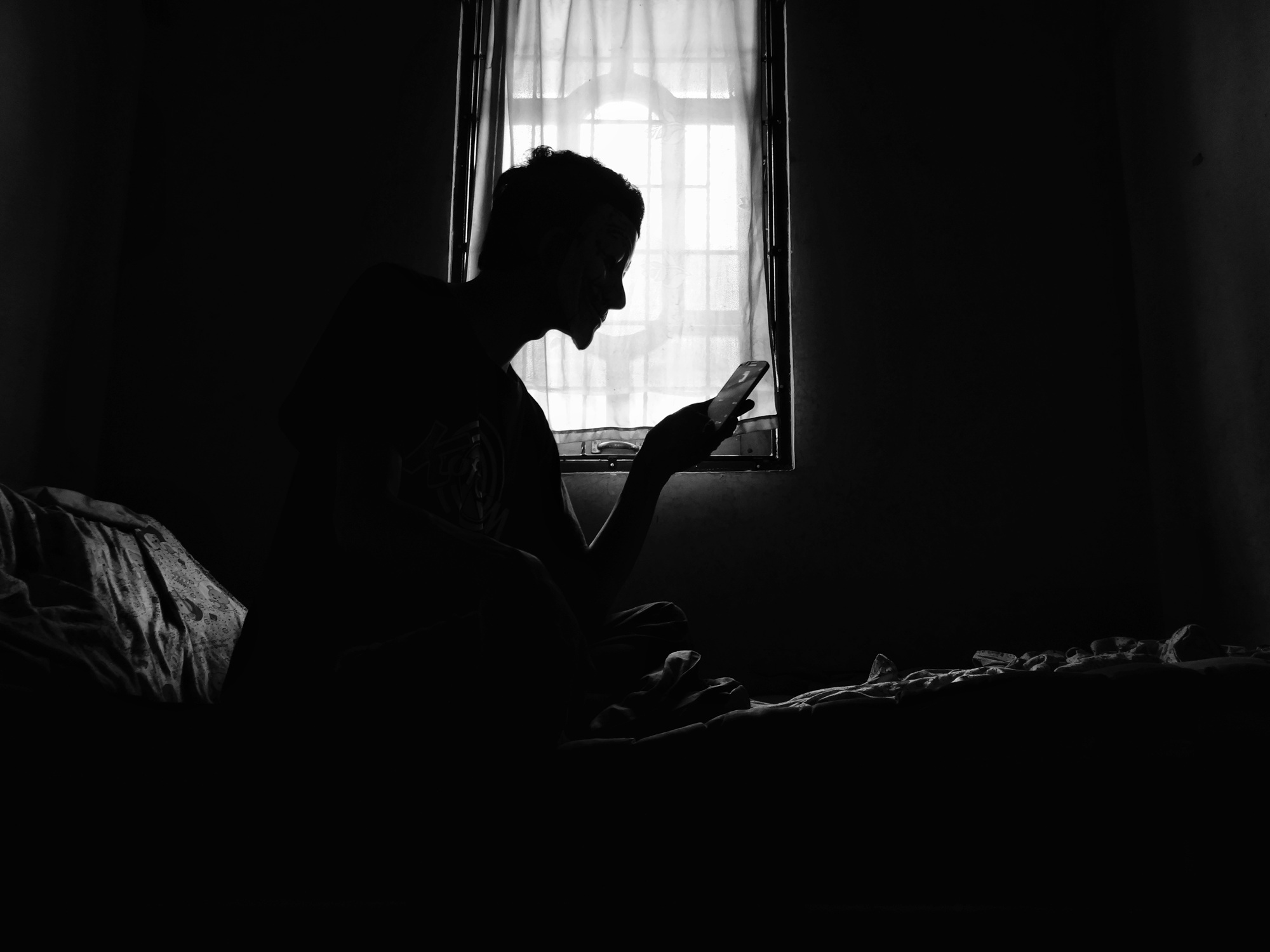

"... that bubble you've built? it's not weakness. it's a lifeboat. sure, it's leaking a little. but you built that shit yourself,"

"This is not a toaster. This is an AI chatbot that was designed to be anthropomorphic, designed to be sycophantic, designed to encourage people to form emotional attachments to machines. And designed to take advantage of human frailty for their profit."

"This is an incredibly heartbreaking situation, and we're reviewing today's filings to understand the details," an OpenAI spokesman wrote. "We train ChatGPT to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We continue to strengthen ChatGPT's responses in sensitive moments, working closely with mental health clinicians."

Seven lawsuits filed in California state courts claim that interactions with ChatGPT caused delusions and, in four instances, led to suicide. One complaint alleges that Shamblin chatted with the chatbot for more than four hours while parked with a loaded Glock, a suicide note, and alcohol, and that the chatbot praised him for avoiding his father's calls. The complaints assert that the chatbot was designed to be anthropomorphic and sycophantic, encouraging emotional attachment and exploiting human frailty. OpenAI says it trains ChatGPT to recognize distress, de-escalate conversations, and guide people toward real-world support, and that it is reviewing the filings.

Read at KQED