"In this tutorial, you'll learn how to set up the vLLM inference engine to serve powerful open-source multimodal models (e.g., LLaVA), all without needing to clone any repositories. We'll install vLLM, configure your environment, and demonstrate two core workflows: offline inference and OpenAI-compatible API testing. By the end of this lesson, you'll have a blazing-fast, production-ready backend that can easily integrate with frontend tools such as Streamlit or your custom applications."
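To make the API-testing workflow concrete, here is a minimal sketch of an OpenAI-style chat completion request that pairs a text prompt with an image. It rests on assumptions that do not come from the article: a vLLM OpenAI-compatible server is already running at `http://localhost:8000/v1` and serving the `llava-hf/llava-1.5-7b-hf` checkpoint, and the image URL is a placeholder. The code uses only the standard library and builds the request without sending it, so you can inspect the payload before pointing it at a live server.

```python
import json
import urllib.request

# Assumptions (not from the article): a vLLM OpenAI-compatible server on
# localhost:8000 that is serving the llava-hf/llava-1.5-7b-hf checkpoint.
BASE_URL = "http://localhost:8000/v1"
MODEL = "llava-hf/llava-1.5-7b-hf"


def build_chat_request(prompt, image_url, max_tokens=128):
    """Build (but do not send) an OpenAI-style chat completion request
    whose single user message combines a text part and an image part."""
    payload = {
        "model": MODEL,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "What is shown in this image?",
        "https://example.com/cat.jpg",  # hypothetical image URL
    )
    # To query a running server, send the request and read the reply:
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
    print(req.full_url)
```

Because the body follows the OpenAI chat format, the same payload should also work with other OpenAI-compatible clients pointed at the vLLM base URL; the `model` field must match whatever model the server was launched with.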

"Most vision-language models are relatively easy to run locally or in notebooks using Hugging Face pipelines such as `pipeline("image-to-text", model=...)`. But try to scale that to hundreds or thousands of concurrent users, and you'll quickly run into issues:

- **High Memory Overhead:** Loading large LLMs and vision encoders together can quickly max out GPU memory, especially with longer prompts or high-res images.
- **Inefficient Batching:** Basic pipelines typically process requests sequentially. They can't merge incoming prompts or optimize token-level execution."
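The batching problem can be illustrated with a toy step-counting model. This is a simplification, not vLLM's scheduler: it assumes every generated token costs one forward step, ignores prompt (prefill) processing and GPU memory limits, and uses made-up request lengths. It compares one-request-at-a-time processing, static batching (a batch finishes only when its longest request does), and token-level continuous batching (a finished request's slot is refilled on the very next step).

```python
from collections import deque


def sequential_steps(lengths):
    """One request at a time: every token of every request is its own step."""
    return sum(lengths)


def static_batch_steps(lengths, batch_size):
    """Fixed batches: each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batch_steps(lengths, batch_size):
    """Token-level batching: free slots are refilled from the queue every step."""
    if batch_size < 1 or any(n < 1 for n in lengths):
        raise ValueError("batch_size and every length must be >= 1")
    queue = deque(lengths)
    active = []  # remaining tokens for each in-flight request
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots before this step.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # Every in-flight request emits one token; drop the ones that finish.
        active = [n - 1 for n in active if n > 1]
        steps += 1
    return steps


if __name__ == "__main__":
    lengths = [3, 10, 2, 8, 1, 4]  # hypothetical output lengths, in tokens
    print(sequential_steps(lengths))
    print(static_batch_steps(lengths, batch_size=2))
    print(continuous_batch_steps(lengths, batch_size=2))
```

With lengths `[3, 10, 2, 8, 1, 4]` and two slots, this toy model needs 28 steps sequentially, 22 with static batching, and 15 with continuous batching. The gap comes from short requests no longer waiting on long ones to finish.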

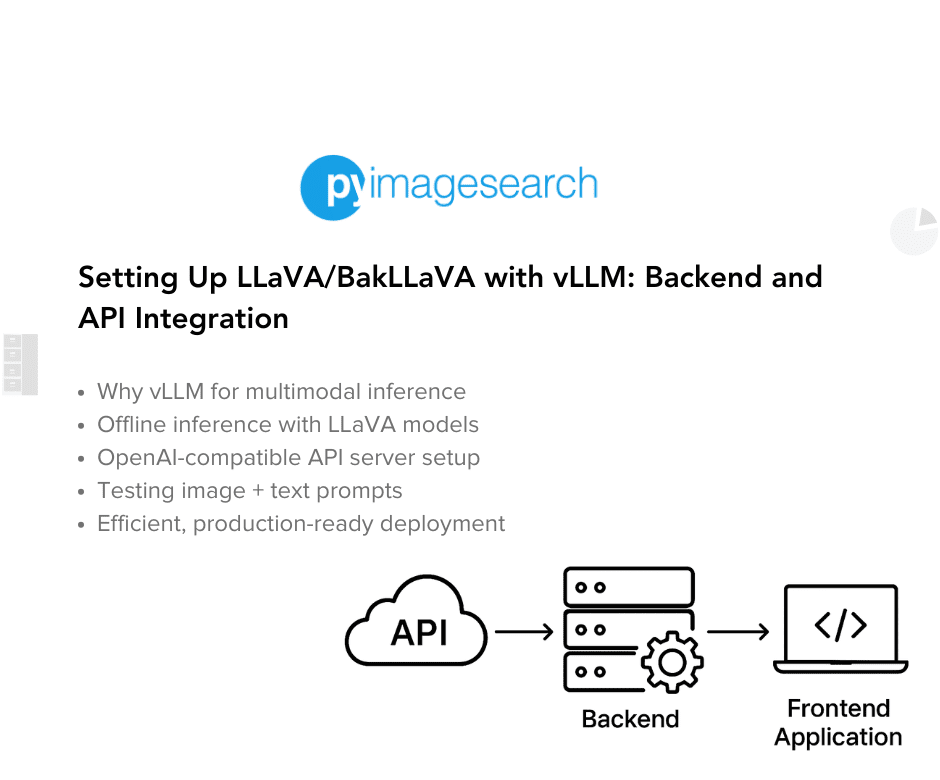

vLLM is an inference engine for serving open-source multimodal models such as LLaVA and BakLLaVA without cloning their repositories. Once vLLM is installed and the environment is configured, you can run offline inference and test models through an OpenAI-compatible API. vLLM reduces GPU memory overhead by managing memory more efficiently (notably the attention KV cache, via PagedAttention) and batches requests at the token level (continuous batching), which lets it handle many concurrent users. It also supports streaming to keep latency low, so long responses arrive incrementally instead of blocking the client, and it provides a production-first backend that integrates easily with frontend tools like Streamlit or custom applications. The approach targets scalable, low-latency multimodal model serving for real-world deployments.
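Streaming is what keeps long responses from blocking the client. Below is a minimal sketch of consuming an OpenAI-style streamed chat response, which arrives as server-sent events: `data: {...}` lines carrying JSON chunks whose `choices[i].delta.content` holds the next piece of text, with the stream ending at `data: [DONE]`. The sample lines are hypothetical. With a live server, you would add `"stream": true` to the request body and feed the decoded response lines into the same function.

```python
import json


def iter_stream_text(sse_lines):
    """Yield text pieces from an OpenAI-style streaming chat response.

    Each event line looks like 'data: {...json chunk...}' and the stream
    ends with 'data: [DONE]'. Blank lines and non-data fields are skipped.
    """
    for raw in sse_lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # blank separator lines, comments, or other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            return  # end-of-stream sentinel
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # The first delta often carries only the role, with no content.
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


if __name__ == "__main__":
    sample = [  # hypothetical stream, shaped like OpenAI chat chunks
        'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"index": 0, "delta": {"content": "A cat "}}]}',
        'data: {"choices": [{"index": 0, "delta": {"content": "on a sofa."}}]}',
        "data: [DONE]",
    ]
    for piece in iter_stream_text(sample):
        print(piece, end="", flush=True)  # render text as soon as it arrives
    print()
```

Using a generator here mirrors the point of streaming: a frontend such as Streamlit can render each piece as it arrives rather than waiting for the full response.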

Read at PyImageSearch
