#vllm

#llava #ai-inference #sglang #multimodal-llms #model-serving #pagedattention #memory-management #inferact

Startup companies

from TechCrunch

1 month ago

Sources: project SGLang spins out as RadixArk with $400M valuation as inference market explodes | TechCrunch

The team behind SGLang launched RadixArk to commercialize inference optimization; RadixArk was recently valued at roughly $400 million and is backed by Accel and angel investors.

from PyImageSearch

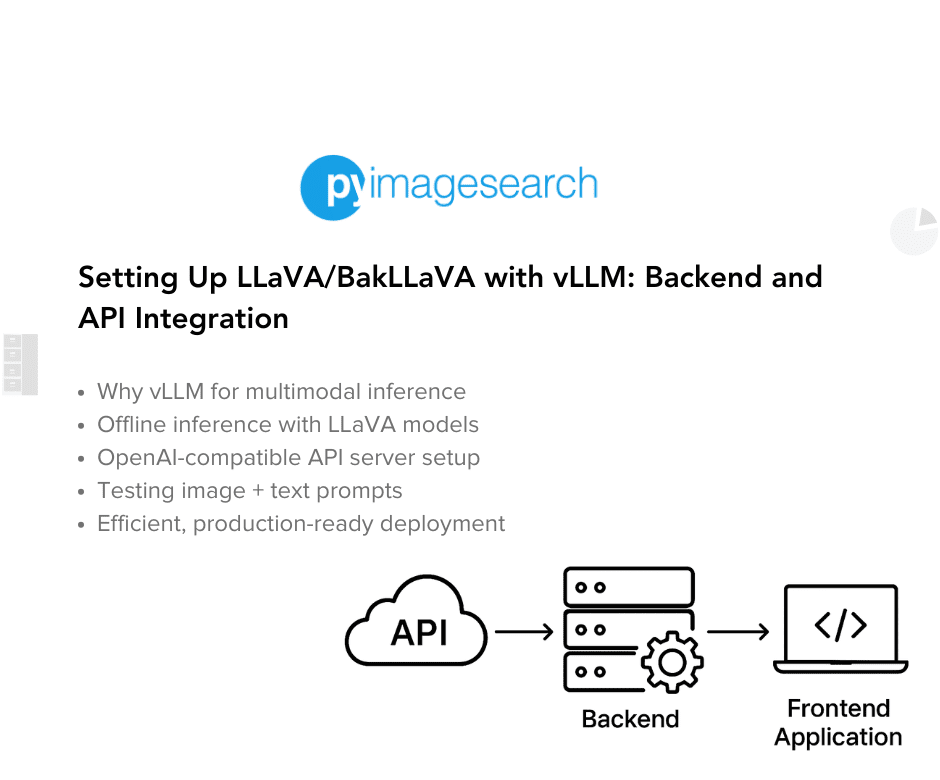

5 months ago

Building a Streamlit Python UI for LLaVA with OpenAI API Integration - PyImageSearch

In this tutorial, you'll learn how to build an interactive Streamlit Python-based UI that connects seamlessly with your vLLM-powered multimodal backend. You'll write a simple yet flexible frontend that lets users upload images, enter text prompts, and receive smart, vision-aware responses from the LLaVA model - served via vLLM's OpenAI-compatible interface. By the end, you'll have a clean multimodal chat interface that can be deployed locally or in the cloud - ready to power real-world apps in healthcare, education, document understanding, and beyond.
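As a rough illustration of what such a frontend sends to the backend, the sketch below builds an OpenAI-style chat completion request body that carries one image and one text prompt. It is not the tutorial's code: the helper name `build_chat_payload`, the model identifier, and the `max_tokens` value are assumptions made for illustration, and the endpoint path mentioned in the comments assumes a default OpenAI-compatible server layout.

```python
import base64


def build_chat_payload(image_bytes, prompt,
                       model="llava-hf/llava-1.5-7b-hf",  # assumed model id
                       mime="image/png"):
    """Build an OpenAI-style chat request body with one image and one prompt.

    Hypothetical helper: the name, defaults, and max_tokens are illustrative.
    """
    # Images are commonly passed inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
        "max_tokens": 256,  # assumed limit
    }


# A UI would POST this dict as JSON to the server's chat completions route
# (assumed here to be /v1/chat/completions on the host running the backend).
payload = build_chat_payload(b"abc", "Describe this image.")
```

In a Streamlit app, `image_bytes` would typically come from the uploaded file and `prompt` from a text input; the response text is then read from the first choice in the returned JSON.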

Python

from InfoWorld

5 months ago

Unlocking LLM superpowers: How PagedAttention helps the memory maze

KV blocks are like pages. Instead of contiguous memory, PagedAttention divides the KV cache of each sequence into small, fixed-size KV blocks. Each block holds the keys and values for a set number of tokens. Tokens are like bytes. Individual tokens within the KV cache are like the bytes within a page. Requests are like processes. Each LLM request is managed like a process, with its "logical" KV blocks mapped to "physical" KV blocks in GPU memory.
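The analogy above can be sketched as a toy block table. This is a simplified model under stated assumptions, not vLLM's implementation: `BLOCK_SIZE`, `BlockAllocator`, and `Request` are hypothetical names, and the "physical blocks" are plain integers standing in for regions of GPU memory.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV block (illustrative value)


class BlockAllocator:
    """Hands out physical block ids from a shared free pool."""

    def __init__(self, num_physical_blocks):
        self.free = list(range(num_physical_blocks))

    def allocate(self):
        if not self.free:
            raise MemoryError("no free KV blocks")
        return self.free.pop()

    def release(self, block_id):
        self.free.append(block_id)


@dataclass
class Request:
    """One request, managed like a process with its own block table."""

    block_table: list = field(default_factory=list)  # logical index -> physical id
    num_tokens: int = 0

    def append_token(self, allocator):
        # A new physical block is needed only when the last one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(allocator.allocate())
        self.num_tokens += 1

    def locate(self, token_index):
        # Translate a token position to (physical block, offset in block),
        # much like a page-table lookup.
        logical, offset = divmod(token_index, BLOCK_SIZE)
        return self.block_table[logical], offset


allocator = BlockAllocator(num_physical_blocks=8)
a, b = Request(), Request()
for _ in range(4):
    a.append_token(allocator)   # fills a's first block
b.append_token(allocator)       # b takes the next free block
a.append_token(allocator)       # a's second block need not be adjacent to its first
```

Because each request only holds the blocks it has actually filled, interleaved requests share the pool without reserving contiguous memory up front.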

Artificial intelligence

from InfoQ

5 months ago

GenAI at Scale: What It Enables, What It Costs, and How To Reduce the Pain

My name is Mark Kurtz. I was the CTO at a startup called Neural Magic. We were acquired by Red Hat at the end of last year, and are now working under the CTO arm at Red Hat. I'm going to be talking about GenAI at scale: essentially, what it enables (a quick overview on that), what it costs, and generally how to reduce the pain. Running through a little bit more of the structure, we'll go through the state of LLMs and real-world deployment trends.

Artificial intelligence
