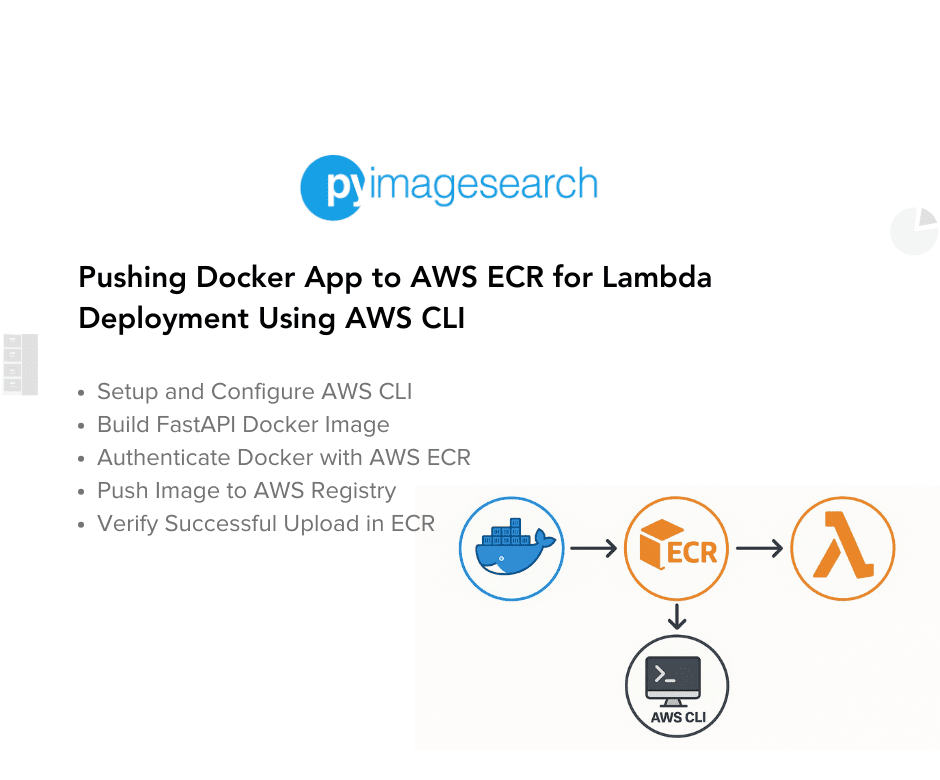

"To learn how to set up AWS CLI, containerize your FastAPI AI app, and push it to AWS ECR for Lambda deployment, just keep reading. In the previous lesson, we containerized our FastAPI AI inference app and tested it locally using Docker, ensuring consistent execution across environments. Since AWS Lambda supports container-based deployment, this step was essential for our serverless setup."

"Before deploying it to AWS Lambda, we need to store the container in AWS Elastic Container Registry (ECR) so Lambda can access it. In the previous lesson, we built a FastAPI-based AI inference server that loads an ONNX model for image classification, ran the app locally with Uvicorn to validate functionality, created a Dockerfile to package the FastAPI app into a container, built and tested the Docker container to ensure the app runs smoothly inside it, and verified the FastAPI inference endpoint using Postman, cURL, and browser requests."

The AWS CLI must be configured so that it can authenticate securely and interact with AWS services from the command line. With that in place, create an ECR repository, authenticate Docker to ECR, tag the local Docker image with the ECR repository URI, and push the image to ECR so Lambda can access it. The FastAPI-based AI inference server loads an ONNX model for image classification and runs under Uvicorn. Containerizing the app via a Dockerfile ensures consistent execution across environments. Test the container locally and verify the inference endpoint with Postman, cURL, or a browser before pushing. Storing the container image in AWS ECR prepares it for scalable, serverless deployment to AWS Lambda.
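The ECR steps named above (create a repository, authenticate Docker, tag, push) can be sketched as a small Python helper that only assembles the shell commands and does not run them. This is a minimal sketch, not the lesson's own script: the helper names `ecr_registry` and `ecr_push_commands`, the account ID, the region, and the repository and image names are all hypothetical placeholders. It assumes the AWS CLI has already been set up with `aws configure` and that the local image was built under the given name and tag.

```python
import shlex


def ecr_registry(account_id: str, region: str) -> str:
    """Return the private ECR registry hostname for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_push_commands(account_id: str, region: str, repo: str,
                      local_image: str, tag: str = "latest") -> list[str]:
    """Build (but do not execute) the shell commands for pushing an image to ECR.

    All argument values are illustrative placeholders chosen by the caller.
    """
    registry = ecr_registry(account_id, region)
    remote = f"{registry}/{repo}:{tag}"
    q = shlex.quote  # quote values so the commands are safe to paste into a shell
    return [
        # 1. Create the repository that will hold the image.
        f"aws ecr create-repository --repository-name {q(repo)} --region {q(region)}",
        # 2. Authenticate Docker to the registry with a temporary password.
        f"aws ecr get-login-password --region {q(region)} | "
        f"docker login --username AWS --password-stdin {q(registry)}",
        # 3. Tag the local image with the ECR repository URI.
        f"docker tag {q(local_image + ':' + tag)} {q(remote)}",
        # 4. Push the tagged image so Lambda can pull it later.
        f"docker push {q(remote)}",
    ]


if __name__ == "__main__":
    # Hypothetical values; substitute your own account ID, region, and names.
    for cmd in ecr_push_commands("123456789012", "us-east-1",
                                 "fastapi-onnx-inference",
                                 "fastapi-onnx-inference"):
        print(cmd)
```

Printing the commands rather than executing them keeps the sketch free of side effects; each line can be reviewed and then run by hand in a terminal where Docker and the AWS CLI are installed.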

Read at PyImageSearch
