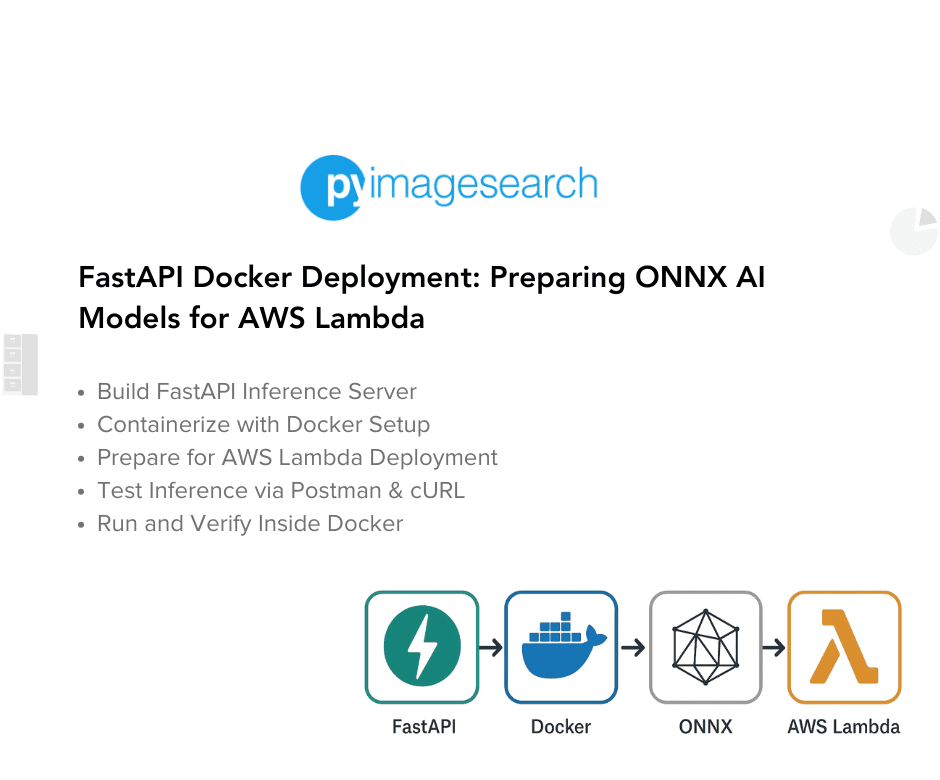

"In this tutorial, you will learn how to build a FastAPI-based AI inference server for serving an ONNX model, handle image preprocessing, and expose an API endpoint for real-time predictions. We'll also run the server in a Docker container, ensuring consistent environments before AWS Lambda deployment. By the end, you'll have a fully functional AI API running in Docker, ready for serverless deployment on AWS Lambda."
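As a rough illustration of what such a server might look like, here is a minimal sketch, not the tutorial's actual code. The model path `model.onnx`, the `/predict` route, the 224x224 input size, and the ImageNet mean/std values are all assumptions; substitute whatever your exported model expects. The server-only imports are deferred into `create_app` so the preprocessing helper can be used on its own.

```python
import io

import numpy as np

# Assumption: ImageNet statistics. Use the values your model was trained with.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def to_model_input(rgb: np.ndarray) -> np.ndarray:
    """Turn an HxWx3 uint8 RGB array into a 1x3xHxW float32 batch."""
    scaled = rgb.astype(np.float32) / 255.0
    normalized = (scaled - MEAN) / STD
    # HWC -> CHW, then add the batch dimension.
    chw = np.transpose(normalized, (2, 0, 1))
    return np.ascontiguousarray(chw[np.newaxis, ...])


def create_app(model_path: str = "model.onnx", size: int = 224):
    """Build the FastAPI app (hypothetical path and input size)."""
    import onnxruntime as ort
    from fastapi import FastAPI, File, UploadFile
    from PIL import Image

    app = FastAPI()
    # Load the model once at startup rather than once per request.
    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name

    @app.post("/predict")
    async def predict(file: UploadFile = File(...)):
        image = Image.open(io.BytesIO(await file.read())).convert("RGB")
        image = image.resize((size, size))
        logits = session.run(None, {input_name: to_model_input(np.asarray(image))})[0]
        return {"class_id": int(np.argmax(logits, axis=1)[0])}

    return app
```

If this were saved as a hypothetical `server.py`, it could be started with `uvicorn server:create_app --factory`. File uploads in FastAPI also require the `python-multipart` package to be installed.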

"In the previous lesson, we converted a PyTorch ResNetV2-50 model to ONNX, analyzed its structure, and validated its performance using ONNX Runtime. We compared the ONNX model against PyTorch in terms of model size and inference speed, where ONNX showed a 17% improvement for single-image inference and a 2x speed boost for batch inference (128 images). After confirming that ONNX maintains the same accuracy as PyTorch, we are now ready to serve the model via an API and containerize it for deployment."

"Running inference locally is fine for testing, but real-world AI applications require serving models via APIs so users or systems can send inference requests dynamically. A dedicated API:

- Enables real-time AI inference: Instead of running models locally for every request, an API makes it accessible to multiple users or applications.
- Simplifies integration: Any frontend (like a web app) or backend service can communicate with the AI model using simple API calls."
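To make the "simple API calls" point concrete, here is a sketch of a client that uses only the Python standard library. It assumes a hypothetical endpoint that accepts a multipart upload in a form field named `file` and returns JSON; the URL, field name, and response shape are illustrative, not taken from the tutorial.

```python
import json
import urllib.request
import uuid


def build_predict_request(
    url: str, image_bytes: bytes, filename: str = "image.jpg"
) -> urllib.request.Request:
    """Build a multipart/form-data POST carrying one image in a field named 'file'."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        url,
        data=head + image_bytes + tail,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def predict(url: str, image_path: str) -> dict:
    """Send an image file to the inference endpoint and decode the JSON reply."""
    with open(image_path, "rb") as f:
        request = build_predict_request(url, f.read())
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

A caller would then write something like `predict("http://localhost:8000/predict", "cat.jpg")`, where both the address and the file name are placeholders. Any other client that can send an HTTP POST, such as a browser form or another backend service, could talk to the same endpoint.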

FastAPI serves ONNX models to provide real-time AI inference through REST endpoints and image preprocessing pipelines. Docker containers create consistent runtime environments for reliable deployment and testing. Converting PyTorch ResNetV2-50 to ONNX preserves accuracy while improving single-image inference by 17% and achieving a 2x speedup for batch inference (128 images). APIs enable scalable, asynchronous inference accessible to multiple frontends and backend services via simple calls. Containerized FastAPI applications are ready for serverless deployment on AWS Lambda after validation and optimization. The workflow includes model conversion, ONNX Runtime validation, API implementation, Dockerization, and preparation for cloud deployment.

Read at PyImageSearch
