"In this tutorial, you'll learn how to build an interactive Streamlit Python-based UI that connects seamlessly with your vLLM-powered multimodal backend. You'll write a simple yet flexible frontend that lets users upload images, enter text prompts, and receive smart, vision-aware responses from the LLaVA model - served via vLLM's OpenAI-compatible interface. By the end, you'll have a clean multimodal chat interface that can be deployed locally or in the cloud - ready to power real-world apps in healthcare, education, document understanding, and beyond."

"As open-source vision-language models like LLaVA and BakLLaVA continue to evolve, there's growing demand for accessible interfaces that let users interact with these models using both images and natural language. After all, multimodal models are only as useful as the tools we have to explore them. Whether you're a data scientist testing image inputs, a product engineer prototyping a visual assistant, or a researcher sharing a demo - having a quick, browser-based UI can save you hours of effort."

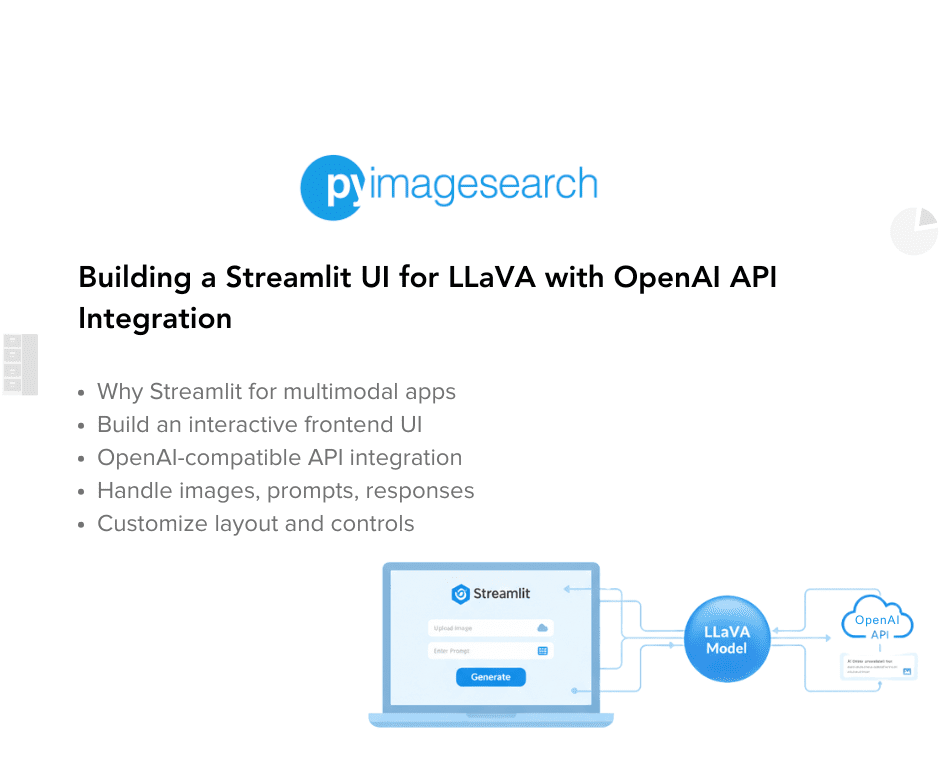

An interactive Streamlit UI, written in Python, connects to a vLLM-powered multimodal API, accepts image and text inputs, and returns vision-aware responses from LLaVA models through an OpenAI-compatible interface. The frontend provides file uploaders for images and documents, dropdowns for selecting models, and real-time outputs such as answers, captions, and annotations. It simplifies prototyping for data scientists, product engineers, and researchers by offering a browser-based interface that requires no front-end experience. The interface can be deployed locally or in the cloud and supports use cases in healthcare, education, and document understanding. Streamlit turns Python scripts into shareable web apps with minimal code.
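To make the image-plus-text request concrete, here is a minimal sketch of how a frontend might package an uploaded image and a prompt for an OpenAI-compatible chat completions endpoint. The helper name `build_chat_payload`, the default MIME type, and the `max_tokens` value are illustrative assumptions and are not taken from the tutorial. The message layout follows the OpenAI chat format, with the image sent as a base64 data URL.

```python
import base64


def build_chat_payload(
    image_bytes: bytes,
    prompt: str,
    model: str,
    mime_type: str = "image/png",  # assumption: caller knows the upload's type
    max_tokens: int = 256,         # assumption: arbitrary illustrative limit
) -> dict:
    """Build a chat-completions request body with one image and one text prompt.

    Hypothetical helper for illustration; not the tutorial's own code.
    """
    # Encode the raw image bytes as a base64 data URL so the image can
    # travel inside the JSON request body.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"

    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Multimodal content is a list of typed parts: text, then image.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }
```

In a Streamlit app, the bytes would typically come from the object returned by `st.file_uploader(...)` (via `.getvalue()`), and the resulting dictionary would be sent as JSON to the server's `/v1/chat/completions` route. The model string must match whatever model the vLLM server was started with.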

Read at PyImageSearch
