"A new benchmark study released by Vals AI suggests that both legal-specific and general large language models are now capable of performing legal research tasks with a level of accuracy equaling or exceeding that of human lawyers. The report, VLAIR - Legal Research, extends the earlier Vals Legal AI Report (VLAIR) from February 2025 to include an in-depth examination of how various AI products handle traditional legal research questions."

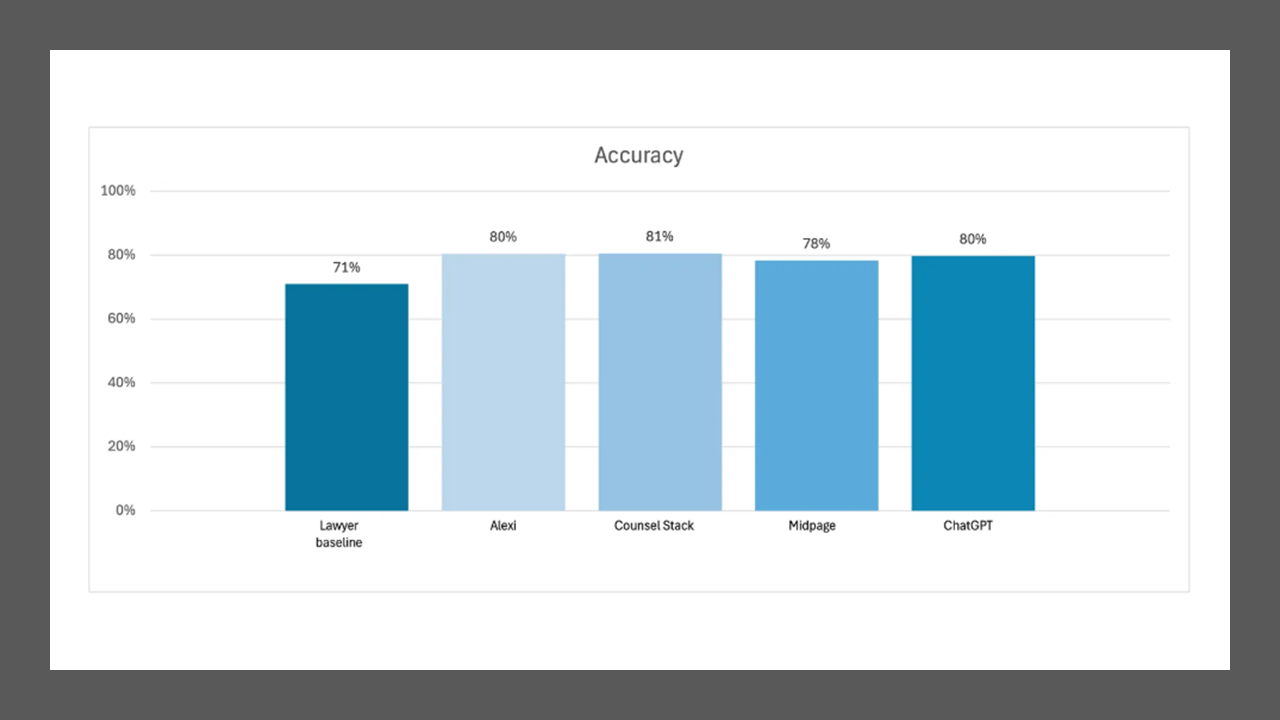

"This follow-up study compared three legal AI systems - Alexi, Counsel Stack and Midpage - and one foundational model, ChatGPT, against a lawyer baseline representing traditional manual research. All four AI products, including ChatGPT, scored within four points of each other, with the legal AI products performing better overall than the generalist product, and with all performing better than the lawyer baseline. The highest performer across all criteria was Counsel Stack."

"Unfortunately, the benchmarking did not include the three largest AI legal research platforms: Thomson Reuters, LexisNexis and vLex. According to spokespeople for Thomson Reuters and LexisNexis, neither company opted into participating in the study. They did not say why. vLex, however, originally agreed to have its Vincent AI participate in the study, but then withdrew before the final results were published."

Vals AI extended its February 2025 VLAIR evaluation, which covered tasks such as document extraction, document Q&A, summarization, redlining, transcript analysis, chronology generation, and EDGAR research, to benchmark how AI products handle traditional legal research questions. The study compared three legal AI systems (Alexi, Counsel Stack, and Midpage) and one foundational model (ChatGPT) against a lawyer baseline representing manual research. All four AI products scored within four points of each other, with the legal-specific platforms performing better overall than the generalist model and all four surpassing the lawyer baseline. Counsel Stack achieved the highest performance across all criteria. The three largest legal research vendors did not participate: Thomson Reuters and LexisNexis declined to opt in without giving a reason, while vLex initially agreed to enter its Vincent AI but withdrew before the final results were published.

Read at LawSites
