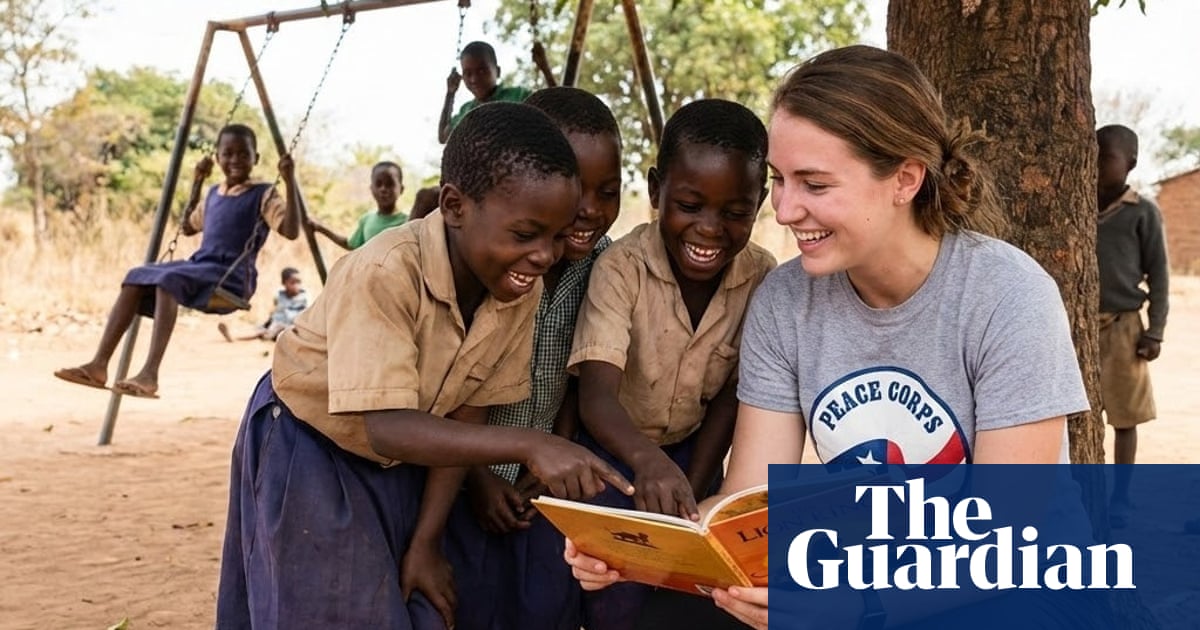

"Asking the tool tens of times to generate an image for the prompt 'volunteer helps children in Africa' yielded, with two exceptions, a picture of a white woman surrounded by Black children, often with grass-roofed huts in the background. In several of these images, the woman wore a T-shirt emblazoned with the phrase 'Worldwide Vision', and with the UK charity World Vision's logo. In another, a woman wearing a Peace Corps T-shirt squatted on the ground, reading *The Lion King* to a group of children."

"The first thing that I noticed was the old suspects: the white saviour bias, the linkage of dark skin tone with poverty and everything. Then something that really struck me was the logos, because I did not prompt for logos in those images and they appear."

"We haven't been contacted by Google or Nano Banana Pro, nor have we given permission to use or manipulate our own logo or misrepresent our work in this way."

Nano Banana Pro often produced images of white individuals surrounded by Black children in response to prompts about volunteering or aid in Africa. The generated scenes commonly included grass- or tin-roofed huts and T-shirts bearing the names or logos of organizations such as World Vision, Peace Corps, Save the Children, and Doctors Without Borders, even though no logos were requested. A researcher experimenting with the tool noticed both the persistent white saviour framing and the unrequested logos. A World Vision spokesperson stated that the charity had neither been contacted nor given permission for its logo to be used. The outcomes raise concerns about racialized portrayals, the misrepresentation of charities, and potential harms in AI image generation.

Read at www.theguardian.com
