"In our experiments, we used the following standard datasets from the machine learning literature to assess the effectiveness of DP-based Fair Learning."

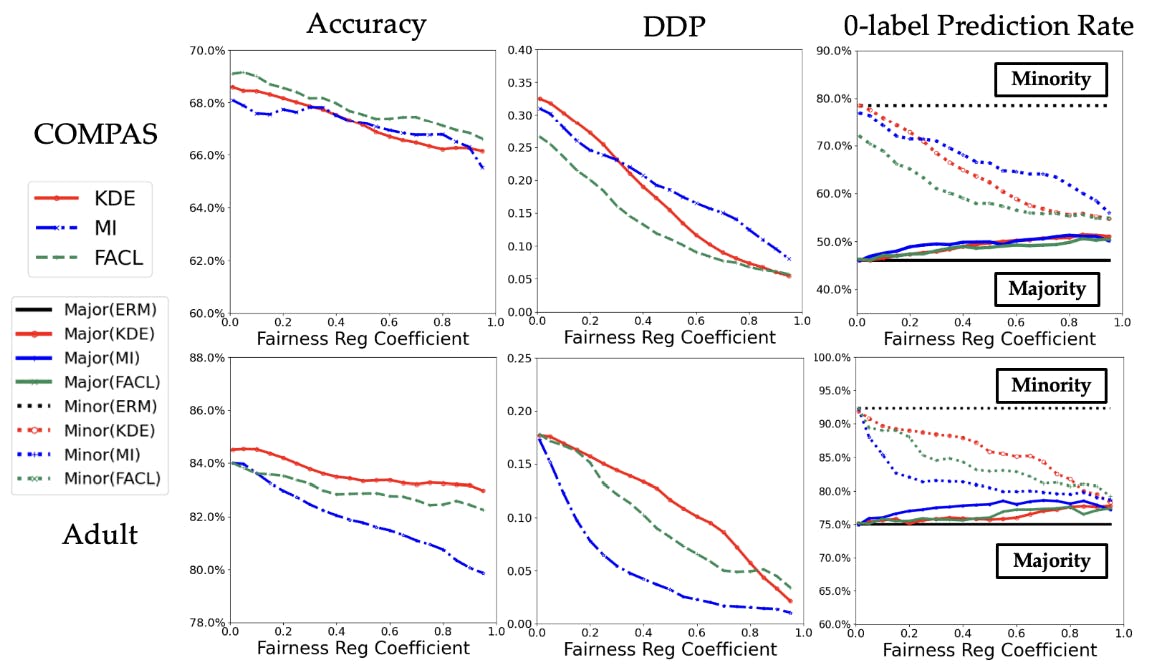

"To simulate a setting with imbalanced sensitive attribute distribution, we considered 2500 training and 750 test samples from the COMPAS dataset."
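A subsampling step of this kind can be sketched as follows. This is only an illustration under stated assumptions, not the authors' procedure: the quote gives the sample sizes (2500 and 750) but not the group ratio, so the 80/20 split, the helper name `imbalanced_subsample`, and the synthetic sensitive-attribute column are all hypothetical.

```python
import numpy as np

def imbalanced_subsample(sensitive, n_samples, majority_fraction, majority_value, seed=0):
    """Return row indices of a subsample, drawn without replacement, in which
    `majority_value` accounts for `majority_fraction` of the rows."""
    rng = np.random.default_rng(seed)
    sensitive = np.asarray(sensitive)
    majority_idx = np.flatnonzero(sensitive == majority_value)
    minority_idx = np.flatnonzero(sensitive != majority_value)
    n_major = int(round(n_samples * majority_fraction))
    n_minor = n_samples - n_major
    if n_major > majority_idx.size or n_minor > minority_idx.size:
        raise ValueError("not enough rows in one group for the requested split")
    chosen = np.concatenate([
        rng.choice(majority_idx, size=n_major, replace=False),
        rng.choice(minority_idx, size=n_minor, replace=False),
    ])
    rng.shuffle(chosen)  # avoid having all majority rows first
    return chosen

# Synthetic stand-in for a binary sensitive-attribute column (NOT real COMPAS data).
sensitive = np.random.default_rng(1).integers(0, 2, size=10_000)

# Assumed 80/20 group ratio; the source does not state the actual ratio.
train_idx = imbalanced_subsample(sensitive, 2500, 0.8, majority_value=1)

# Draw the test split from the rows not used for training, so the splits are disjoint.
remaining = np.setdiff1d(np.arange(sensitive.size), train_idx)
test_local = imbalanced_subsample(sensitive[remaining], 750, 0.8, majority_value=1, seed=1)
test_idx = remaining[test_local]
```

Sampling each group separately fixes the group proportions exactly instead of leaving them to chance, which makes the degree of imbalance a controlled experimental parameter.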

"Our analysis utilized the Adult dataset to observe model behavior in relation to gender-based sensitive attribute distribution in fair supervised learning."

"Utilizing CelebA, we evaluated model performance concerning gender disparities, emphasizing the importance of balanced representation in supervised learning."

The article discusses advances in fair supervised learning, specifically demographic parity (DP)-based methods and distributionally robust optimization. It describes empirical evaluations on the COMPAS, Adult, and CelebA datasets, with attention to how sensitive attributes such as race and gender are distributed in the data. The experiments also cover heterogeneous federated learning scenarios and examine inductive biases and fairness metrics. The reported results suggest that DP-based methods can yield effective and fair learning outcomes across diverse populations.

#fairness-in-machine-learning #differential-privacy #supervised-learning #federated-learning #bias-in-ai

Read at Hackernoon
