"In testing the predictive power of pretraining concept frequencies, the results show a clear scaling trend where increased frequency directly correlates with improved zero-shot performance across different prompting strategies and evaluation metrics."

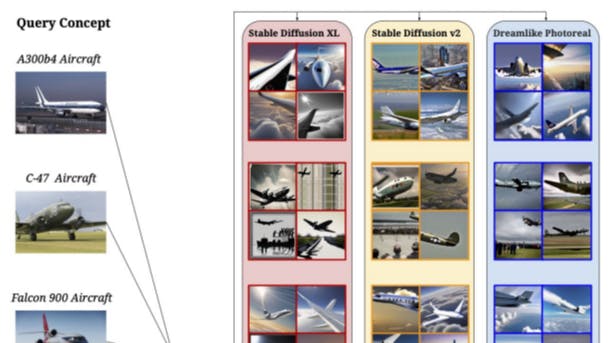

"Long-tailed concepts were assessed in the experimental setup, demonstrating that state-of-the-art T2I models can effectively generate images for these concepts through controlled prompting and retrieval evaluations."

Pretraining concept frequencies significantly influence the zero-shot performance of text-to-image (T2I) models, and the experimental results show a clear scaling trend. The study evaluated T2I models on long-tailed concepts using a controlled prompting strategy and a nearest-neighbor retrieval metric. Concepts that appeared more frequently in the pretraining datasets were consistently associated with better performance across diverse metrics. The quantitative results also confirmed that concept frequency remains predictive across both sampling methods and across different model types.
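To make the idea of a frequency-performance scaling trend concrete, here is a minimal sketch of one common way to quantify it. It assumes you already have, for each concept, a raw pretraining count and a per-concept zero-shot score. The function name `log_frequency_trend`, the use of log10 frequency, and the choice of Pearson correlation plus a least-squares slope are illustrative assumptions, not the study's exact analysis.

```python
import math
from typing import Sequence, Tuple


def log_frequency_trend(freqs: Sequence[int], scores: Sequence[float]) -> Tuple[float, float]:
    """Hypothetical helper: relate log10(concept frequency) to a per-concept score.

    Returns (pearson_r, slope), where slope is the least-squares change in
    score per 10x increase in pretraining frequency. Frequencies must be > 0.
    """
    if len(freqs) != len(scores) or len(freqs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    if any(f <= 0 for f in freqs):
        raise ValueError("frequencies must be positive to take a logarithm")

    # Work on a log scale so that each 10x jump in frequency is one unit on x.
    xs = [math.log10(f) for f in freqs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(scores) / len(scores)

    # Centered sums for covariance and the two variances.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in scores)
    if var_x == 0 or var_y == 0:
        raise ValueError("trend is undefined when either input is constant")

    pearson_r = cov / math.sqrt(var_x * var_y)
    slope = cov / var_x  # ordinary least-squares slope of score on log10(freq)
    return pearson_r, slope


# Toy numbers for illustration only; these are not results from the study.
r, slope = log_frequency_trend([10, 100, 1_000, 10_000], [0.2, 0.4, 0.6, 0.8])
print(f"r = {r:.3f}, gain per 10x frequency = {slope:.3f}")
```

Fitting on log frequency rather than raw counts keeps a handful of extremely common concepts from dominating the fit; a rank correlation such as Spearman's would be a reasonable alternative if the relationship is monotonic but not linear.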

Read at Hackernoon
