"Web-crawled pretraining datasets strongly influence the zero-shot performance of multimodal models such as CLIP and Stable Diffusion, and how often a concept appears in that data predicts how well the models handle it."

"The research finds that a concept's frequency in the pretraining data can predict zero-shot performance on that concept, underscoring the importance of dataset curation for training effective multimodal models."

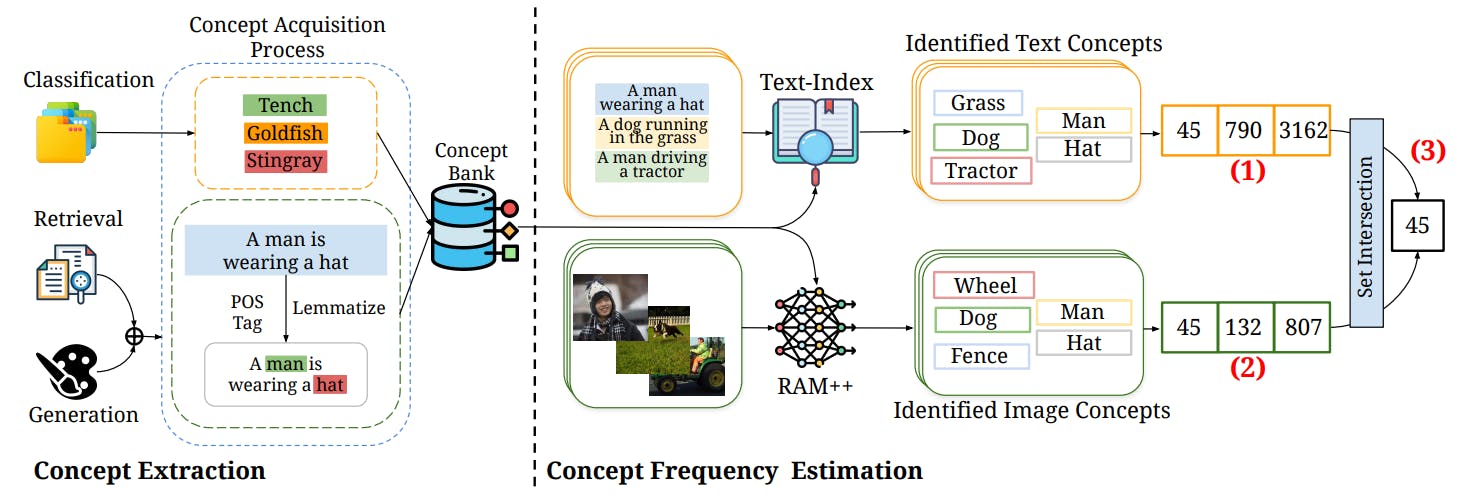

Pretraining datasets built from web-crawled data are central to the strong zero-shot performance of multimodal models, such as CLIP for classification and retrieval and Stable Diffusion for image generation. The study examines whether the frequency of a concept in the pretraining data predicts a model's zero-shot performance on that concept. Its experiments show that concepts appearing more often in these datasets yield better classification and retrieval performance. This underscores the need for careful dataset design, both for training models and for future AI applications.
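The kind of analysis described above can be illustrated with a minimal sketch: count how many captions mention each concept, then correlate the log of that count with a per-concept accuracy. This is not the study's pipeline. The naive whole-word caption matching, the single-word concepts, the `log(1 + count)` transform, and the Pearson correlation are all assumptions, and the captions and accuracies below are hypothetical toy values, not results from the research.

```python
import math
import re
from collections import Counter

def concept_frequencies(captions, concepts):
    """Count how many captions mention each single-word concept.

    Assumption: naive case-insensitive whole-word matching; the study's
    actual concept-matching method is not described in this summary.
    """
    counts = Counter()
    for caption in captions:
        tokens = set(re.findall(r"[a-z]+", caption.lower()))
        for concept in concepts:
            if concept in tokens:
                counts[concept] += 1
    return counts

def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical toy data, for illustration only.
captions = [
    "a dog on the beach",
    "a dog playing with a ball",
    "a cat asleep on a sofa",
    "a dog and a cat",
    "an axolotl in a tank",
]
concepts = ["dog", "cat", "axolotl"]
accuracy = {"dog": 0.9, "cat": 0.8, "axolotl": 0.3}  # hypothetical zero-shot accuracies

counts = concept_frequencies(captions, concepts)
log_freq = [math.log1p(counts[c]) for c in concepts]  # log(1 + count) avoids log(0)
r = pearson(log_freq, [accuracy[c] for c in concepts])
print(dict(counts), round(r, 3))  # r is positive for this toy data
```

Working in log frequency is a design choice here: raw counts in web-scale data span many orders of magnitude, so a log transform keeps a few very common concepts from dominating the correlation.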

Read at Hackernoon
