"I often explain responsible AI development through baking. If you bake a cake with salt instead of sugar, you cannot fix it afterwards. You have to start over. The metaphor lands because everyone understands irreversibility. Yet I keep meeting consulting firms and product teams, many in highly regulated sectors, who assume they can retrofit compliance later. Build fast, they say. Worry about transparency and accountability once the system works."

"They cannot. Most AI applications being developed today, especially those built on opaque foundation models with no visibility on data provenance, bias controls, or training processes, cannot be retrofitted to meet regulatory requirements. The EU AI Act does not allow you to add documentation, traceability, and human oversight as afterthoughts. These must be baked into the architecture from the beginning."

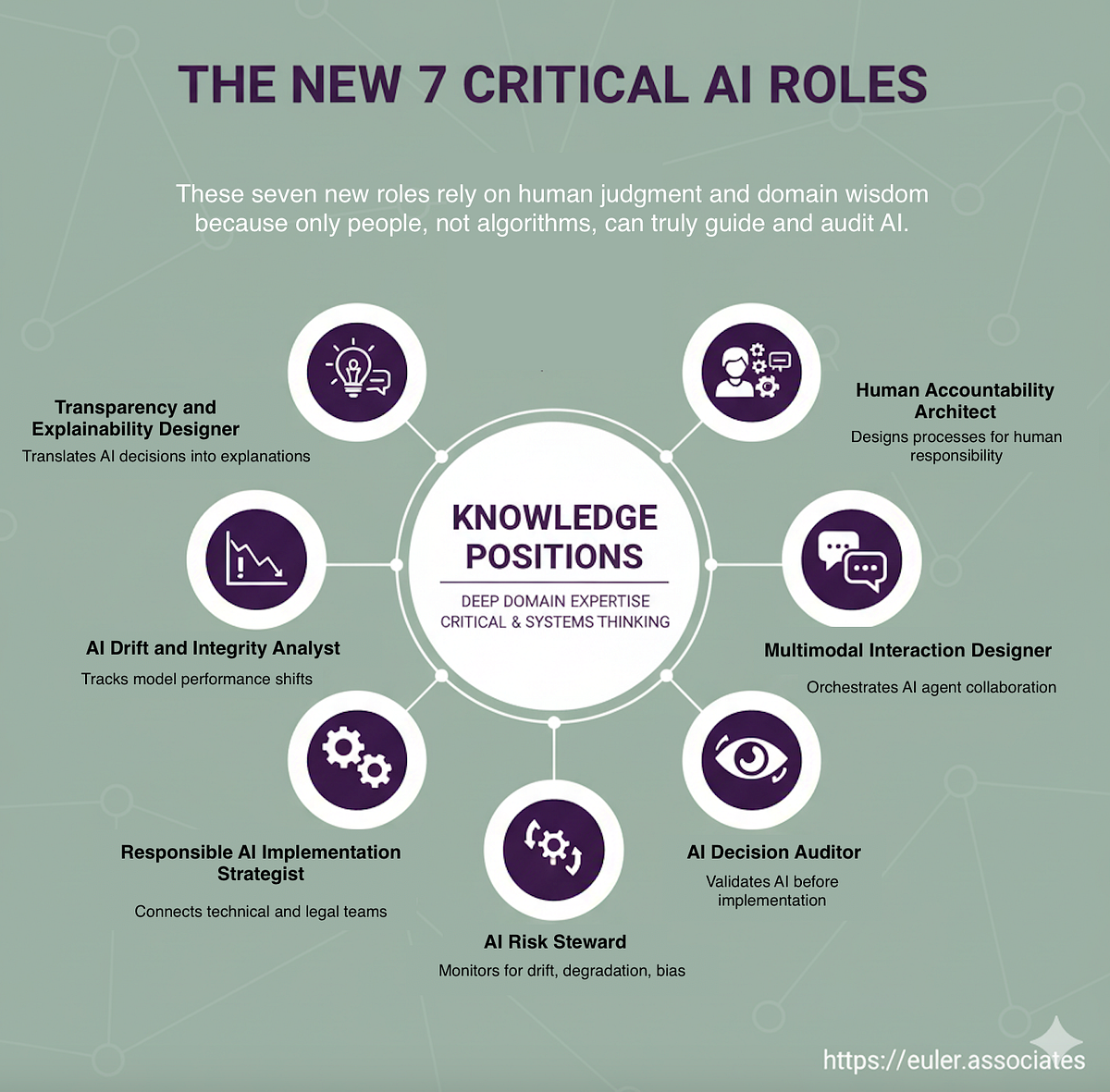

"Whilst headlines obsess over which jobs AI will eliminate, something more consequential is happening. We are creating an entire ecosystem of AI systems with no one qualified to govern them: a recent study by Fluree shows that the average enterprise runs 367 (!) SaaS apps, most of which have AI integrated. The issue isn't that the technology is ungovernable; it's that we're not cultivating the expertise required to do the governing."

"Think about what deploying a high-risk AI system actually requires. You need someone who can question whether the training data reflects reality or bias. Someone who can challenge whether the optimisation function aligns with human values. Someone who can monitor whether a model performing at 95% accuracy today has quietly degraded. Someone who can explain to a regulator, or an affected citizen, why the algorithm made the decision it did. These are not technical problems. They are judgement problems. And judgement cannot be automated."

Responsible AI development requires embedding compliance, documentation, traceability, and human oversight into system architecture from the start, because many AI systems cannot be retrofitted once deployed. Applications built on opaque foundation models, with no visibility into data provenance, bias controls, or training processes, are especially hard to bring into compliance after the fact. The EU AI Act does not allow these safeguards to be added as afterthoughts. Meanwhile, enterprises are running AI across hundreds of SaaS applications without cultivating the expertise needed to govern it. Deploying high-risk AI demands people who can assess whether training data reflects reality or bias, challenge whether the optimisation function aligns with human values, monitor model degradation, and explain decisions to regulators or affected citizens. These are judgement problems, and judgement cannot be automated.

Read at Medium
