Since prehistoric times, humans have had to decide whether the place they were in was safe to stay in, whether the strangers they met along the way were safe to be around, and whether the berries others gave them were safe to eat. In psychology, trust is the willingness to rely on someone or something despite uncertainty. In tech, trust means believing that a system is competent, predictable, aligned with your goals, and transparent about its limitations.

People begin judging a system even before they start using it. In the same way, when we meet a stranger, we start assessing them by how they dress, how they move, and how they speak to others. With AI systems, we can show what the product is capable of through sample prompts and examples of similar past projects. This lets users build trust before they ever engage with the software.

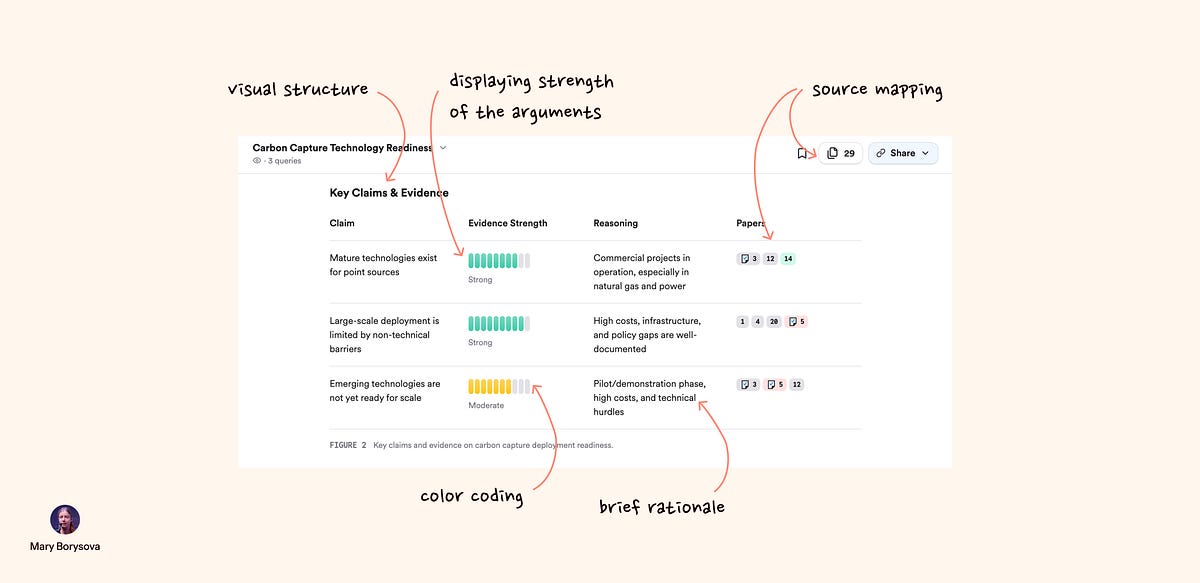

Trust is a fragile yet fundamental basis for human interaction, historically rooted in survival judgments about places, people, and food. Traditional tools produce deterministic, predictable results, whereas AI produces probabilistic outputs and can operate as an opaque black box. Calibrated trust requires users to know when to rely on AI and when to apply their own judgment; letting users test a system before committing to it, through sample prompts and previews, helps them assess its capabilities.

Read at Medium
