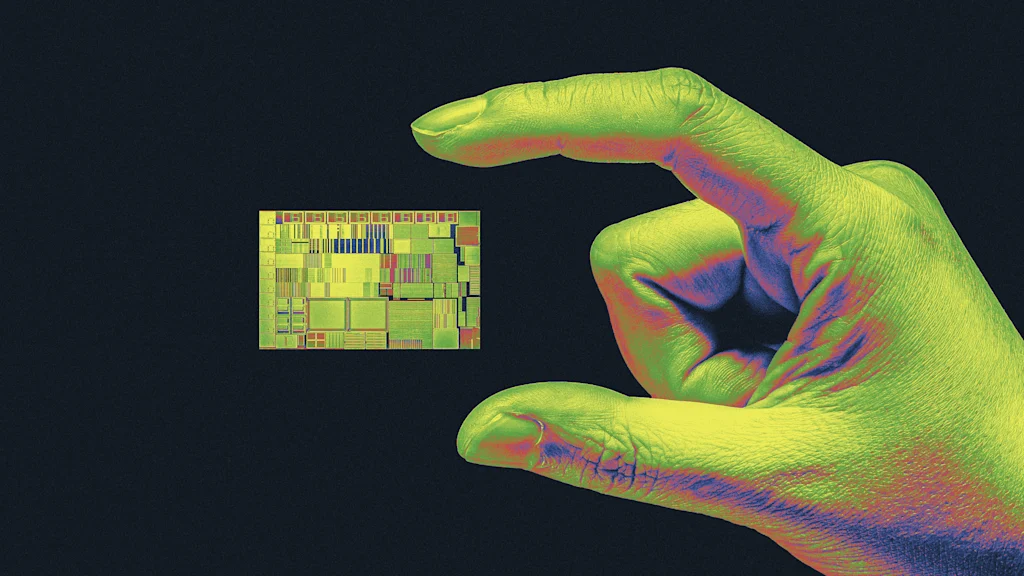

"So far, Nvidia has provided the vast majority of the processors used to train and operate large AI models like the ones that underpin ChatGPT. Tech companies and AI labs don't like to rely too much on a single chip vendor, especially as their need for computing capacity increases, so they're looking for ways to diversify. And so players like AMD and Huawei, as well as hyperscalers like Google and Amazon AWS, which just released its latest Trainium3 chip, are hurrying to improve their own flavors of AI accelerators, the processors designed to speed up specific types of computing tasks. Could the competition eventually reduce Nvidia, AI's dominant player, to just another AI chip vendor, one of many options, potentially shaking up the industry's technological foundations? Or is the rising tide of demand for AI chips big enough to lift all boats?"

Nvidia currently supplies the vast majority of processors used to train and operate large AI models, such as those underpinning ChatGPT. As their demand for computing capacity grows, tech companies and AI labs want to avoid depending on a single chip vendor, and they are looking for ways to diversify. Competitors such as AMD and Huawei, along with hyperscalers such as Google and Amazon AWS (which recently released its Trainium3 chip), are accelerating development of their own AI accelerators, processors designed to speed up specific types of computing tasks. The open question is whether this competition will reduce Nvidia to one vendor among many, or whether rising demand for AI chips is large enough to lift all suppliers. Fast Company's World Changing Ideas Awards deadline is Friday, December 12, at 11:59 p.m. PT.

Read at Fast Company
