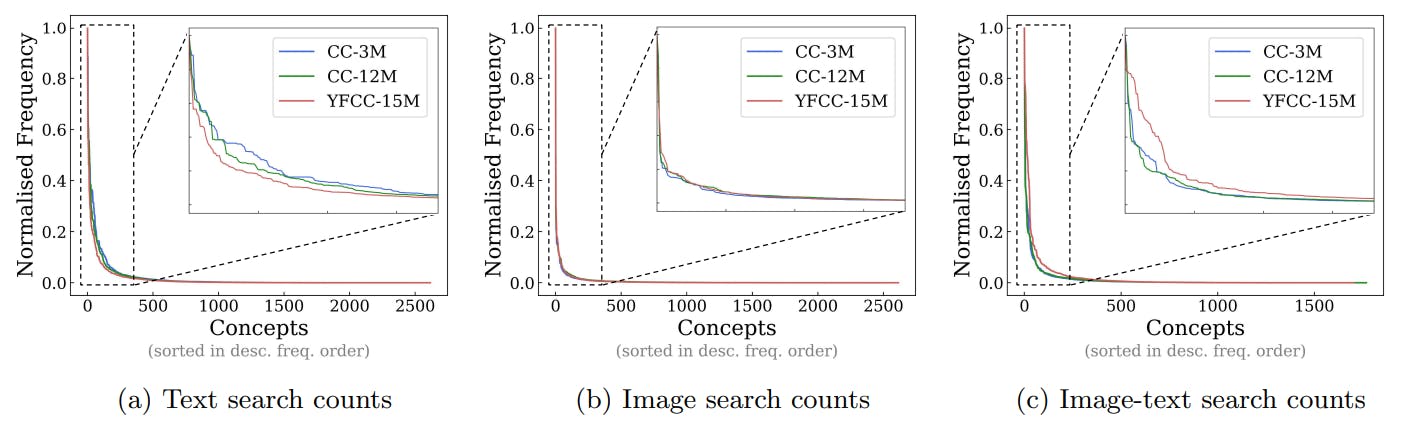

"Controlling for sample-level similarity between pretraining and downstream datasets is crucial for accurately measuring the impact of concept frequency on zero-shot performance."

"Concept frequency can significantly influence zero-shot performance across various models, supporting the hypothesis that higher frequencies in pretraining yield better downstream results."

"Experiments reveal that synthetic control of concept distributions can validate the relationship between concept frequency and downstream performance, reinforcing earlier findings in model evaluations."

"The study demonstrates a clear trend where pretraining frequencies correlate strongly with performance outcomes, suggesting an avenue for improving model training strategies."

How often a concept appears in pretraining data is a significant predictor of zero-shot performance on that concept across various models. Controlling for sample-level similarity between the pretraining and downstream datasets isolates the effect of concept frequency from the effect of near-duplicate samples. Experiments with synthetically controlled concept distributions further validate the relationship between concept frequency and downstream performance. The results show that concepts seen more frequently during pretraining are handled better downstream. These findings suggest that understanding and leveraging concept frequencies in pretraining data can improve model capabilities in real-world applications.
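As a rough illustration of the kind of check described above, the sketch below correlates per-concept pretraining counts with per-concept zero-shot accuracy. It is not the study's code: the function names, the concept names, and every number are hypothetical toy values, and taking the base-10 log of the counts is an assumption made here because raw frequencies tend to be heavy-tailed.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (assumes non-zero variance)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def frequency_performance_correlation(concept_counts, concept_accuracy):
    """Correlate log10(pretraining count) with zero-shot accuracy per concept.

    Concepts missing from either mapping, or with a zero count (log undefined),
    are skipped.
    """
    concepts = [
        c for c in sorted(concept_counts)
        if c in concept_accuracy and concept_counts[c] > 0
    ]
    log_freq = [math.log10(concept_counts[c]) for c in concepts]
    accuracy = [concept_accuracy[c] for c in concepts]
    return pearson(log_freq, accuracy)


# Hypothetical toy data, NOT results from the study.
toy_counts = {"dog": 100_000, "heron": 1_000, "axolotl": 10}
toy_accuracy = {"dog": 0.9, "heron": 0.6, "axolotl": 0.3}

r = frequency_performance_correlation(toy_counts, toy_accuracy)
print(f"correlation between log-frequency and accuracy: {r:.3f}")
```

A high correlation on real data would not be enough by itself to show that frequency drives performance. As the summary notes, similar samples shared between the pretraining and downstream sets would need to be controlled for first.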

#pretraining-data #zero-shot-performance #concept-frequency #model-evaluation #synthetic-data-testing

Read at Hackernoon
