From Fast Company

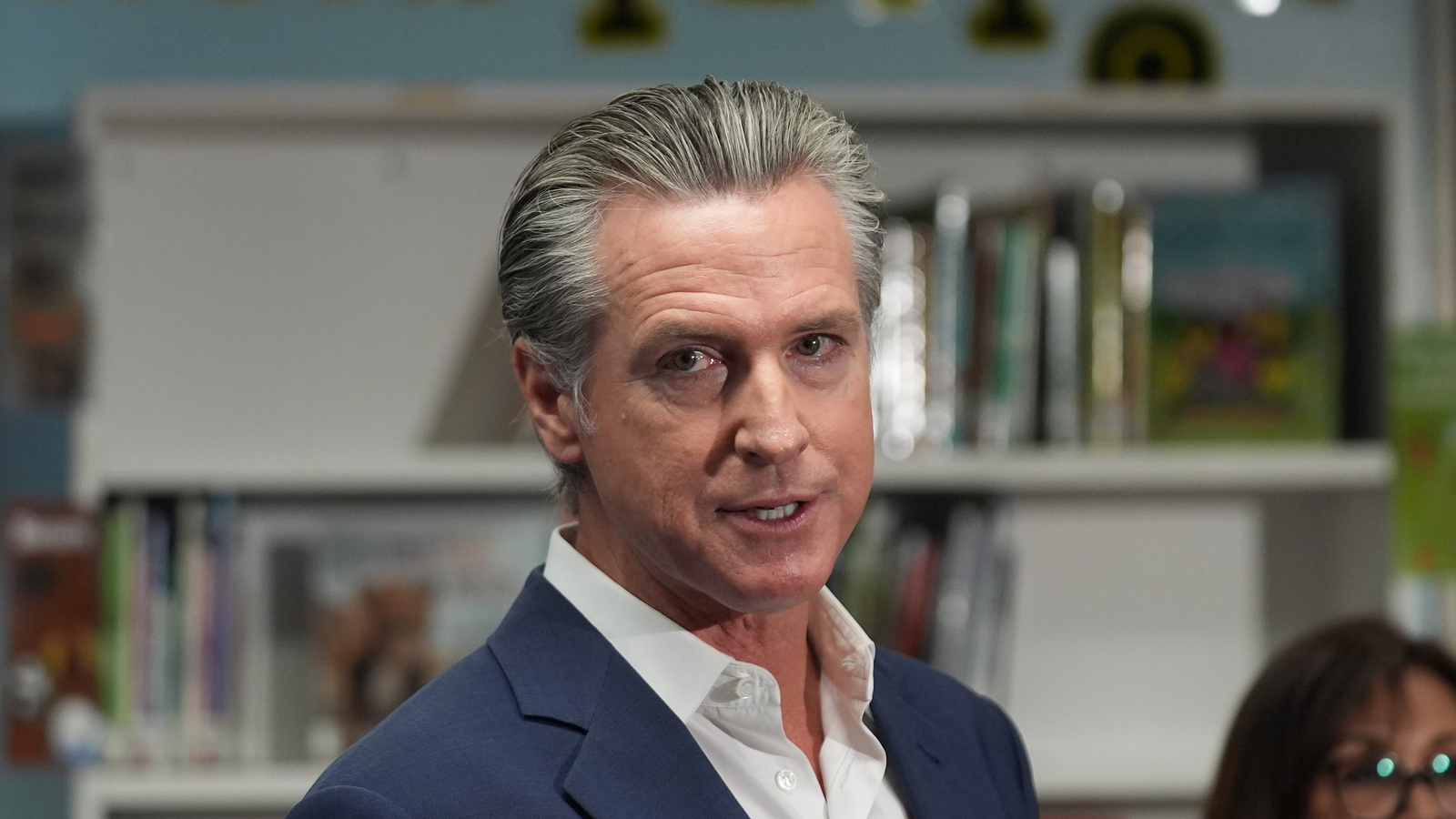

Governor Newsom vetoed a bill restricting kids' access to AI chatbots. Here's why

The bill would have banned companies from making AI chatbots available to anyone under 18 years old unless the businesses could ensure the technology couldn't engage in sexual conversations or encourage self-harm. "While I strongly support the author's goal of establishing necessary safeguards for the safe use of AI by minors, [the bill] imposes such broad restrictions on the use of conversational AI tools that it may unintentionally lead to a total ban on the use of these products by minors," Newsom said.