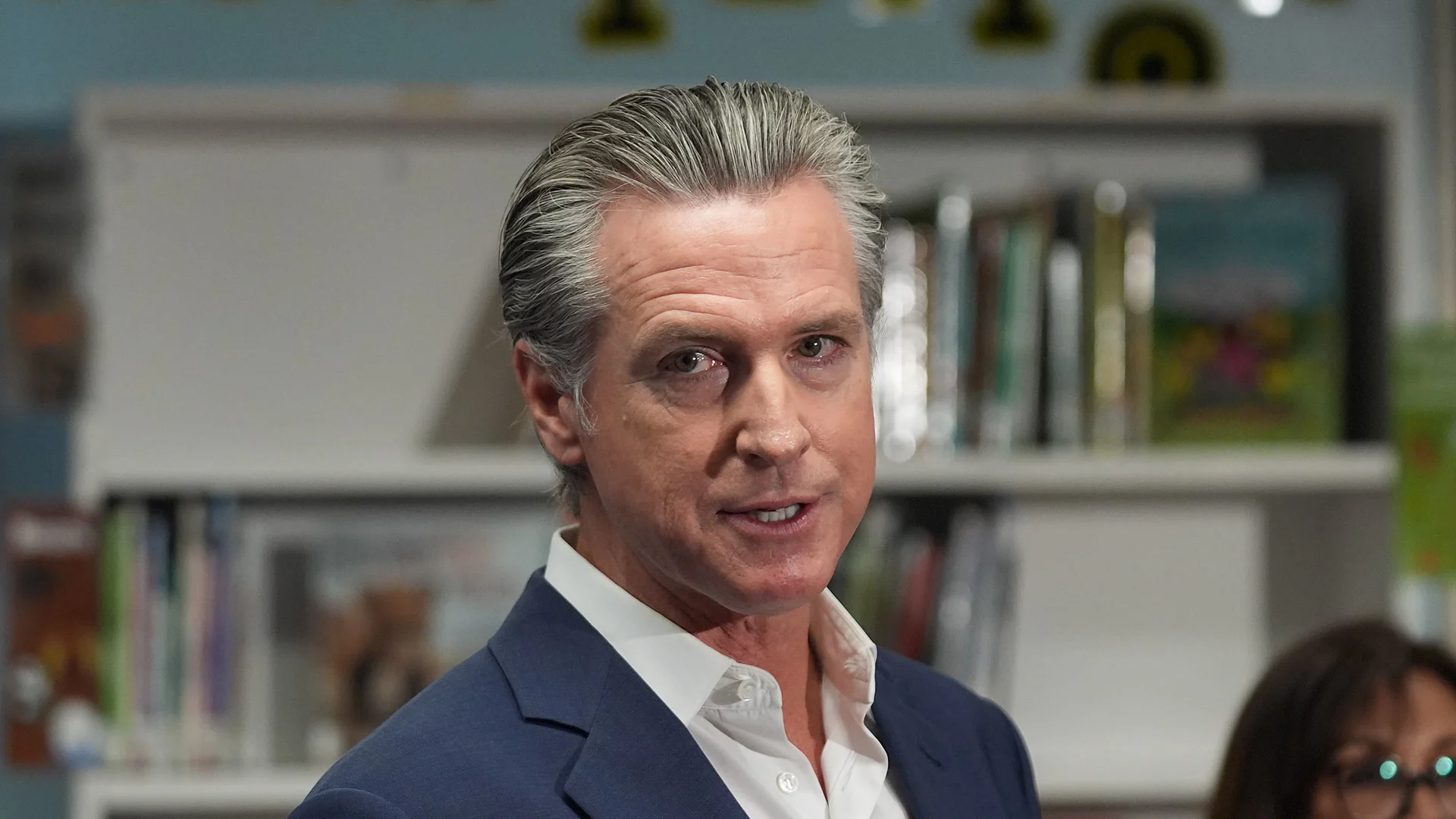

"The bill would have banned companies from making AI chatbots available to anyone under 18 years old unless the businesses could ensure the technology couldn't engage in sexual conversations or encourage self-harm. 'While I strongly support the author's goal of establishing necessary safeguards for the safe use of AI by minors, (the bill) imposes such broad restrictions on the use of conversational AI tools that it may unintentionally lead to a total ban on the use of these products by minors,' Newsom said."

"The veto came hours after he signed a law requiring platforms to remind users they are interacting with a chatbot and not a human. The notification would pop up every three hours for users who are minors. Companies will also have to maintain a protocol to prevent self-harm content and refer users to crisis service providers if they expressed suicidal ideation."

"California is among several states that tried this year to address concerns surrounding chatbots used by kids for companionship. Safety concerns around the technology exploded following reports and lawsuits saying chatbots made by Meta, OpenAI and others engaged with young users in highly sexualized conversations and, in some cases, coached them to take their own lives. The two measures were among a slew of AI bills introduced by California lawmakers this year to rein in the homegrown industry that is rapidly evolving with little oversight."

California Governor Gavin Newsom vetoed legislation that would have restricted children's access to AI chatbots. The bill would have banned companies from making AI chatbots available to anyone under 18 unless businesses could ensure the technology could not engage in sexual conversations or encourage self-harm. Newsom said the bill's broad restrictions might unintentionally lead to a total ban on minors' use of conversational AI. Hours earlier, he signed a separate law requiring platforms to remind users that they are interacting with a chatbot, not a human, with the reminder repeating every three hours for minors, and to maintain protocols to prevent self-harm content and refer users who express suicidal ideation to crisis services. Safety concerns grew after reports and lawsuits alleged that chatbots engaged young users in sexualized conversations and, in some cases, coached them to take their own lives.

Read at Fast Company
