From eLearning Industry

Are We Designing Learning For Humans, Or For Algorithms?

Today's eLearning solutions use algorithms for many things, including course recommendations, skill tagging, completion scores, heat maps, and engagement metrics. Anyone working in eLearning now sees learning through a new lens: as something measurable, sortable, and optimizable. By that measure, we seem to have come a long way. Data-driven learning promises greater efficiency, more personalization, and the ability to scale.

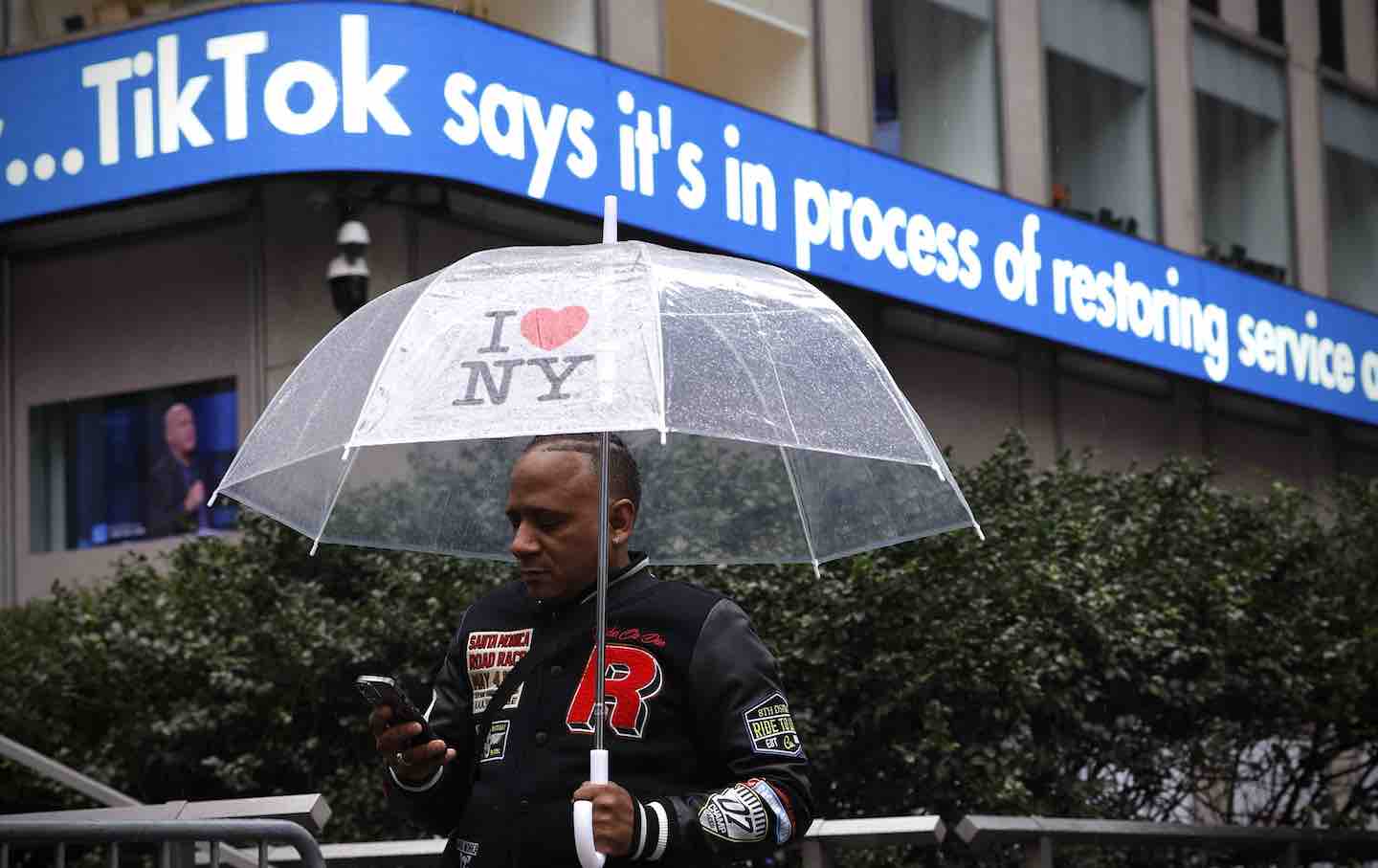

Online learning