#lora

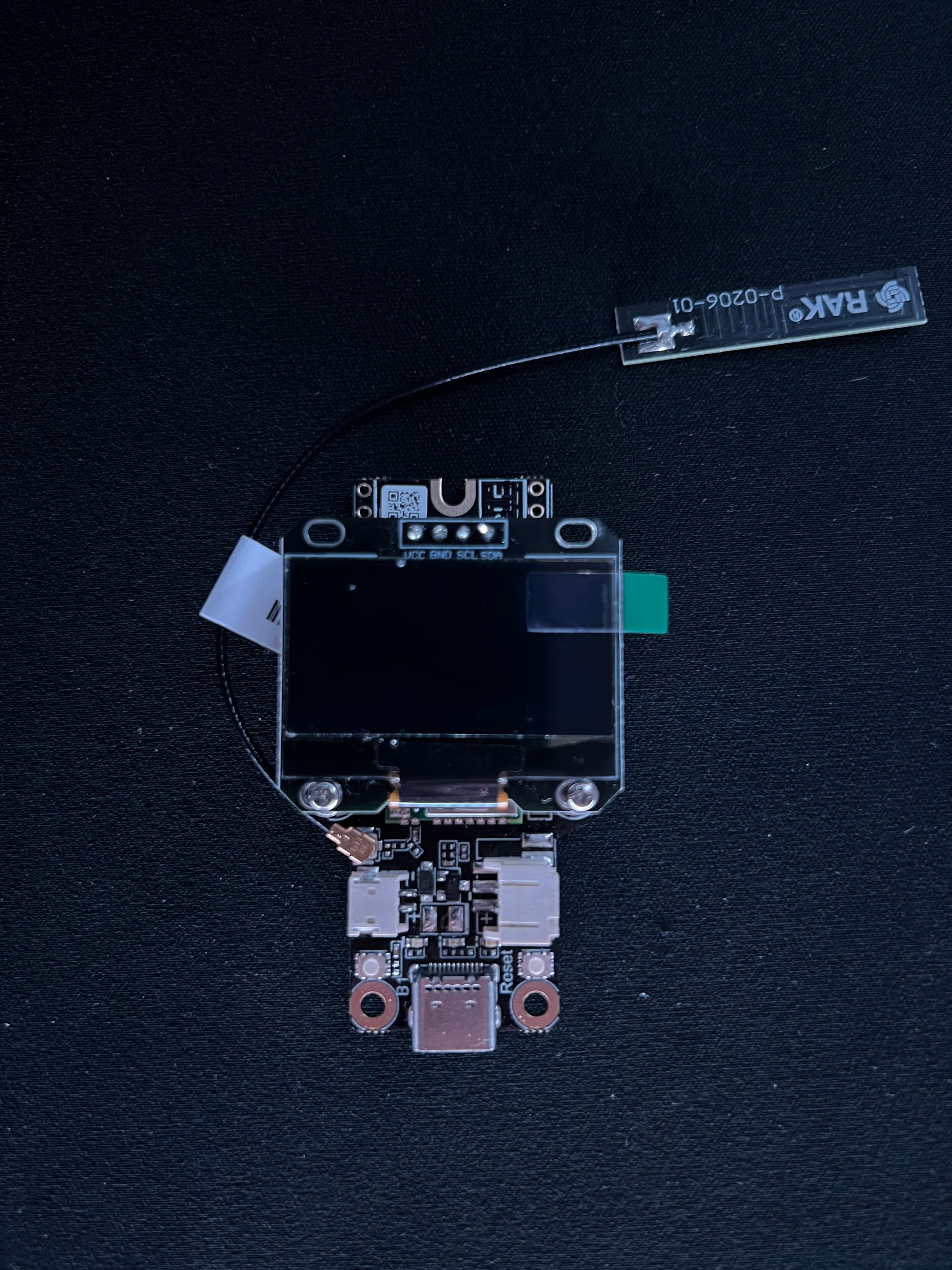

#meshtastic #mesh-networking #esp32 #fine-tuning #off-grid-communication #encrypted-messaging #low-power-devices

Artificial intelligence

from InfoQ

4 months ago

Thinking Machines Releases Tinker API for Flexible Model Fine-Tuning

Thinking Machines released Tinker, an API that simplifies fine-tuning open-weight language models by managing infrastructure, GPU allocation, and checkpoints through simple Python primitives.

from Adrelien - Your Source for Tech News, Tutorials, and Reviews

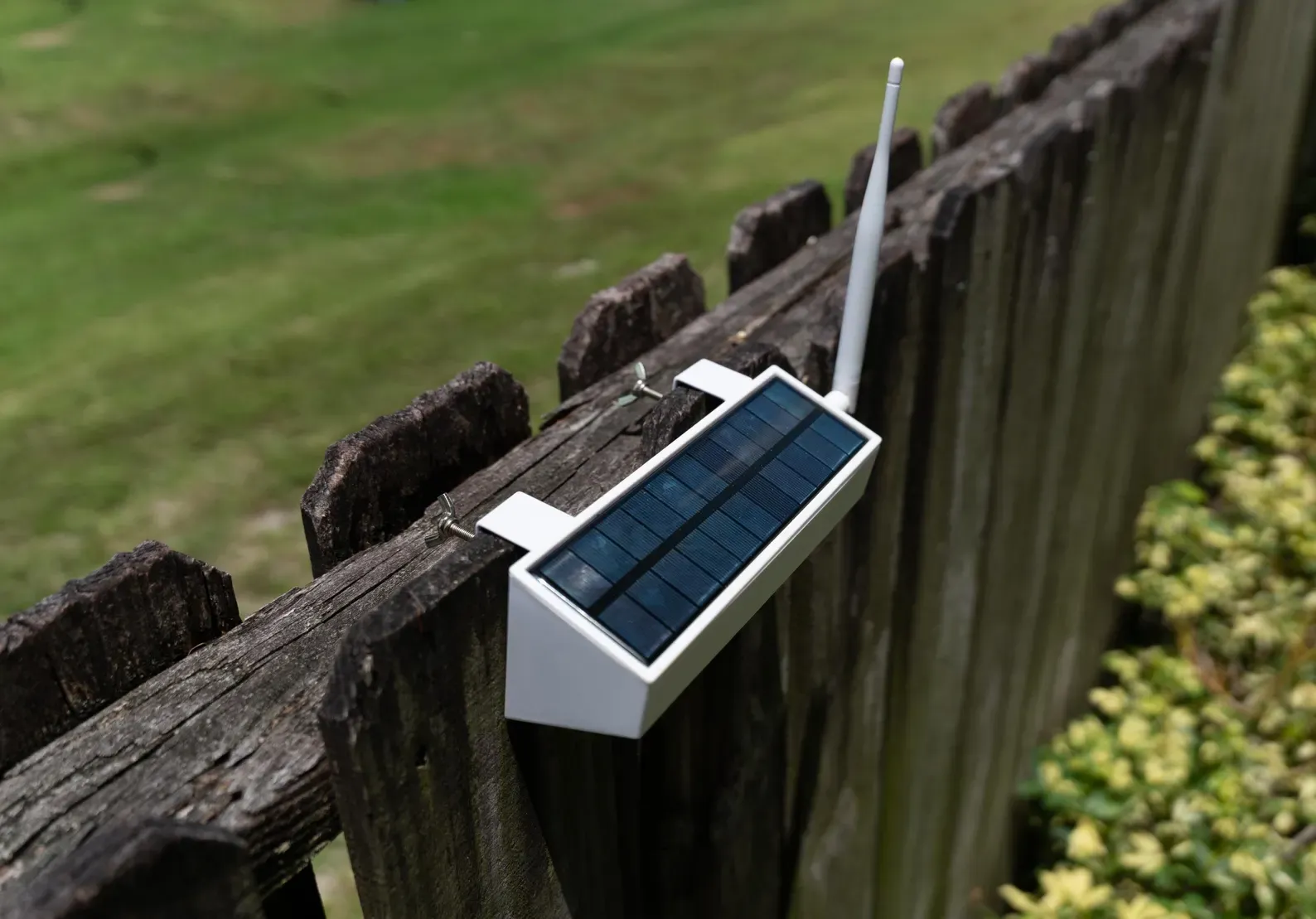

7 months ago

Wio Tracker L1 Pro: Meshtastic Handheld w/ Solar Support Killer Price

The Wio Tracker L1 Pro offers GPS, solar charging, joystick controls, and a 2.5-day battery life for $42.90.

Gadgets

from Hackernoon

The Last Rank We Need? QDyLoRA's Vision for the Future of LLM Tuning

QDyLoRA offers an efficient and effective technique for LoRA-based fine-tuning of LLMs on downstream tasks, eliminating the need to tune multiple models to find the optimal rank.

Artificial intelligence