"How do you ensure that you make optimal use of all available compute when you connect multiple data centers? "That's not an easy problem to solve," says Lund. Certainly not if the goal is to do so as efficiently as possible. "Of course, you can resend packets if they haven't arrived, but AI training doesn't stop there," he continues. They use checkpointing to work optimally."

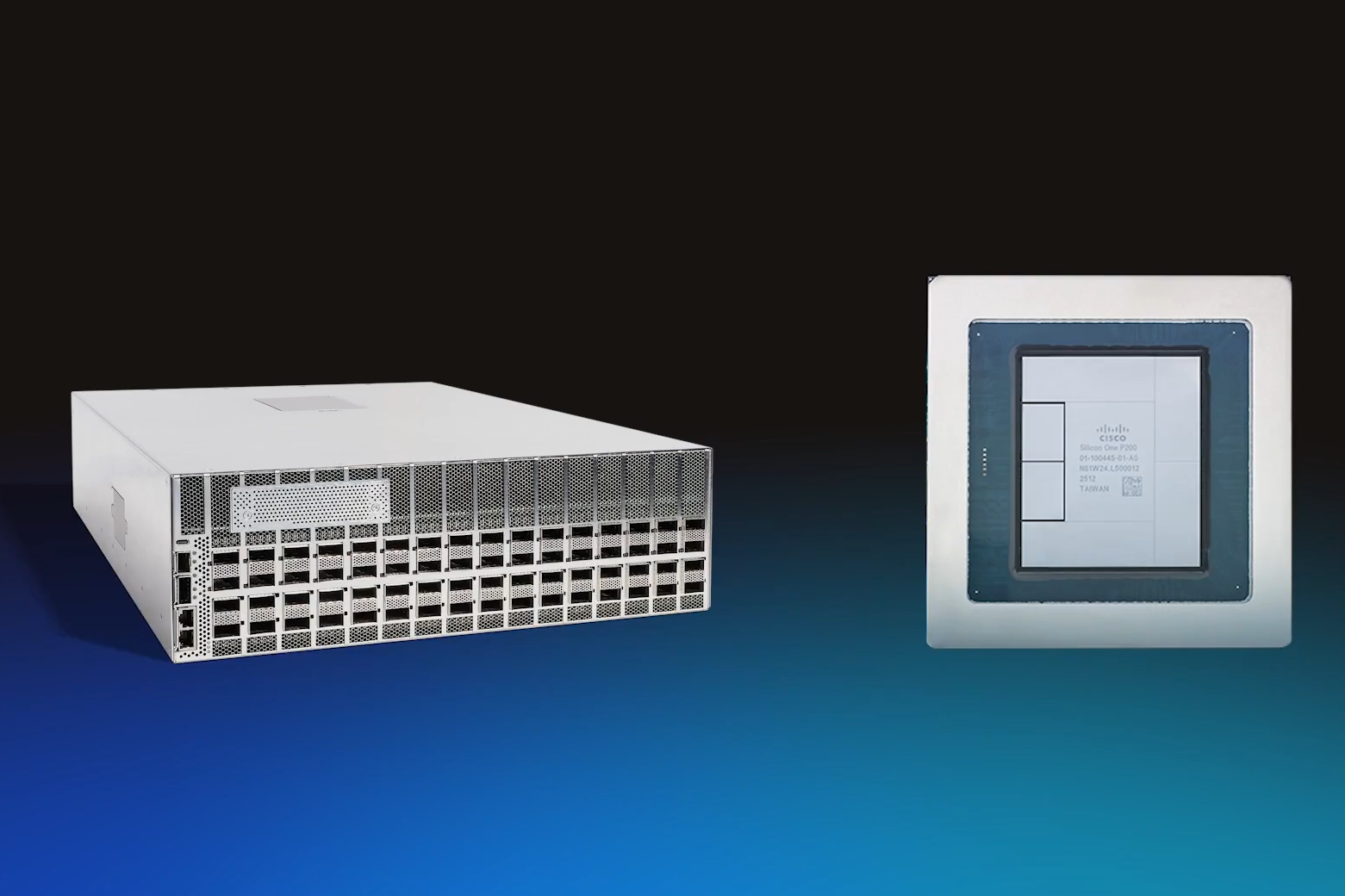

"Deep buffering with Silicon One P200 in 8223 Router Cisco believes that it has solved the above problem with the new Silicon One P200 chip. This chip offers a throughput of 51.2 Tbps (20 billion packets per second) and can scale up to a total bandwidth of up to 3 exabits per second. That should be more than enough for the time being."

The 8223 Router paired with the Silicon One P200 delivers 51.2 Tbps throughput (20 billion packets per second) and deep buffering to support scale-across AI workloads across multiple data centers. The P200 can scale total bandwidth up to 3 exabits per second. Scale-across addresses AI models that have grown too large for a single data center. Inter-datacenter connections must be highly efficient to avoid lost packets, checkpointing inefficiencies, and idle GPU cycles. Deep buffering and very high throughput aim to prevent expensive GPUs from sitting idle and to preserve training continuity.

Read at Techzine Global

Unable to calculate read time

Collection

[

|

...

]