"Uber has shared details of Ceilometer, an internal adaptive benchmarking framework designed to evaluate infrastructure performance beyond application-level metrics. The system helps Uber qualify new cloud SKUs, validate infrastructure changes, and measure efficiency initiatives using repeatable, production-like benchmarks. As infrastructure heterogeneity increases across cloud providers and hardware generations, Uber built Ceilometer to provide consistent, data-driven performance signals across environments. At Uber scale, benchmarking infrastructure has historically been a fragmented and manual process."

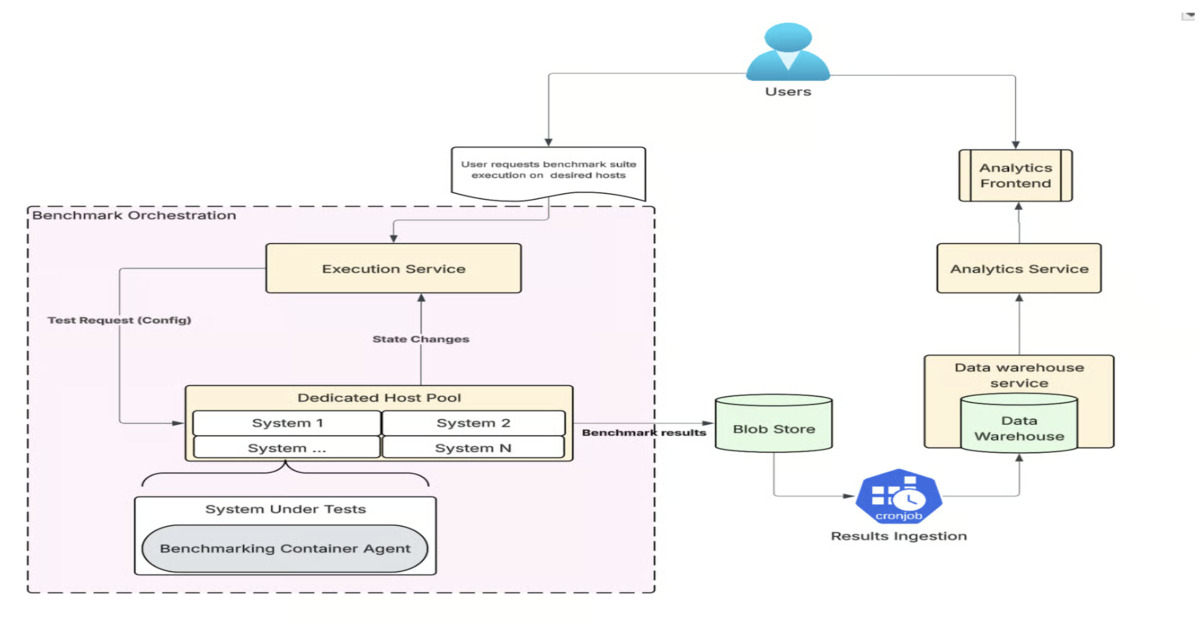

"Ceilometer is architected as a distributed system that coordinates benchmark execution across dedicated machines. Tests are executed in parallel to reflect realistic workload behavior, with raw outputs stored in durable blob storage. Results are validated, normalized, and ingested into Uber's centralized data warehouse, where they can be queried and analyzed alongside production metrics. According to Uber engineers, this design allowed them to identify performance regressions, configuration inefficiencies, or hardware-level differences using a consistent data model."
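The flow described above (parallel execution, durable raw output, validation, normalization, ingestion) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Uber's implementation: the function names, the record fields, and the in-memory `BLOB_STORE` and `WAREHOUSE_ROWS` stand-ins are all hypothetical, and the benchmark values are fixed placeholder numbers rather than measurements.

```python
import json
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins: a dict plays the role of blob storage and a list
# plays the role of a warehouse table. Neither reflects Uber's actual systems.
BLOB_STORE = {}
WAREHOUSE_ROWS = []


def run_benchmark(host, name):
    """Pretend to run one benchmark and return its raw output as JSON text."""
    # Placeholder value derived from the host name; not a real measurement.
    raw = {"host": host, "benchmark": name,
           "value": 1000.0 + len(host), "unit": "ops/s"}
    return json.dumps(raw)


def store_raw(host, name, raw_text):
    """Keep the untouched raw output so results can be re-processed later."""
    key = f"raw/{host}/{name}.json"
    BLOB_STORE[key] = raw_text
    return key


def validate(record):
    """Accept only records with all required fields and a non-negative number."""
    required = {"host", "benchmark", "value", "unit"}
    return (required <= record.keys()
            and isinstance(record["value"], (int, float))
            and record["value"] >= 0)


def normalize(record, blob_key):
    """Map a raw record onto one consistent row shape for querying."""
    return {
        "host": record["host"],
        "benchmark": record["benchmark"],
        "metric_value": float(record["value"]),
        "metric_unit": record["unit"],
        "raw_blob_key": blob_key,
    }


def run_suite(hosts, benchmarks):
    """Run every benchmark on every host in parallel, then ingest valid rows."""
    jobs = [(h, b) for h in hosts for b in benchmarks]
    with ThreadPoolExecutor(max_workers=4) as pool:
        raw_outputs = list(pool.map(lambda job: run_benchmark(*job), jobs))

    rows = []
    for (host, name), raw_text in zip(jobs, raw_outputs):
        key = store_raw(host, name, raw_text)
        record = json.loads(raw_text)
        if validate(record):
            rows.append(normalize(record, key))
    WAREHOUSE_ROWS.extend(rows)
    return rows
```

Calling `run_suite(["host-a", "host-bb"], ["cpu", "mem"])` yields four normalized rows, each carrying a `raw_blob_key` that points back to its stored raw output. Keeping the raw output separate from the normalized row is the design point the sketch tries to show: a change to the normalization logic can be replayed over stored outputs without rerunning benchmarks.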

Ceilometer automates repeatable, production-like benchmarks to qualify cloud SKUs, validate infrastructure changes, and measure efficiency across heterogeneous environments. The platform centralizes orchestration, execution, result ingestion, and analysis to replace one-off scripts and spreadsheets, enabling standardized and reproducible comparisons across servers, workloads, and environments. Benchmarks run in parallel on dedicated machines, with raw outputs stored in durable blob storage and normalized for ingestion into a centralized data warehouse. The framework supports synthetic benchmarks and stateful workload integrations to characterize CPU, memory, network, storage, and database performance and to detect regressions and configuration inefficiencies.
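Once results share one data model, comparing a candidate SKU or configuration against a baseline becomes a small calculation. The article does not say how Ceilometer flags regressions, so the following is only one plausible approach: it compares medians of repeated runs and applies a relative threshold. The function names and the 5% default threshold are assumptions chosen for illustration.

```python
from statistics import median


def relative_change(baseline, candidate):
    """Relative difference between the median candidate and median baseline run."""
    base = median(baseline)
    return (median(candidate) - base) / base


def is_regression(baseline, candidate, threshold=0.05, higher_is_better=True):
    """Flag a regression when the candidate is worse by more than `threshold`.

    The 5% default is an assumed value, not one taken from the article.
    """
    change = relative_change(baseline, candidate)
    if higher_is_better:
        # Throughput-style metrics: a drop is bad.
        return change < -threshold
    # Latency-style metrics: an increase is bad.
    return change > threshold
```

For a throughput metric, `is_regression([100, 102, 98], [90, 91, 89])` returns `True` because the median drops by 10%. For a latency metric, pass `higher_is_better=False` so that an increase is what gets flagged.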

Read at InfoQ
