"Uber has built HiveSync, a sharded batch replication system that keeps Hive and HDFS data synchronized across multiple regions, handling millions of Hive events daily. HiveSync ensures cross-region data consistency, enables Uber's disaster recovery strategy, and eliminates inefficiency caused by the secondary region sitting idle, which previously incurred hardware costs equal to the primary, while still maintaining high availability. Built initially on the open-source Airbnb ReAir project, HiveSync has been extended with sharding, DAG-based orchestration, and a separation of control and data planes."

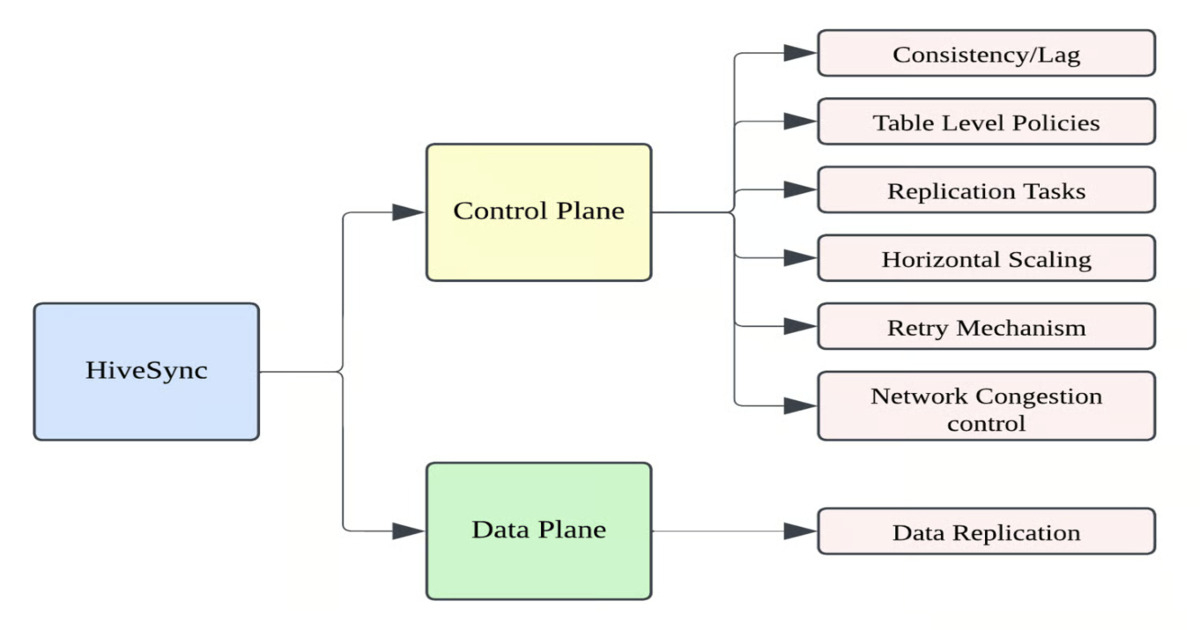

"HiveSync separates the control plane, which orchestrates jobs and manages state in a relational metadata store, from the data plane, which performs HDFS and Hive file operations. A Hive Metastore event listener captures DDL and DML changes, logging them to MySQL and triggering replication workflows. Jobs are represented as finite-state machines, supporting restartability and robust failure recovery. A DAG manager enforces shard-level ordering"
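To make the finite-state-machine idea concrete, here is a minimal sketch of a restartable job. The state names, the transition table, and the `ReplicationJob` class are illustrative assumptions, not taken from HiveSync; a real control plane would presumably persist each transition to its metadata store rather than keep it in memory.

```python
from enum import Enum


class JobState(Enum):
    # Hypothetical states; the article does not list HiveSync's actual ones.
    PENDING = "pending"
    RUNNING = "running"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


# Allowed transitions. FAILED -> PENDING models a restart after a failure.
TRANSITIONS = {
    JobState.PENDING: {JobState.RUNNING},
    JobState.RUNNING: {JobState.SUCCEEDED, JobState.FAILED},
    JobState.FAILED: {JobState.PENDING},
    JobState.SUCCEEDED: set(),
}


class ReplicationJob:
    """In-memory stand-in for a job row in the metadata store."""

    def __init__(self, job_id):
        self.job_id = job_id
        self.state = JobState.PENDING
        self.history = [JobState.PENDING]

    def advance(self, new_state):
        # Reject any transition the table does not allow.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"illegal transition {self.state.name} -> {new_state.name}"
            )
        self.state = new_state
        self.history.append(new_state)
```

Because every legal move is listed in one table, a failed job can be put back in the queue with `job.advance(JobState.PENDING)`, while a finished job cannot be rerun by accident.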

HiveSync implements sharded cross-region replication for Hive and HDFS to preserve disaster readiness and enable analytics access without duplicating compute in secondary regions. Sharding divides tables and partitions into independent units for parallel replication and fine-grained fault tolerance. The design separates a control plane that orchestrates jobs and stores state in a relational metadata store from a data plane that performs HDFS and Hive file operations. A Hive Metastore event listener captures DDL and DML changes and logs them to MySQL. Replication jobs are modeled as finite-state machines, supporting restartability and robust failure recovery, and a DAG manager enforces shard-level ordering. Uber uses a hybrid transfer strategy: RPC for small jobs and DistCp on YARN for large transfers.
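The sharding and hybrid-transfer ideas can be sketched in a few lines. Everything specific here is an assumption made for illustration: the article does not say how tables are assigned to shards or what size separates a "small" job from a "large" one, so the hash-based assignment, `NUM_SHARDS`, and `SMALL_JOB_BYTES` below are hypothetical.

```python
import zlib

SMALL_JOB_BYTES = 256 * 1024 * 1024  # hypothetical cutoff, not from the article
NUM_SHARDS = 8                       # hypothetical shard count


def shard_for(table_name, num_shards=NUM_SHARDS):
    """Map a table to a shard with a stable hash (assumed scheme).

    A stable hash keeps all events for one table on the same shard,
    so they can be ordered independently of other shards.
    """
    return zlib.crc32(table_name.encode("utf-8")) % num_shards


def choose_transfer(size_bytes, threshold=SMALL_JOB_BYTES):
    """Pick the copy path: RPC for small jobs, DistCp on YARN for large ones."""
    return "rpc" if size_bytes < threshold else "distcp"
```

For example, `choose_transfer(1024)` selects the RPC path, while a 10 GiB job falls through to DistCp, where the cost of launching a YARN application is easier to justify.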

Read at InfoQ
